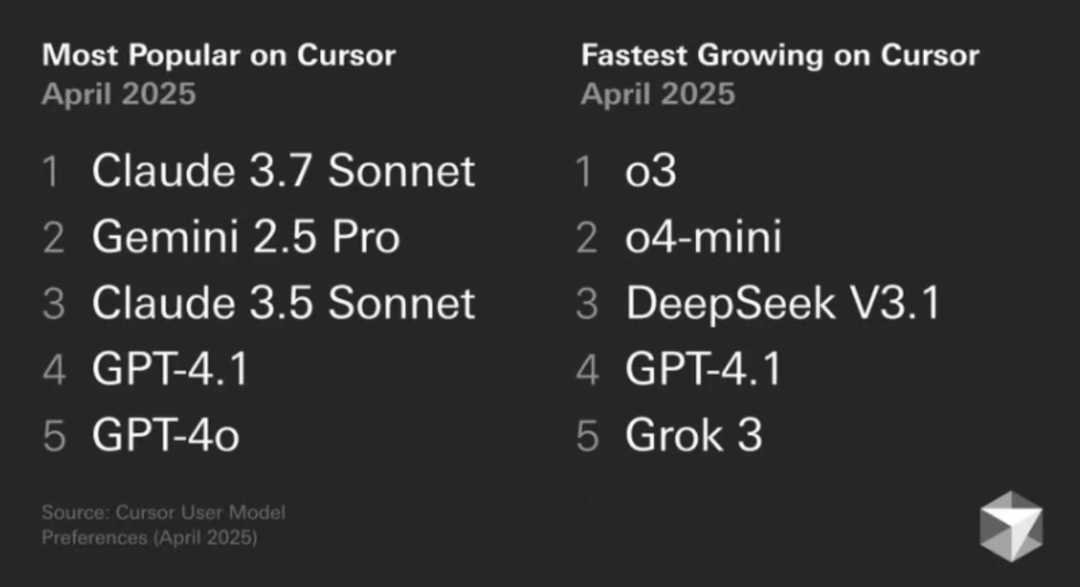

Recently, the AI-assisted programming tool Cursor published a list of the AI models most favored by developers, and the data shows Claude 3.7 Sonnet in the top spot.

This official data certainly reflects the choices of a significant portion of developers. But does it mean developers should simply make Claude 3.7 Sonnet their default? Real-world usage may be more complicated.

The habits of some senior developers show model selection ratios quite different from the official list. In Cursor, for example, such a developer may use Gemini 2.5 Pro for as much as 80% of requests, Claude 3.7 Sonnet for 10%, and GPT-3.5 and GPT-4.1 for 5% each. In other command-line or code-editing environments (such as Roo or Cline), Grok 3 may account for as much as 90% of usage, with the remaining 10% going to Gemini 2.5 Flash; other models are rarely called.

Behind this difference is a combination of considerations based on actual task requirements, cost-effectiveness, and model characteristics. Here are some principles and preference settings worth considering when selecting and using these AI coding assistants.

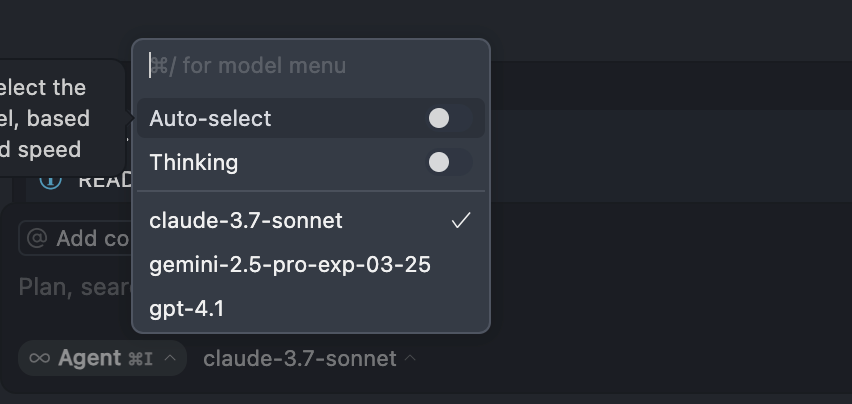

Avoid using "Auto" mode

The "Auto" model-selection mode in tools such as Cursor is not recommended, mainly because it takes direct control over model choice away from the developer. The feature is intended to balance cost, capability, and responsiveness, but in practice these three pull against each other: more capability typically means higher cost or slower responses.

Rather than letting the system route a request to a model that may not suit the task at hand, and thereby wasting resources such as points or request quota, it is better to switch manually to the model that best fits the specific need. It is therefore worth turning the automatic option off for good.

Enabling "Thinking" mode (chain of thought)

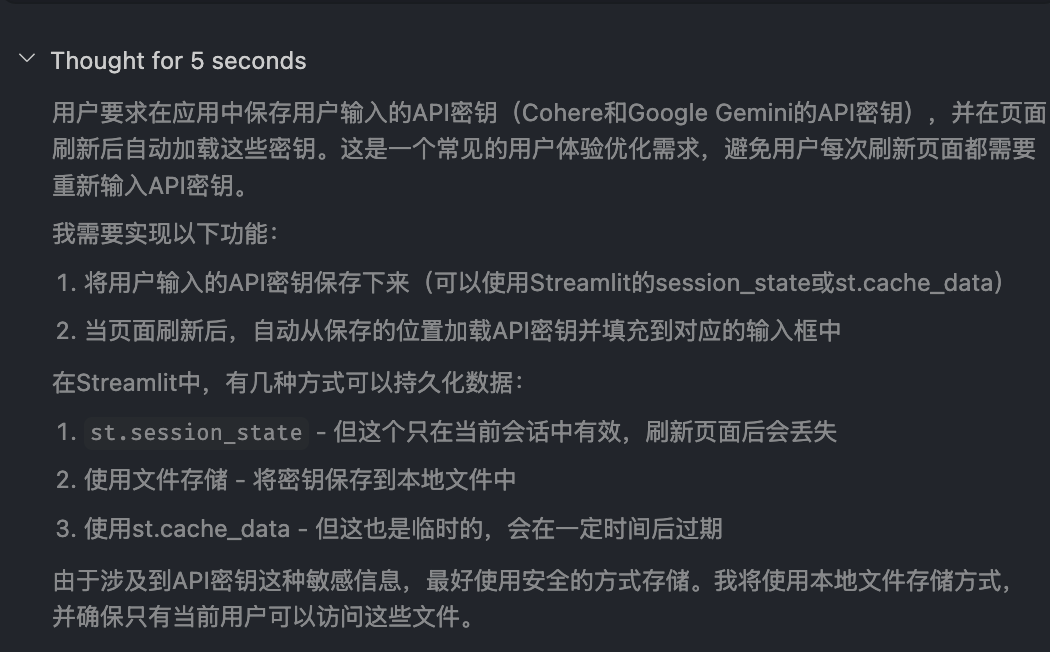

Chain-of-thought reasoning is now a standard feature of top AI models. Enabling the option that displays the model's "thought process" (often labeled "Thinking" or similar) is critical.

Turning this mode on not only helps the model handle complex problems; the detailed reasoning steps it displays also give developers insight into how the model works. The benefit is twofold: first, by observing the model's problem-solving strategy, developers can learn from it and build up experience with similar tasks; second, they can quickly judge whether the model's reasoning direction and proposed approach are correct, and intervene early to correct course.
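Cursor exposes "Thinking" as a UI toggle. For readers curious what this looks like one level down, below is a minimal sketch of an Anthropic Messages API request body with extended thinking enabled. It only builds the request dictionary and makes no network call; the model ID and token budgets are illustrative assumptions, and nothing here claims this is how Cursor implements its toggle.

```python
"""Sketch: an Anthropic Messages API request body with extended thinking enabled.

Assumptions: the model ID and budgets are illustrative; check Anthropic's
documentation for current values. No network call is made here.
"""
import json


def build_thinking_request(prompt: str,
                           model: str = "claude-3-7-sonnet-20250219",
                           max_tokens: int = 16000,
                           budget_tokens: int = 8000) -> dict:
    # The thinking budget must be at least 1024 tokens and below max_tokens.
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be >= 1024")
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    request = build_thinking_request("Why does this test fail intermittently?")
    print(json.dumps(request, indent=2))
```

With thinking enabled, the response contains `thinking` content blocks ahead of the final `text` block; those blocks are what a "Thinking" panel lets you read and use to catch a wrong approach early.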

Switching models according to task type

No model is perfect for all tasks, and switching models dynamically is the key to efficiency.

Large-scale project planning and codebase review

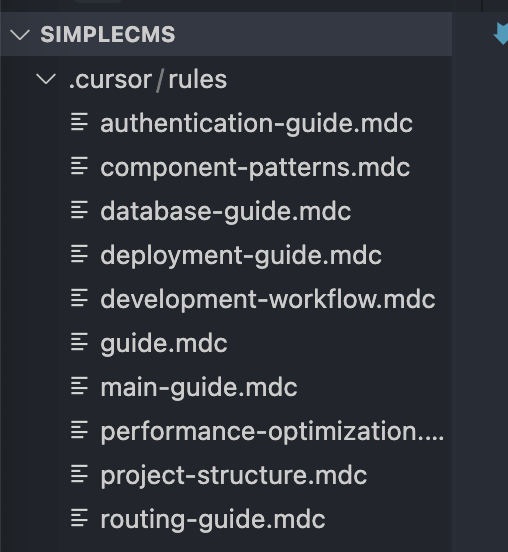

For macro-level tasks such as project planning, working through complex or legacy codebases, and generating rules, Gemini 2.5 Pro and Claude 3.7 Sonnet are the main choices. Of the two, Gemini 2.5 Pro has a significant advantage thanks to its large context window, which makes it especially suited to older projects with many iterations and large codebases.

The core strength here is the ability to process a sheer volume of information. Gemini 2.5 Pro currently supports a context window of up to 1 million tokens (a token is the unit in which models measure text input), with plans to expand to 2 million tokens. This means it can take in the equivalent of thousands of pages of documents, an entire codebase, or large multimodal inputs containing text, images, audio, and video, all at once. By comparison, OpenAI's GPT-4o mini offers a context window of 128,000 tokens, and Anthropic's Claude 3.7 Sonnet about 200,000 tokens.
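To get a feel for whether a codebase fits into one of these windows, a rough estimate is often enough. The sketch below assumes the common rule of thumb of about 4 characters per token (real tokenizers differ by model, so results are estimates only), uses the 1M-token figure for Gemini 2.5 Pro and the roughly 200K-token figure for Claude 3.7 Sonnet, and reserves an arbitrary 20,000 tokens for the prompt and the answer. The file suffixes scanned are likewise an assumption.

```python
"""Rough check of whether a codebase fits into a model's context window.

Assumptions: ~4 characters per token is a heuristic, not a tokenizer;
window sizes are approximate published figures.
"""
from pathlib import Path

CONTEXT_WINDOWS = {
    "gemini-2.5-pro": 1_000_000,
    "claude-3.7-sonnet": 200_000,
}
CHARS_PER_TOKEN = 4  # heuristic only


def estimate_tokens(text: str) -> int:
    # Ceiling division so non-empty text never rounds down to zero tokens.
    return -(-len(text) // CHARS_PER_TOKEN)


def codebase_tokens(root: str, suffixes=(".py", ".ts", ".js", ".md")) -> int:
    # Sum estimated tokens over source files under `root`.
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def models_that_fit(tokens: int, reserve: int = 20_000) -> list[str]:
    # Keep `reserve` tokens free for the instructions and the model's reply.
    return sorted(m for m, window in CONTEXT_WINDOWS.items() if tokens + reserve <= window)


if __name__ == "__main__":
    tokens = codebase_tokens(".")
    print(f"~{tokens:,} tokens; fits in: {models_that_fit(tokens)}")
```

A legacy project estimated at, say, 500,000 tokens would only fit in the Gemini 2.5 Pro window, which is the situation the paragraph above describes.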

In practice, Gemini 2.5 Pro excels at automatically generating documentation files, such as the .mdc files Cursor uses for project rules, and produces fewer obvious "hallucinations" (inaccurate or meaningless output).

Single file modification and modular development

For smaller-scoped tasks, such as modifying a single file or building individual modules, switching to the Claude 3.x Sonnet family is often the better choice. Claude models are known for their responsiveness, accuracy, and code generation capabilities.

Here the developer may be faced with a choice: use the latest Claude 3.7 Sonnet or the slightly earlier Claude 3.5 Sonnet?

Some developers find that Claude 3.5 Sonnet feels more stable and reliable than 3.7 Sonnet in certain scenarios. In particular, 3.7 Sonnet's "thinking" mode can, on very complex problems or in long conversations, fall into a cycle of repeated edits that never reaches the desired result. So although 3.5 Sonnet has been out for a while, it remains a robust and reliable choice for many everyday development scenarios.

Think of Gemini 2.5 Pro as a strategic planner capable of handling large-scale information, while the Claude 3.x Sonnet series is more of a commando that performs specific coding tasks and solves problems quickly.

Task-specific optimizations

- Simple debugging or small modifications: For simple tasks such as fixing type errors or making minor code adjustments, consider a lower-cost or faster model such as GPT-4.1. It may currently be in a free or low-cost trial phase, and even once it is billed, it is expected to consume far fewer points than the top-tier models. That makes it very cost-effective for such "small fixes" and avoids the waste of using a sledgehammer to crack a nut.

- Multimodal tasks: When a task involves working with images, such as generating web page code from a design mockup, the Claude 3.x Sonnet family typically performs best. Claude models have a proven track record of understanding visual elements and generating aesthetically pleasing interface code.
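The task-to-model preferences in this section can be summarized as a small lookup table. This is a hypothetical sketch: the task categories and model labels are illustrative, and neither Cursor nor any other tool exposes such a routing setting; the point is simply to make the manual switching rule explicit.

```python
"""Hypothetical task-to-model routing table reflecting the preferences above.

The categories and labels are illustrative, not settings of any real tool.
"""
from enum import Enum


class Task(Enum):
    PROJECT_PLANNING = "project_planning"
    LEGACY_CODEBASE = "legacy_codebase"
    RULE_GENERATION = "rule_generation"
    SINGLE_FILE_EDIT = "single_file_edit"
    MODULAR_DEVELOPMENT = "modular_development"
    SIMPLE_DEBUG = "simple_debug"
    DESIGN_TO_CODE = "design_to_code"


ROUTES = {
    # Large context window for macro-level work.
    Task.PROJECT_PLANNING: "Gemini 2.5 Pro",
    Task.LEGACY_CODEBASE: "Gemini 2.5 Pro",
    Task.RULE_GENERATION: "Gemini 2.5 Pro",
    # Focused coding tasks.
    Task.SINGLE_FILE_EDIT: "Claude 3.x Sonnet",
    Task.MODULAR_DEVELOPMENT: "Claude 3.x Sonnet",
    # Cheap, fast fixes.
    Task.SIMPLE_DEBUG: "GPT-4.1",
    # Image understanding, e.g. design mockup to web page.
    Task.DESIGN_TO_CODE: "Claude 3.x Sonnet",
}


def pick_model(task: Task) -> str:
    return ROUTES[task]


if __name__ == "__main__":
    for task in Task:
        print(f"{task.value:>20} -> {pick_model(task)}")
```

A plain dictionary keeps every choice visible and deliberate, which is the opposite of leaving selection to an opaque "Auto" mode.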

Tool-specific model selection (Roo, Cline, etc.)

In command-line AI tools or IDE plugins other than Cursor (e.g., Roo and Cline), model selection may be strongly influenced by cost and availability.

In these environments, Grok 3 can be a pragmatic choice, mainly because of its relatively generous usage allowance. xAI offers a sizable free monthly allowance for the Grok 3 API (reportedly close to $150), which is extremely attractive to developers who make a large number of calls. For more information, see the articles "Grok 3 Shocking Release: Reasoning Agent Performance Blows Up! API Debuts '$5 Charge for $150'" and "OpenAI Codex CLI: Terminal Command Line AI Coding Assistant Released by OpenAI", along with other community discussions. By contrast, using other top-tier models can quickly run up high costs. The Gemini family does offer a free tier, but it usually comes with rate limits and readily returns errors when too many consecutive or concurrent requests are sent.
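When a free tier starts rejecting requests, the usual client-side remedy is to retry with exponential backoff and jitter. The sketch below is generic: `RateLimitError` is a hypothetical stand-in for whatever rate-limit exception your client library raises (typically on HTTP 429), and the delays are illustrative rather than limits published by Google or xAI.

```python
"""Retry with exponential backoff and jitter for rate-limited API calls.

Assumptions: `RateLimitError` is a hypothetical placeholder exception; the
delay values are illustrative only.
"""
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for an HTTP 429 / quota-exceeded error."""


def with_backoff(call: Callable[[], T], max_retries: int = 5,
                 base_delay: float = 1.0, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    if max_retries < 0:
        raise ValueError("max_retries must be >= 0")
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the original error
            # Double the wait each attempt, cap it, and add jitter so that
            # parallel clients do not retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
    raise RuntimeError("unreachable")
```

In practice you would wrap the real request in a lambda, for example `with_backoff(lambda: send_request(prompt))`, where `send_request` is a hypothetical function of your own. The injectable `sleep` parameter exists so the retry logic can be tested without actually waiting.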

In addition, for MCP calls and other repetitive or high-volume tasks (MCP here most likely refers to the Model Context Protocol, which tools like Cline and Roo use to connect models to external tools), a Gemini Flash model is worth considering. As its name suggests, Flash is optimized for speed while retaining sufficient contextual understanding, and it strikes a good balance between speed and accuracy for batch tasks that need fast, accurate responses.
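When many small requests go to a fast model in a batch, capping the number in flight helps stay under rate limits while still running them in parallel. Below is a minimal sketch using `asyncio.Semaphore`; `ask_model` is a hypothetical async function standing in for a real client call, and the default limit of 4 is an arbitrary example.

```python
"""Run many small requests against a fast model with a cap on concurrency.

Assumptions: `ask_model` is a hypothetical async client call; the default
concurrency limit is illustrative.
"""
import asyncio
from typing import Awaitable, Callable


async def run_batch(prompts: list[str],
                    ask_model: Callable[[str], Awaitable[str]],
                    max_concurrent: int = 4) -> list[str]:
    semaphore = asyncio.Semaphore(max_concurrent)

    async def one(prompt: str) -> str:
        # At most `max_concurrent` coroutines are inside this block at once.
        async with semaphore:
            return await ask_model(prompt)

    # gather returns results in the same order as the input prompts.
    return list(await asyncio.gather(*(one(p) for p in prompts)))


async def fake_model(prompt: str) -> str:
    await asyncio.sleep(0.01)  # simulate network latency
    return prompt.upper()


if __name__ == "__main__":
    print(asyncio.run(run_batch(["fix typo", "rename var"], fake_model)))
```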

Ultimately, an effective strategy for using AI models is not the fixed selection of a single model, but rather the dynamic and flexible manual switching between different scenarios and tools based on task requirements, cost budgets, and model characteristics. For example, it would be wise to prioritize high-performance models such as Claude 3.7 Sonnet or Gemini 2.5 Pro for complex tasks within available quota, and switch to more cost-effective options such as GPT-4.1 or Grok 3 when quota is close to being depleted or for simple tasks.
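The switching rule in the last example can be written down as a small function. This is a sketch under stated assumptions: quota is modeled as used/limit counts, the 20% "close to depleted" threshold is arbitrary, and the pairing of premium and budget models per task kind is illustrative rather than taken from any tool.

```python
"""Sketch of quota-aware model switching.

Assumptions: quota is tracked as used/limit counts; the 20% threshold and
the model pairings are illustrative only.
"""
from dataclasses import dataclass


@dataclass
class Quota:
    used: int
    limit: int

    def __post_init__(self) -> None:
        if self.limit <= 0:
            raise ValueError("limit must be positive")

    @property
    def remaining_fraction(self) -> float:
        return max(0.0, 1 - self.used / self.limit)


PREMIUM = {"planning": "Gemini 2.5 Pro", "coding": "Claude 3.7 Sonnet"}
BUDGET = {"planning": "Grok 3", "coding": "GPT-4.1"}


def choose_model(task_kind: str, complex_task: bool, quota: Quota,
                 low_quota_threshold: float = 0.2) -> str:
    # Use a premium model only for complex work while enough quota remains;
    # otherwise fall back to a more cost-effective option.
    if complex_task and quota.remaining_fraction > low_quota_threshold:
        return PREMIUM[task_kind]
    return BUDGET[task_kind]
```

For example, `choose_model("coding", True, Quota(used=100, limit=500))` selects Claude 3.7 Sonnet, while the same call with `used=450` falls back to GPT-4.1 because only 10% of the quota is left.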