General Introduction

Describe Anything is an open-source project developed by NVIDIA together with several universities, built around the Describe Anything Model (DAM). It generates detailed descriptions of regions that the user marks in an image or video with points, boxes, scribbles, or masks. Beyond describing the details of a still image, it can also capture how a region changes over time in a video.

Function List

- Supports multiple region labeling methods: Users can specify the region to describe in an image or video with points, boxes, scribbles, or masks.

- Image and Video Description: Generate detailed descriptions for still images or analyze dynamic changes in specific areas of a video.

- Open source models and datasets: DAM-3B and DAM-3B-Video models are provided to support joint image and video processing.

- Interactive interface: A web interface is provided through Gradio, allowing users to draw masks and get descriptions in real time.

- API support: Provides an OpenAI-compatible server interface for easy integration into other applications.

- DLC-Bench Evaluation: Includes a dedicated benchmark for evaluating detailed localized captioning (region description) models.

- SAM Integration: Optionally integrates the Segment Anything Model (SAM) to generate masks automatically, so regions do not have to be drawn by hand.

User Guide

Installation process

Describe Anything is installed into a Python environment. Using a virtual environment is recommended to avoid dependency conflicts. The detailed installation steps are:

- Create a Python environment:

Using Python 3.8 or later, create a new virtual environment:

    python -m venv dam_env
    source dam_env/bin/activate    # Linux/Mac
    dam_env\Scripts\activate       # Windows

- Install Describe Anything:

There are two installation options:

- Install directly via pip:

    pip install git+https://github.com/NVlabs/describe-anything

- Clone the repository and install it locally:

    git clone https://github.com/NVlabs/describe-anything
    cd describe-anything
    pip install -v .


- Install Segment Anything (optional):

If you want masks to be generated automatically, install the SAM dependencies from the root of the cloned repository:

    cd demo
    pip install -r requirements.txt

- Verify the installation:

After the installation is complete, run the following command to check that it succeeded:

    python -c "from dam import DescribeAnythingModel; print('Installation successful')"

Usage

Describe Anything can be used in a variety of ways, including command line scripting, interactive interfaces, and API calls. Below is a detailed walkthrough of the main features:

1. Interactive Gradio interface

The Gradio interface is suitable for beginners and allows users to upload images and manually draw masks to get descriptions.

- Launch the interface:

Run the following command to start the Gradio server:

    python demo_simple.py

Once the server is running, a local web page becomes available (usually http://localhost:7860); open it in a browser.

- Steps:

- Upload Image: Click the Upload button to select a local image file.

- Draw Mask: Use the brush tool to paint over the area of interest on the image.

- Get Description: Click Submit and the system will generate a detailed description of the area, e.g. "A dog with red hair, wearing a silver tag collar, running".

- Optional SAM Integration: When SAM is enabled, clicking points on the image generates the mask automatically.

- Notes:

- Make sure the image is in RGBA format, with the mask carried in the alpha channel.

- The length and level of detail of the description can be controlled with parameters such as max_new_tokens.
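The RGBA requirement means the region mask travels in the image's alpha channel. The sketch below shows one way to pack an RGB array and a binary mask into a single RGBA array with NumPy. The helper name make_rgba_with_mask is hypothetical, and the convention that alpha is 255 inside the region and 0 outside is an assumption, not something the project documents here.

```python
import numpy as np


def make_rgba_with_mask(rgb: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Pack a binary region mask into the alpha channel of an RGB image.

    rgb:  H x W x 3 uint8 array.
    mask: H x W array; nonzero (or True) marks the region to describe.
    Returns an H x W x 4 uint8 array. Assumed convention (hypothetical):
    alpha = 255 inside the region, 0 outside.
    """
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("rgb must have shape (H, W, 3)")
    if mask.shape != rgb.shape[:2]:
        raise ValueError("mask must have shape (H, W) matching the image")
    alpha = np.where(mask.astype(bool), 255, 0).astype(np.uint8)
    # dstack turns the (H, W) alpha into a 4th channel after R, G, B.
    return np.dstack([rgb.astype(np.uint8), alpha])


# Example: a 4 x 4 black image whose top-left 2 x 2 block is the region.
rgb = np.zeros((4, 4, 3), dtype=np.uint8)
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True
rgba = make_rgba_with_mask(rgb, mask)
print(rgba.shape)  # (4, 4, 4)
```

If Pillow is installed, the result can be saved as a PNG with Image.fromarray(rgba).save("region.png"); the file name is a placeholder.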

2. Command-line scripts

Command line scripts are suitable for batch processing or developer use, providing greater flexibility.

- Image description:

Run the following command to generate a description for the image:

    python examples/dam_with_sam.py --image_path <image_file> --input_points "[[x1,y1],[x2,y2]]"

Example:

    python examples/dam_with_sam.py --image_path dog.jpg --input_points "[[500,300]]"

The system generates a mask from the specified points and outputs a description of that region.

- Video description:

To process video, use the joint image-video model. The script below sends the request to a running DAM server at --server_url:

    python examples/query_dam_server_video.py --model describe_anything_model --server_url http://localhost:8000 --video_path <video_file>

Mark the region on a single frame, and the system tracks it through the video and describes how it changes.

- Parameters:

- --temperature: Controls how creative the description is; the recommended value is 0.2.

- --top_p: Controls the diversity of the generated text; the recommended value is 0.9.

- --max_new_tokens: Sets the maximum length of the description; the default is 512.
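The --temperature and --top_p parameters are standard sampling controls for text generation, while --max_new_tokens simply caps how many tokens are generated. The pure-Python sketch below illustrates the usual temperature-plus-nucleus (top-p) sampling step on one set of next-token scores. It is a generic illustration, not DAM's own decoding code; the function names and the toy logits are made up.

```python
import math
import random
from typing import Dict, List, Optional, Tuple


def top_p_filter(logits: Dict[str, float], temperature: float,
                 top_p: float) -> List[Tuple[str, float]]:
    """Apply temperature scaling, softmax, and nucleus (top-p) filtering.

    Returns the surviving (token, probability) pairs, renormalized to sum to 1.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature scaling: dividing by a value below 1 sharpens the
    # distribution (more deterministic); above 1 flattens it (more varied).
    scaled = {tok: v / temperature for tok, v in logits.items()}
    # Numerically stable softmax.
    peak = max(scaled.values())
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda kv: kv[1], reverse=True)
    # Nucleus filtering: keep the smallest set of most likely tokens whose
    # cumulative probability reaches top_p.
    kept: List[Tuple[str, float]] = []
    cumulative = 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    norm = sum(p for _, p in kept)
    return [(tok, p / norm) for tok, p in kept]


def sample_token(logits: Dict[str, float], temperature: float = 0.2,
                 top_p: float = 0.9, rng: Optional[random.Random] = None) -> str:
    """Draw one token from the filtered, renormalized distribution."""
    rng = rng if rng is not None else random.Random()
    candidates = top_p_filter(logits, temperature, top_p)
    tokens = [tok for tok, _ in candidates]
    weights = [p for _, p in candidates]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Toy next-token scores (invented for illustration).
logits = {"dog": 2.0, "puppy": 1.5, "cat": 0.1}
print([tok for tok, _ in top_p_filter(logits, temperature=0.2, top_p=0.9)])  # ['dog']
print([tok for tok, _ in top_p_filter(logits, temperature=1.0, top_p=0.9)])  # ['dog', 'puppy']
```

With the recommended low temperature, one candidate dominates and the output is close to deterministic; raising the temperature lets more candidates survive the top-p cut.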

3. API calls

Describe Anything provides an OpenAI-compatible API suitable for integration into other applications.

- Start the server:

Run the following command to start the DAM server:

    python dam_server.py --model-path nvidia/DAM-3B --conv-mode v1 --prompt-mode focal_prompt

By default, the server runs at http://localhost:8000.

- Send a request:

Send requests using Python and the OpenAI SDK:

    from openai import OpenAI

    # Point the OpenAI client at the local DAM server; no real API key is needed.
    client = OpenAI(base_url="http://localhost:8000", api_key="not-needed")

    response = client.chat.completions.create(
        model="describe_anything_model",
        messages=[
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": "data:image/png;base64,<base64_image>"}},
                    {"type": "text", "text": "Describe the region in the mask"},
                ],
            }
        ],
    )
    print(response.choices[0].message.content)

Replace <base64_image> with the Base64 encoding of the image (RGBA, with the mask in the alpha channel).
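To fill in the <base64_image> placeholder, the PNG file's bytes are Base64-encoded and wrapped in a data URL. The standard-library sketch below does that and rebuilds the same message layout used in the request. The helper names png_bytes_to_data_url, png_file_to_data_url, and build_messages are hypothetical.

```python
import base64
from pathlib import Path
from typing import Any, Dict, List


def png_bytes_to_data_url(png_bytes: bytes) -> str:
    """Base64-encode PNG bytes and wrap them in a data URL."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return f"data:image/png;base64,{encoded}"


def png_file_to_data_url(path: str) -> str:
    """Read a PNG file (e.g. an RGBA image with the mask in alpha) from disk."""
    return png_bytes_to_data_url(Path(path).read_bytes())


def build_messages(data_url: str, prompt: str) -> List[Dict[str, Any]]:
    """Reproduce the chat message layout used in the request above."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": data_url}},
                {"type": "text", "text": prompt},
            ],
        }
    ]


# Tiny stand-in payload: only the 8-byte PNG signature, not a full image.
url = png_bytes_to_data_url(b"\x89PNG\r\n\x1a\n")
print(url)  # data:image/png;base64,iVBORw0KGgo=
```

In practice, messages=build_messages(png_file_to_data_url("region.png"), "Describe the region in the mask") can be passed to client.chat.completions.create; "region.png" is a placeholder file name.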

4. DLC-Bench evaluation

DLC-Bench is a benchmark for evaluating region description models.

- Download the dataset:

    git lfs install
    git clone https://huggingface.co/datasets/nvidia/DLC-Bench

- Run the evaluation:

Use the following command to generate the model outputs for evaluation:

    python get_model_outputs.py --model_type dam --model_path nvidia/DAM-3B

The results are cached in the model_outputs_cache/ folder.

Key Features

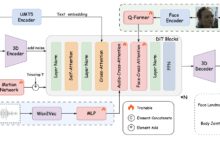

- Focal Prompting: DAM uses focal prompting, which combines the global context of the full image with local detail from the marked region, to generate more accurate descriptions. Users do not need to tune prompts by hand; the system handles this automatically.

- Gated Cross-Attention: A gated cross-attention mechanism lets the model focus on the specified region in a complex scene without being distracted by irrelevant content.

- Video Motion Description: Mark an area on one frame, and DAM automatically tracks it and describes how it changes over the video, for example, "The cow's leg muscles move vigorously with her stride".
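The focal prompting and gated cross-attention ideas can be illustrated with a small NumPy sketch. It rests on simplifying assumptions, not on DAM's actual code: the local view is taken to be a crop around the mask's bounding box enlarged by a hypothetical context factor of 3, and the gate follows the common Flamingo-style form, where attended global features are added to the local features scaled by tanh(gate). All function names here are hypothetical.

```python
import numpy as np


def focal_crop_box(mask: np.ndarray, context: float = 3.0) -> tuple:
    """Return (top, left, bottom, right) of a crop around the masked region.

    The tight bounding box of the mask is scaled by `context` (an assumed
    value) about its center and clipped to the image; bottom/right are
    exclusive.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty")
    height, width = mask.shape
    top, bottom = int(ys.min()), int(ys.max()) + 1
    left, right = int(xs.min()), int(xs.max()) + 1
    cy, cx = (top + bottom) / 2, (left + right) / 2
    half_h = (bottom - top) * context / 2
    half_w = (right - left) * context / 2
    return (
        max(0, int(np.floor(cy - half_h))),
        max(0, int(np.floor(cx - half_w))),
        min(height, int(np.ceil(cy + half_h))),
        min(width, int(np.ceil(cx + half_w))),
    )


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def gated_cross_attention(x, context, w_q, w_k, w_v, gate: float) -> np.ndarray:
    """Single-head cross-attention from x (n, d) to context (m, d), tanh-gated.

    The attended context is added back to x scaled by tanh(gate); with
    gate = 0 the layer is an identity and leaves x unchanged.
    """
    q, k, v = x @ w_q, context @ w_k, context @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (n, m), rows sum to 1
    return x + np.tanh(gate) * (weights @ v)


# Focal crop for a 2 x 2 region in a 10 x 10 mask.
mask = np.zeros((10, 10), dtype=bool)
mask[4:6, 4:6] = True
print(focal_crop_box(mask))  # (2, 2, 8, 8)

# Toy features: 4 local tokens attend to 6 global tokens, width 8.
rng = np.random.default_rng(0)
focal = rng.standard_normal((4, 8))
global_ctx = rng.standard_normal((6, 8))
w_q, w_k, w_v = (rng.standard_normal((8, 8)) for _ in range(3))
closed = gated_cross_attention(focal, global_ctx, w_q, w_k, w_v, gate=0.0)
opened = gated_cross_attention(focal, global_ctx, w_q, w_k, w_v, gate=1.0)
print(np.allclose(closed, focal), np.allclose(opened, focal))  # True False
```

Starting the gate at zero is the usual reason for this design: the global context is blended in gradually as the gate is learned, instead of disrupting the local features from the start.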

Application Scenarios

- Medical Image Analysis

Doctors can use Describe Anything to mark specific areas on medical images, such as CT or MRI scans, and generate detailed descriptions to aid diagnosis. For example, for an abnormal area marked in the lungs, the system could describe "an irregularly shaded area with blurred edges, possibly inflammation".

- Urban Planning

Planners can upload an aerial video, mark a building or road area, and get a description such as "a wide four-lane highway surrounded by dense commercial buildings". This helps them analyze the layout of the city.

- Content Creation

Video creators can use Describe Anything to generate descriptions of specific objects in a video clip, such as "a flying eagle with wings spread and snowy mountains in the background". These descriptions can be used for captions or scripts.

- Data Annotation

Data scientists can use DAM to automatically generate descriptions of objects in images or videos, reducing the amount of manual labeling. For example, labeling vehicles in a dataset might produce the phrase "red car, headlights on."
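For the data annotation scenario, the image-description command (examples/dam_with_sam.py with --image_path and --input_points) can be driven from Python over many images. The sketch below only uses those two documented flags; the helper names build_dam_command and annotate, the job list, and the assumption that the script prints its description to stdout are all hypothetical.

```python
import json
import subprocess
import sys
from typing import Dict, List, Sequence, Tuple

Point = Sequence[int]


def build_dam_command(image_path: str, points: Sequence[Point]) -> List[str]:
    """Build the argv for examples/dam_with_sam.py using the documented flags."""
    # Compact JSON matches the quoted form in the docs, e.g. "[[500,300]]".
    points_arg = json.dumps([list(p) for p in points], separators=(",", ":"))
    return [
        sys.executable,
        "examples/dam_with_sam.py",
        "--image_path", image_path,
        "--input_points", points_arg,
    ]


def annotate(jobs: Sequence[Tuple[str, Sequence[Point]]],
             repo_dir: str = ".") -> Dict[str, str]:
    """Run the script once per (image, points) job and collect its stdout.

    Image paths are resolved relative to repo_dir. Assumes (hypothetically)
    that the description is printed to stdout; any log lines the script
    prints there are captured as well.
    """
    results: Dict[str, str] = {}
    for image_path, points in jobs:
        proc = subprocess.run(
            build_dam_command(image_path, points),
            cwd=repo_dir,
            capture_output=True,
            text=True,
            check=True,  # raise CalledProcessError if the script fails
        )
        results[image_path] = proc.stdout.strip()
    return results


print(build_dam_command("dog.jpg", [[500, 300]])[1:])
# ['examples/dam_with_sam.py', '--image_path', 'dog.jpg', '--input_points', '[[500,300]]']

# Hypothetical batch run (file names and points are placeholders):
# descriptions = annotate([("car_001.jpg", [[120, 80]]), ("car_002.jpg", [[300, 210]])])
```

Launching one process per image likely reloads the model each time, so for large batches, sending requests to the DAM server described in the API section may be the more efficient choice.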

QA

- What input formats does Describe Anything support?

Common image formats such as PNG and JPEG, and video formats such as MP4, are supported. The image must be in RGBA mode, with the mask specified by the alpha channel.

- How can I improve the accuracy of the descriptions?

Use a more precise mask (e.g., one generated automatically via SAM), and adjust the temperature and top_p parameters to control the creativity and diversity of the descriptions.

- Does it require a GPU to run?

An NVIDIA GPU (e.g., RTX 3090) is recommended for accelerated inference, but it can also run on a CPU, at slower speeds.

- How are multi-frame descriptions handled in videos?

Simply label the region on one frame, and DAM automatically tracks it and describes how it changes in subsequent frames.