General Introduction

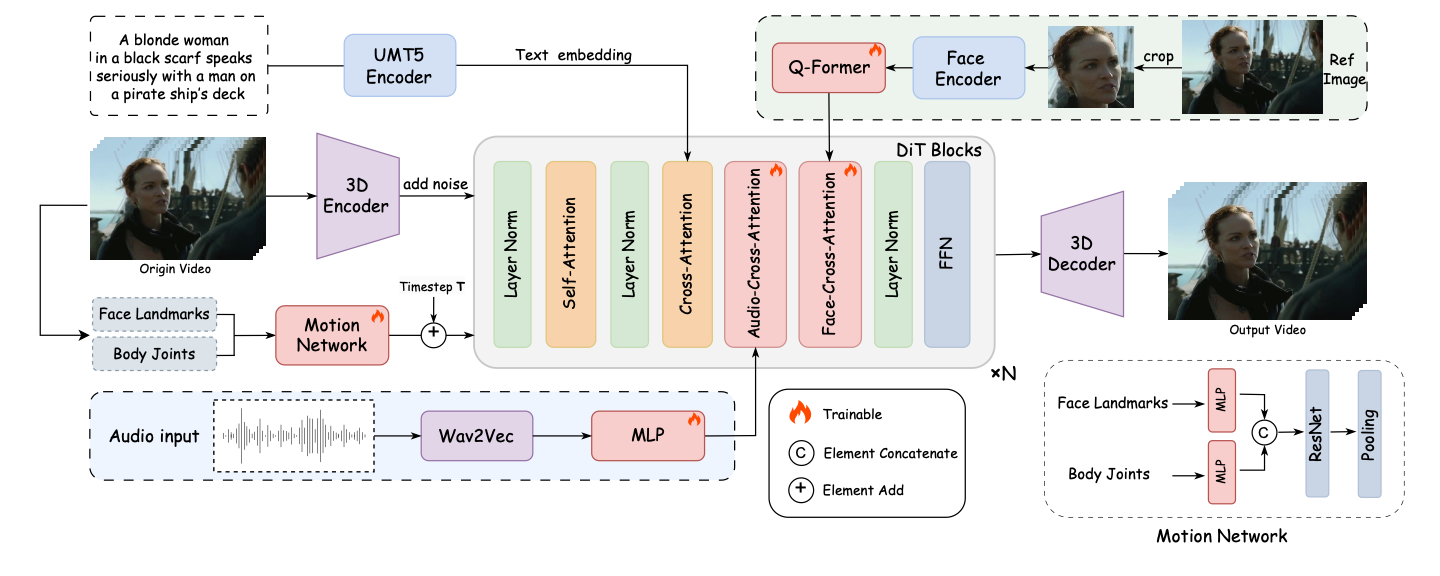

FantasyTalking is an open-source project from the Fantasy-AMAP team for generating realistic talking portrait videos from audio. It builds on the video diffusion model Wan2.1, combined with the Wav2Vec audio encoder and the project's own FantasyTalking model weights, to produce closely synchronized lip movements and facial expressions. It supports multiple portrait styles, including real people and cartoon images, and multiple framings: full-body, half-body, and close-up. Users can generate talking videos from the command line by supplying an image and an audio file.

Feature List

- Generates realistic talking portrait videos with lip movements closely synchronized with the audio.

- Supports multiple viewpoint generation, including close-up, half-body and full-body portraits.

- Compatible with real and cartoon style portraits to meet diverse needs.

- Provides prompt control to adjust the character's expressions and body movements.

- Supports high resolution output up to 720P.

- Integrated face-focused cross-attention module ensures facial feature consistency.

- Includes a motion intensity modulation module to control the intensity of expressions and the range of motion.

- Open-source models and code, so the community can build on and optimize the project.

Usage Help

Installation process

To use FantasyTalking, you need to install the necessary dependencies and models first. Below are the detailed installation steps:

- Clone the project code

Run the following commands in the terminal to clone the project locally:

    git clone https://github.com/Fantasy-AMAP/fantasy-talking.git
    cd fantasy-talking

- Install dependencies

The project requires a Python environment with PyTorch (version >= 2.0.0). Run the following command to install the required libraries:

    pip install -r requirements.txt

Optionally, install flash_attn to accelerate attention computation:

    pip install flash_attn

- Download the models

FantasyTalking requires three models: Wan2.1-I2V-14B-720P (base model), Wav2Vec (audio encoder), and the FantasyTalking model weights. They can be downloaded via Hugging Face or ModelScope.

Use the Hugging Face CLI:

    pip install "huggingface_hub[cli]"
    huggingface-cli download Wan-AI/Wan2.1-I2V-14B-720P --local-dir ./models/Wan2.1-I2V-14B-720P
    huggingface-cli download facebook/wav2vec2-base-960h --local-dir ./models/wav2vec2-base-960h
    huggingface-cli download acvlab/FantasyTalking fantasytalking_model.ckpt --local-dir ./models

Or use the ModelScope CLI:

    pip install modelscope
    modelscope download Wan-AI/Wan2.1-I2V-14B-720P --local_dir ./models/Wan2.1-I2V-14B-720P
    modelscope download AI-ModelScope/wav2vec2-base-960h --local_dir ./models/wav2vec2-base-960h
    modelscope download amap_cvlab/FantasyTalking fantasytalking_model.ckpt --local_dir ./models

- Verify the environment

Make sure a GPU is available (an RTX 3090 or better is recommended, with at least 24GB of VRAM). If you run into memory issues, try lowering the resolution or enabling VRAM optimization.
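Before the first run, it can help to confirm that the downloads landed where the commands above place them and that PyTorch can see the GPU. The following is a minimal sketch and not part of the project: the helper names `missing_models` and `gpu_summary` are hypothetical, the expected paths are taken from the download commands above, and the GPU query only runs if PyTorch is importable.

```python
import importlib.util
from pathlib import Path

# Paths produced by the download commands above, relative to ./models.
EXPECTED_MODEL_PATHS = (
    "Wan2.1-I2V-14B-720P",
    "wav2vec2-base-960h",
    "fantasytalking_model.ckpt",
)


def missing_models(models_dir="./models"):
    """Return the expected model paths that do not exist under models_dir."""
    root = Path(models_dir)
    return [name for name in EXPECTED_MODEL_PATHS if not (root / name).exists()]


def gpu_summary():
    """Describe the first CUDA device, or explain why it cannot be queried."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch  # imported lazily so the model check works without it

    if not torch.cuda.is_available():
        return "PyTorch cannot see a CUDA GPU"
    props = torch.cuda.get_device_properties(0)
    return f"{props.name}: {props.total_memory / 1024**3:.1f} GiB VRAM"


if __name__ == "__main__":
    print("Missing models:", missing_models() or "none")
    print("GPU:", gpu_summary())
```

Run it from the repository root so that `./models` resolves to the download directory. Note that a card sold as 24GB typically reports slightly less than 24 GiB, so the printed figure is for information only rather than a pass/fail check.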

Usage

Once the installation is complete, run the inference script from the command line to generate videos. The basic command is as follows:

python infer.py --image_path ./assets/images/woman.png --audio_path ./assets/audios/woman.wav

- --image_path: path to the input portrait image (PNG and JPG are supported).
- --audio_path: path to the input audio file (WAV is supported).
- --prompt: optional prompt for controlling the character's behavior, for example: --prompt "The person is speaking enthusiastically, with their hands continuously waving."
- --audio_cfg_scale and --prompt_cfg_scale: control how strongly the audio and the prompt influence the result, with a recommended range of 3-7. Increasing the audio CFG scale strengthens lip synchronization.
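When the command is launched from another script, building the argument list programmatically avoids shell-quoting problems with prompts that contain spaces. The sketch below is illustrative only: `build_infer_command` is a hypothetical helper, it uses only the flags described above, and the range warning simply mirrors the recommended 3-7 range rather than any check performed by infer.py itself.

```python
import shlex
import sys


def build_infer_command(image_path, audio_path, prompt=None,
                        audio_cfg_scale=None, prompt_cfg_scale=None):
    """Assemble the infer.py argument list from the documented flags."""
    cmd = [sys.executable, "infer.py",
           "--image_path", str(image_path),
           "--audio_path", str(audio_path)]
    if prompt:
        cmd += ["--prompt", prompt]
    for flag, value in (("--audio_cfg_scale", audio_cfg_scale),
                        ("--prompt_cfg_scale", prompt_cfg_scale)):
        if value is None:
            continue  # leave the script's default in place
        if not 3 <= value <= 7:
            print(f"warning: {flag}={value} is outside the recommended 3-7 range")
        cmd += [flag, str(value)]
    return cmd


if __name__ == "__main__":
    cmd = build_infer_command(
        "./assets/images/woman.png",
        "./assets/audios/woman.wav",
        prompt="The person is speaking calmly with slight head movements.",
        audio_cfg_scale=5,
    )
    # Print the command in a copy-pasteable, shell-quoted form.
    print(shlex.join(cmd))
```

To actually start inference, the returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root.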

Key Feature Operations

- Lip-synchronized generation

The core function of FantasyTalking is generating precise lip movements from audio. Prepare a clear audio file (e.g. WAV format; a 16kHz sampling rate is optimal). When the inference script runs, the model analyzes the audio and generates matching lip movements. For best results, make sure the audio is free of significant noise.

- Prompt control

With the --prompt parameter, the user can define the character's expressions and actions. For example, --prompt "The person is speaking calmly with slight head movements." produces a video of calm speech. Prompts should be concise and specific; avoid vague descriptions.

- Multi-style support

The project supports portrait generation in both real and cartoon styles. Users can provide input images of different styles, and the model adjusts the output style according to the image features. The cartoon style suits animation scenes, and the real style suits applications such as virtual anchors.

- Motion intensity modulation

FantasyTalking's Motion Intensity Modulation module lets the user control the amplitude of expressions and movements. For example, setting a higher --audio_weight value enhances limb movements, which suits dynamic scenes. The default settings are already tuned, so keeping the default values is recommended for a first run.
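Since the lip-synchronization item above recommends 16kHz WAV audio, a quick check of the input file before inference can save a wasted run. This is a minimal sketch using Python's standard `wave` module, which reads uncompressed PCM WAV files only; the function names `wav_info` and `check_audio` are hypothetical and not part of FantasyTalking.

```python
import wave


def wav_info(path):
    """Return (sample_rate_hz, channels, duration_seconds) for a PCM WAV file."""
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        return rate, wav.getnchannels(), wav.getnframes() / rate


def check_audio(path, preferred_rate=16000):
    """Summarize the file and flag a sample rate other than the preferred one."""
    rate, channels, seconds = wav_info(path)
    summary = f"{rate} Hz, {channels} channel(s), {seconds:.1f} s"
    if rate != preferred_rate:
        return f"{summary}: consider resampling to {preferred_rate} Hz"
    return f"{summary}: matches the preferred rate"


if __name__ == "__main__":
    print(check_audio("./assets/audios/woman.wav"))
```

The check only reports the sample rate; it does not resample the file or assess noise, so a separate audio tool is still needed for those steps.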

Caveats

- Hardware requirements: Even an RTX 5090 with 32GB of VRAM may still run out of memory; in that case, lowering --image_size or --max_num_frames is recommended.

- Model download: The model files are large (tens of gigabytes), so make sure you have a stable network connection and enough disk space.

- Prompt optimization: The prompt has a large impact on the output, so trying several variations is recommended to find the best description.
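Because the model files are large, checking free disk space before starting the downloads can avoid a failed transfer partway through. The sketch below uses `shutil.disk_usage` from the standard library; the helper names and the 100 GB threshold are placeholder assumptions, since the text above only states that the files total tens of gigabytes.

```python
import shutil


def free_gb(path="."):
    """Free space on the filesystem containing path, in decimal gigabytes."""
    return shutil.disk_usage(path).free / 1e9


def has_room(path=".", required_gb=100.0):
    """True if at least required_gb is free (threshold is an assumed placeholder)."""
    return free_gb(path) >= required_gb


if __name__ == "__main__":
    print(f"Free space: {free_gb('.'):.1f} GB")
    if not has_room("."):
        print("Less than 100 GB free; the model downloads may not fit.")
```

Point `path` at the directory where the models will be stored, and adjust `required_gb` once the actual download sizes are known.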

Application Scenarios

- Virtual anchor content creation

Users can use FantasyTalking to generate realistic talking videos for virtual anchors. Supply the anchor's portrait image and voiceover audio to generate lip-synchronized videos for live streaming, short videos, or educational content.

- Animated character dubbing

Animators can generate voiceover videos for cartoon characters. Given a cartoon image and audio, the model generates matching lip movements and expressions, simplifying the animation production process.

- Educational video production

Teachers or training organizations can generate virtual instructor videos. Supply an instructor portrait and course audio to quickly produce instructional videos and make the content more engaging.

- Entertainment and fan creations

Users can generate humorous talking portraits for fan works or entertainment videos. By adjusting the prompt, they can create videos with exaggerated expressions or actions suited to social media sharing.

QA

- What input formats does FantasyTalking support?

Images are supported in PNG and JPG formats, and audio in WAV format. A 16kHz sampling rate is recommended for the best lip synchronization.

- How can insufficient video memory be resolved?

If the GPU runs out of memory (which can happen even with 32GB of VRAM on an RTX 5090), lower --image_size (e.g. 512x512) or reduce --max_num_frames (e.g. 30). You can also enable the VRAM optimization option or use a GPU with more memory.

- How can the quality of the generated video be improved?

Use a high-resolution input image (at least 512x512) and make sure the audio is clear and noise-free. Adjusting --audio_cfg_scale (e.g. 5-7) strengthens lip synchronization, and refining the prompt improves the naturalness of expressions.

- Does it support real-time generation?

The current version supports offline inference only; real-time generation would require further model optimization and hardware support.