General Introduction

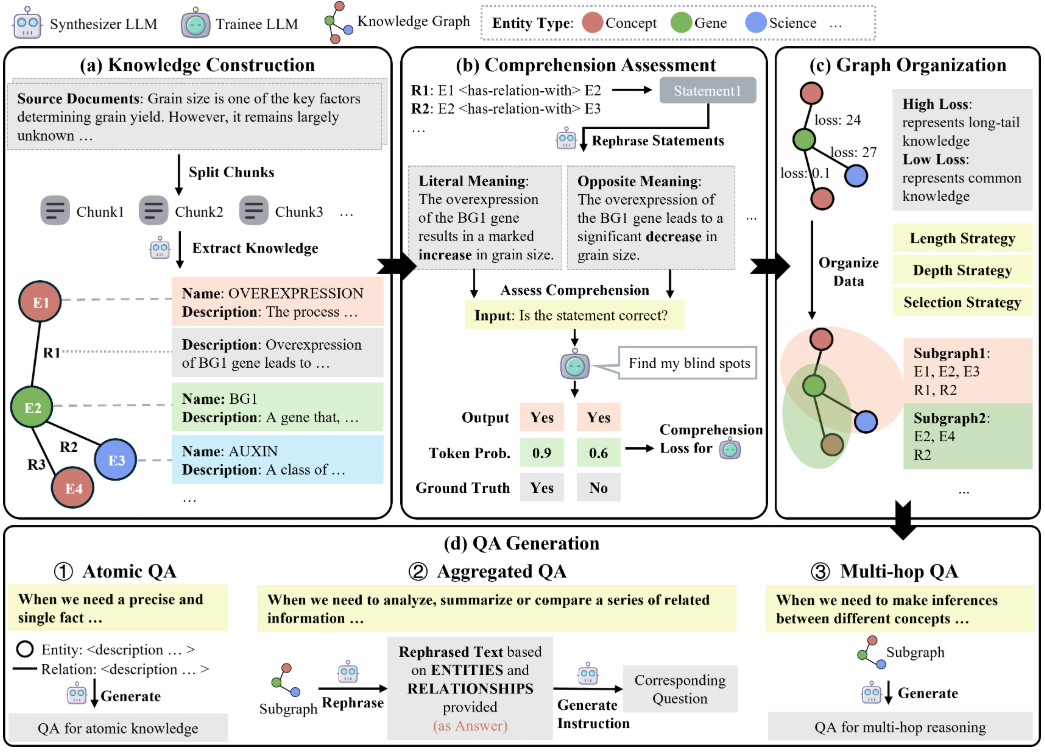

GraphGen is an open-source framework developed by OpenScienceLab, an AI lab in Shanghai, and hosted on GitHub. It improves supervised fine-tuning (SFT) of large language models (LLMs) by using knowledge graphs to guide synthetic data generation. GraphGen builds fine-grained knowledge graphs from source text, identifies the model's knowledge blind spots using the expected calibration error (ECE) metric, and prioritizes generating Q&A pairs that target high-value, long-tail knowledge. It supports multi-hop neighborhood sampling to capture complex relational information and produces diverse data through style control. The project is released under the Apache 2.0 license, so the code can be used for both academic research and commercial development. Users can configure the generation process through the command line or a Gradio interface, and the generated data can be used directly for model training.
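To make the ECE step more concrete, here is a minimal Python sketch of the standard binned expected calibration error. Predictions are grouped into equal-width confidence bins, and ECE is the sum over bins of (|B_m| / n) * |acc(B_m) - conf(B_m)|. This is a generic implementation of the metric, not GraphGen's code. How GraphGen obtains confidences from the trainee model is not covered here, and both the per-topic grouping in the hypothetical flag_blind_spots helper and the reading of ece_threshold as "flag when ECE exceeds it" are assumptions made for illustration.

```python
from typing import Dict, List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Standard binned ECE: weighted mean |accuracy - confidence| over bins."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0
    # Assign each prediction to one of n_bins equal-width bins over [0, 1].
    bins: List[List[int]] = [[] for _ in range(n_bins)]
    for i, conf in enumerate(confidences):
        if not 0.0 <= conf <= 1.0:
            raise ValueError(f"confidence out of range: {conf}")
        # min() keeps conf == 1.0 in the last bin instead of overflowing.
        bins[min(int(conf * n_bins), n_bins - 1)].append(i)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(confidences[i] for i in members) / len(members)
        accuracy = sum(1 for i in members if correct[i]) / len(members)
        # Weight each bin's calibration gap by its share of all predictions.
        ece += len(members) / n * abs(accuracy - avg_conf)
    return ece


def flag_blind_spots(
    results: Dict[str, Tuple[Sequence[float], Sequence[bool]]],
    threshold: float = 0.1,
) -> List[str]:
    """Hypothetical helper: topics whose ECE exceeds `threshold`, sorted by name."""
    return sorted(
        topic
        for topic, (confs, correct) in results.items()
        if expected_calibration_error(confs, correct) > threshold
    )
```

A topic that the model answers with high confidence but low accuracy produces a large gap, so it is flagged. A topic whose confidence matches its accuracy scores close to zero.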

Function List

- Fine-grained knowledge graph construction: extracts entities and relationships from text to build structured knowledge graphs.

- Knowledge blind spot identification: locates weaknesses in a language model's knowledge based on the expected calibration error (ECE) metric.

- High-value Q&A generation: prioritizes Q&A data that targets long-tail knowledge to improve model performance.

- Multi-hop neighborhood sampling: captures multi-level relationships in the knowledge graph to increase data complexity.

- Style-controlled generation: supports diverse Q&A styles, such as concise or detailed, to suit different scenarios.

- Custom configuration: adjusts data types, input files, and output paths through YAML files.

- Gradio interface support: provides a visual interface that simplifies data generation.

- Model compatibility: supports multiple language models (e.g. Qwen, OpenAI) for data generation and training.
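The first feature, knowledge graph construction, amounts to turning extracted (head, relation, tail) triples into a graph structure. The sketch below assumes the triples have already been extracted (in GraphGen, the synthesizer model handles that step). It shows one way to deduplicate the triples and store the result as JSON. The node/edge layout and the function names are hypothetical choices, not GraphGen's on-disk format.

```python
import json
from typing import Dict, Iterable, List, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


def build_graph(triples: Iterable[Triple]) -> Dict[str, List]:
    """Deduplicate triples and return a JSON-serializable node/edge graph."""
    nodes = set()
    edges = []
    seen = set()
    for head, relation, tail in triples:
        key = (head.strip(), relation.strip(), tail.strip())
        if key in seen:  # the same fact is often extracted from several chunks
            continue
        seen.add(key)
        nodes.update((key[0], key[2]))
        edges.append({"source": key[0], "relation": key[1], "target": key[2]})
    return {"nodes": sorted(nodes), "edges": edges}


def save_graph(graph: Dict[str, List], path: str) -> None:
    """Write the graph as UTF-8 JSON, keeping non-ASCII entity names readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(graph, f, ensure_ascii=False, indent=2)
```

For example, the triples ("Marie Curie", "born_in", "Warsaw") and ("Warsaw", "capital_of", "Poland") yield three nodes and two edges. A repeated triple that differs only in surrounding whitespace is dropped.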

User Guide

Installation process

GraphGen is a Python project that supports installation from PyPI or running from source. Here are the detailed installation steps:

Installing from PyPI

- Install GraphGen

Make sure Python 3.8 or higher is installed, then run:

    pip install graphg

- Configuring Environment Variables

GraphGen calls a language model API (such as Qwen or OpenAI). Set the following environment variables in the terminal:

    export SYNTHESIZER_MODEL="your_synthesizer_model_name"
    export SYNTHESIZER_BASE_URL="your_base_url"
    export SYNTHESIZER_API_KEY="your_api_key"
    export TRAINEE_MODEL="your_trainee_model_name"
    export TRAINEE_BASE_URL="your_base_url"
    export TRAINEE_API_KEY="your_api_key"

SYNTHESIZER_MODEL is the model used to build knowledge graphs and generate data. TRAINEE_MODEL is the model to be trained.
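Before running graphg, it can help to confirm that the six variables above are actually set. This small Python sketch only reads the environment and never contacts an API. The name check_environment is hypothetical and is not part of GraphGen.

```python
import os
import sys
from typing import List, Mapping, Optional

# The six variables listed above, which GraphGen needs to reach the model APIs.
REQUIRED_VARS = (
    "SYNTHESIZER_MODEL",
    "SYNTHESIZER_BASE_URL",
    "SYNTHESIZER_API_KEY",
    "TRAINEE_MODEL",
    "TRAINEE_BASE_URL",
    "TRAINEE_API_KEY",
)


def check_environment(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems; an empty list means the setup looks complete."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append("Python 3.8 or higher is required")
    env = os.environ if env is None else env
    for name in REQUIRED_VARS:
        if not env.get(name):  # unset and empty values are both treated as missing
            problems.append(f"{name} is not set")
    return problems
```

If you call print(check_environment()) in the same shell where you ran the export commands, it lists any variables that are still missing.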

- Run the command line tool

Run the following command to generate data:

    graphg --output_dir cache

Installation from source

- Cloning the Repository

Clone the GraphGen repository locally:

    git clone https://github.com/open-sciencelab/GraphGen.git
    cd GraphGen

- Creating a Virtual Environment

Create and activate a virtual environment:

    python -m venv venv
    source venv/bin/activate    # Linux/Mac
    venv\Scripts\activate       # Windows

- Installing Dependencies

Install the project dependencies:

    pip install -r requirements.txt

Make sure PyTorch (1.13.1 or higher recommended) and related libraries (e.g. LiteLLM, DSPy) are installed. If you are using a GPU, install a CUDA-compatible build:

    pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117

- Configuring Environment Variables

Copy the example environment file and edit it:

    cp .env.example .env

In the .env file, set the model-related information:

    SYNTHESIZER_MODEL=your_synthesizer_model_name
    SYNTHESIZER_BASE_URL=your_base_url
    SYNTHESIZER_API_KEY=your_api_key
    TRAINEE_MODEL=your_trainee_model_name
    TRAINEE_BASE_URL=your_base_url
    TRAINEE_API_KEY=your_api_key

- Preparing Input Data

GraphGen expects input text in JSONL format. Example data is provided in resources/examples/raw_demo.jsonl. You can prepare your own data, but it must follow the same format.
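JSONL means one JSON object per line. The sketch below serializes plain texts into that shape and reports malformed lines before a generation run starts calling external model APIs. It assumes each record stores its text under a content key. That field name is an assumption, so open raw_demo.jsonl and match whatever key it actually uses.

```python
import json
from typing import Iterable, List


def to_jsonl(texts: Iterable[str], key: str = "content") -> str:
    """Serialize texts as JSONL: one {key: text} object per line."""
    # ensure_ascii=False keeps non-English text readable in the file.
    return "".join(json.dumps({key: text}, ensure_ascii=False) + "\n" for text in texts)


def invalid_lines(lines: Iterable[str], key: str = "content") -> List[int]:
    """Return 1-based numbers of lines that are not usable records."""
    bad = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # blank lines are skipped rather than flagged
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            bad.append(lineno)
            continue
        text = record.get(key) if isinstance(record, dict) else None
        if not isinstance(text, str) or not text.strip():
            bad.append(lineno)
    return bad
```

To check a file on disk, pass open(path, encoding="utf-8") to invalid_lines. An empty result means every non-blank line parsed as a record with non-empty text.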

Usage

GraphGen can be used from the command line or through a Gradio interface. Here are the detailed steps:

Command-line operation

- Modify the configuration file

Edit configs/graphgen_config.yaml to set the data generation parameters:

    data_type: "raw"
    input_file: "resources/examples/raw_demo.jsonl"
    output_dir: "cache"
    ece_threshold: 0.1
    sampling_hops: 2
    style: "detailed"

The parameters are:

- data_type: input data type (e.g. raw).
- input_file: path to the input file.
- output_dir: output directory.
- ece_threshold: ECE threshold for identifying knowledge blind spots.
- sampling_hops: depth of multi-hop sampling.
- style: Q&A generation style (e.g. detailed or concise).
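It can be useful to validate these parameters before starting a run. The dataclass below is a hypothetical sketch that mirrors the six keys listed above and rejects clearly invalid values. The ranges it enforces, and the restriction of style to detailed or concise, are assumptions based on this article, not GraphGen's own validation.

```python
from dataclasses import dataclass

# Assumption: the article names only these two styles.
ALLOWED_STYLES = {"detailed", "concise"}


@dataclass
class GenerationConfig:
    """Hypothetical mirror of the keys in configs/graphgen_config.yaml."""

    data_type: str = "raw"
    input_file: str = "resources/examples/raw_demo.jsonl"
    output_dir: str = "cache"
    ece_threshold: float = 0.1
    sampling_hops: int = 2
    style: str = "detailed"

    def __post_init__(self) -> None:
        # ECE is bounded by [0, 1], so a threshold outside that range is meaningless.
        if not 0.0 <= self.ece_threshold <= 1.0:
            raise ValueError("ece_threshold must be in [0, 1]")
        if self.sampling_hops < 1:
            raise ValueError("sampling_hops must be at least 1")
        if self.style not in ALLOWED_STYLES:
            raise ValueError(f"style must be one of {sorted(ALLOWED_STYLES)}")
        if not self.input_file.endswith(".jsonl"):
            raise ValueError("input_file should point to a JSONL file")
```

To check a real file, load it with PyYAML's yaml.safe_load and pass the resulting dict as keyword arguments: GenerationConfig(**yaml.safe_load(f)). Any key that the dataclass does not define, including a misspelled one, raises a TypeError. If your config contains additional keys, extend the class to include them.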

- Run the generation script

Run the following command to generate data:

    bash scripts/generate.sh

or run the Python module directly:

    python -m graphg --config configs/graphgen_config.yaml

- View Generated Results

The generated Q&A pairs are saved as JSONL files in the cache/data/graphgen directory:

    ls cache/data/graphgen
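This article does not document the exact fields in GraphGen's output records. A safe first check is therefore to count the records and see which top-level keys they contain. This Python sketch does only that. It assumes that every .jsonl file under the output directory holds one JSON object per line, and it searches recursively in case runs are stored in subfolders. The function names are hypothetical.

```python
import json
from collections import Counter
from pathlib import Path
from typing import Dict, Iterable, Iterator, Tuple


def jsonl_lines(directory: Path) -> Iterator[str]:
    """Yield every line of every .jsonl file under `directory`, recursively."""
    for path in sorted(directory.rglob("*.jsonl")):
        with path.open(encoding="utf-8") as f:
            yield from f


def summarize(lines: Iterable[str]) -> Tuple[int, Dict[str, int]]:
    """Count JSON-object records and how often each top-level key appears."""
    keys: Counter = Counter()
    total = 0
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        if isinstance(record, dict):
            total += 1
            keys.update(record.keys())
    return total, dict(keys)
```

For example, summarize(jsonl_lines(Path("cache/data/graphgen"))) returns the record count and a key histogram. A key that appears in fewer records than the total points to inconsistent output.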

Gradio Interface Operation

- Launching the Gradio Interface

Run the following command to start the visual interface:

    python webui/app.py

The Gradio interface then opens in the browser and shows the data generation process.

- Workflow

- Upload a JSONL-formatted input file in the interface.

- Configure the generation parameters (e.g. ECE threshold, sampling depth, generation style).

- Click the "Generate" button. The system processes the input and outputs the Q&A pairs.

- Download the generated JSONL file.

Featured Functions in Practice

- Knowledge graph construction: GraphGen automatically extracts entities and relationships from the input text, builds a knowledge graph, and saves it in JSON format. No manual intervention is required.

- Knowledge blind spot identification: GraphGen uses the ECE metric to analyze the model's prediction bias and generates targeted Q&A pairs. Adjust ece_threshold to control how strictly blind spots are screened.

- Multi-hop neighborhood sampling: captures multi-level relationships in the knowledge graph to generate complex Q&A pairs. Set sampling_hops to control the sampling depth.

- Style-controlled generation: multiple Q&A styles are supported for different scenarios. Select the style with the style parameter.
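Multi-hop sampling can be illustrated with a plain breadth-first k-hop expansion around a seed entity, where hops plays the role of sampling_hops. This is a generic sketch under that assumption. GraphGen's actual sampler may choose, weight, or cap neighbors differently, and the function name is hypothetical.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str, str]  # (head, relation, tail)


def k_hop_subgraph(edges: List[Edge], seed: str, hops: int) -> Tuple[Set[str], List[Edge]]:
    """Breadth-first expansion from `seed` up to `hops` steps, edges treated as undirected."""
    if hops < 0:
        raise ValueError("hops must be non-negative")
    adjacency: Dict[str, List[Edge]] = {}
    for edge in edges:
        head, _, tail = edge
        adjacency.setdefault(head, []).append(edge)
        adjacency.setdefault(tail, []).append(edge)

    depth = {seed: 0}  # hop distance of every node reached so far
    queue = deque([seed])
    picked: List[Edge] = []
    seen_edges: Set[Edge] = set()
    while queue:
        node = queue.popleft()
        if depth[node] == hops:
            continue  # nodes at the hop limit are kept but not expanded
        for edge in adjacency.get(node, []):
            if edge not in seen_edges:
                seen_edges.add(edge)
                picked.append(edge)
            head, _, tail = edge
            neighbor = tail if head == node else head
            if neighbor not in depth:
                depth[neighbor] = depth[node] + 1
                queue.append(neighbor)
    return set(depth), picked
```

With hops set to 2, a chain A-B-C-D seeded at A yields the nodes A, B, and C and the two edges linking them. Larger values pull in more distant relations. This gives longer reasoning chains to build Q&A pairs from, and also larger subgraphs to pass to the model.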

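Training the Model

The generated data can be used for supervised fine-tuning (SFT). Import the output file into a framework that supports SFT, such as XTuner. The exact arguments depend on your XTuner setup. XTuner training is usually driven by a config file that names the dataset and base model, so check its documentation. An example command:

    xtuner train --data cache/data/graphgen/output.jsonl --model qwen-7b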
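SFT frameworks usually expect a specific record layout, so you may need a conversion step between GraphGen's output and the trainer. The sketch below wraps each Q&A pair in the role/content "messages" layout that many chat SFT pipelines accept. The field names question and answer are placeholders. Inspect your GraphGen output and your XTuner dataset settings to see which formats actually apply.

```python
import json
from typing import Dict, Iterable, List


def to_chat_record(question: str, answer: str, system: str = "") -> Dict[str, List[Dict[str, str]]]:
    """Wrap one Q&A pair in a role/content "messages" record."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": question})
    messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


def convert(lines: Iterable[str], question_key: str = "question", answer_key: str = "answer") -> List[str]:
    """Convert JSONL Q&A lines; the key names are hypothetical placeholders."""
    out = []
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        chat = to_chat_record(record[question_key], record[answer_key])
        out.append(json.dumps(chat, ensure_ascii=False))
    return out
```

Writing the converted lines to a new .jsonl file leaves GraphGen's original output untouched, so you can try a different layout later without regenerating data.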

Caveats

- Make sure the API key is valid and the network connection is stable, because the generation process calls an external model.

- Input data must be in JSONL format; refer to raw_demo.jsonl.

- A GPU is recommended for large-scale data generation to improve performance.

- Check dependency versions to avoid conflicts, and update requirements.txt if necessary.

Supplementary Resources

- OpenXLab Application Center: users can try out GraphGen through OpenXLab.

- Official FAQ: refer to the FAQ on GitHub to solve common problems.

- Technical analysis: DeepWiki provides a system architecture analysis that describes GraphGen's workflow in detail.

Application Scenarios

- Academic research

Researchers can use GraphGen to generate Q&A data for specialized domains. For example, generating training data for a chemistry or medical model can improve the model's knowledge coverage.

- Enterprise AI optimization

Enterprises can use GraphGen to generate customized Q&A pairs for customer service or recommender systems, improving the quality of the conversational model's responses.

- Education platform development

Developers can generate diverse teaching Q&A data to build intelligent educational tools that support personalized learning.

Q&A

- What models does GraphGen support?

GraphGen supports OpenAI, Qwen, Ollama, and other models through LiteLLM. You need to provide each model's API key and address (base URL).

- How do I prepare the input data?

The input data should be in JSONL format, with each line containing one piece of text content. Refer to resources/examples/raw_demo.jsonl.

- How long does it take to generate data?

A small dataset (about 100 entries) can take a few minutes, while a large dataset can take hours, depending on the amount of input and the hardware performance.

- How does the Gradio interface work?

Run python webui/app.py, then upload the input file in your browser and configure the parameters to generate the data.