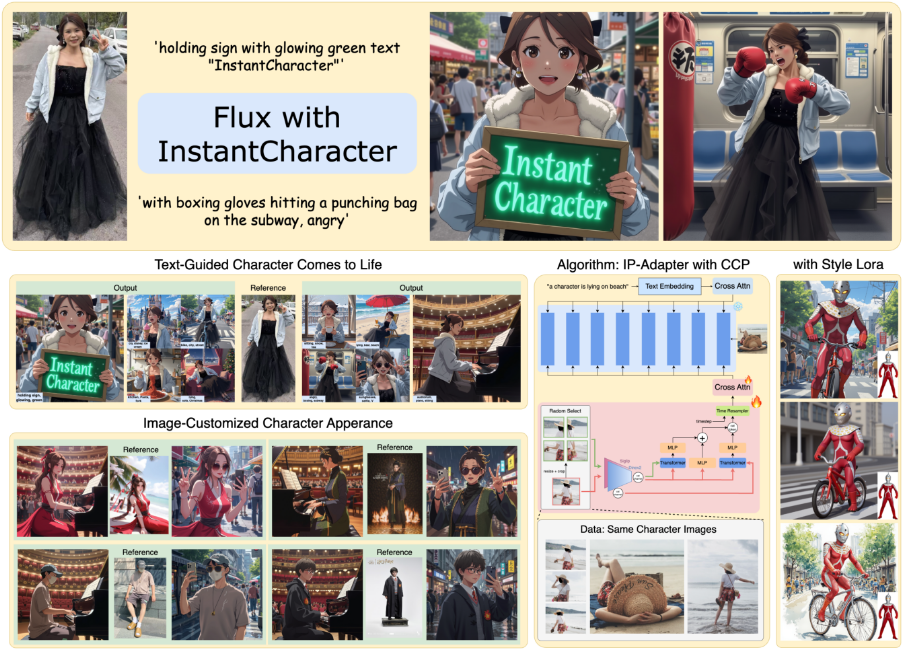

InstantCharacter: An Open Source Tool for Generating Consistent Characters from a Single Image

General Introduction

InstantCharacter is an open source project developed by Tencent Hunyuan and the InstantX team and hosted on GitHub. From a single reference image and a text description, it generates images of the same character across a variety of scenes and styles. The project is built on a Diffusion Transformer (DiT), which the authors present as overcoming limitations of the traditional U-Net architecture to deliver higher image quality and flexibility. Users can generate character images that match their descriptions through simple operation and without complex parameter tuning, which makes it well suited to animation, games and digital art. The project supports the Flux.1 model and provides style LoRA adaptation for stylized generation.

Feature List

- Single Image Generation for Consistent Characters: Generate character images for different scenes, actions and viewpoints with just one reference image.

- Text-driven generation: Adjust character movements, scenes and styles with text prompts, e.g. "girl playing guitar in the street".

- Style transfer support: Compatible with style LoRAs such as Ghibli style or Makoto Shinkai style for generating artistic images.

- High-quality image output: Generate high-resolution, detailed character images based on Flux.1 models.

- Open source model support: Built on open source text-to-image models such as Flux, so developers can freely extend its functionality.

- Lightweight adapter: A scalable adapter module reduces computational resource consumption and improves generation efficiency.

- Large-scale dataset optimization: Trained on a dataset of ten million samples to ensure character consistency and text controllability.

User Guide

Installation process

InstantCharacter requires a GPU environment, and an NVIDIA GPU is recommended (48 GB of VRAM recommended; with optimizations it can run on 24 GB). The installation steps are as follows:
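To check which side of the 48 GB / 24 GB thresholds a machine falls on, the short sketch below classifies GPU 0's memory against those figures. The helper names are illustrative and not part of InstantCharacter, and the thresholds are taken from this article rather than measured. Memory is counted in decimal gigabytes (bytes / 1e9) because that is how cards are labeled; a "24 GB" card reports slightly less than 24 GiB.

```python
# Thresholds taken from this article (assumption: not measured here).
RECOMMENDED_GB = 48
OPTIMIZED_GB = 24


def vram_tier(total_gb: float) -> str:
    """Classify a GPU's total memory (decimal GB) against the article's thresholds."""
    if total_gb >= RECOMMENDED_GB:
        return "recommended"
    if total_gb >= OPTIMIZED_GB:
        return "optimized"
    return "insufficient"


def detect_vram_gb():
    """Total memory of CUDA device 0 in decimal GB, or None if torch/CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    # total_memory is reported in bytes; divide by 1e9 to match vendor "GB" labels
    return torch.cuda.get_device_properties(0).total_memory / 1e9


if __name__ == "__main__":
    gb = detect_vram_gb()
    if gb is None:
        print("No CUDA device detected")
    else:
        print(f"GPU 0: {gb:.1f} GB -> {vram_tier(gb)}")
```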

- Environment preparation:

- Install Python 3.8 or later.

- Install PyTorch with CUDA support (recommended):

    pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

- Install the dependency libraries with the following command:

    pip install transformers accelerate diffusers huggingface_hub

- Make sure Git and Git LFS are installed on your system for downloading large files.

- Clone the repository:

- Open a terminal and run the following command to clone the InstantCharacter repository:

    git clone https://github.com/Tencent/InstantCharacter.git
    cd InstantCharacter

- Download the model checkpoints:

- Download the model from Hugging Face:

    huggingface-cli download --resume-download Tencent/InstantCharacter --local-dir checkpoints --local-dir-use-symlinks False

- If you cannot access Hugging Face, use a mirror address:

    export HF_ENDPOINT=https://hf-mirror.com
    huggingface-cli download --resume-download Tencent/InstantCharacter --local-dir checkpoints --local-dir-use-symlinks False

- Once the download is complete, check that the project folder contains the checkpoints, assets, models and other directories.

- Set up the runtime environment:

- Make sure the GPU driver and CUDA version are compatible with PyTorch.

- Move the pipeline onto a CUDA-enabled device by running pipe.to("cuda").
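Before moving on to usage, a quick preflight check can catch the most common setup gaps. The script below is a hypothetical helper and not part of the repository. It uses only the standard library to confirm the Python version and to look up the installed packages without importing them (huggingface_hub is the package that provides the huggingface-cli command). It then checks CUDA once torch is present and confirms that the adapter checkpoint used in the Usage section exists under checkpoints/.

```python
import importlib.util
import sys
from pathlib import Path

# Module names for the packages installed above.
REQUIRED_MODULES = ["torch", "transformers", "accelerate", "diffusers", "huggingface_hub"]
# Adapter weights path used by the loading example in the Usage section.
IP_ADAPTER_FILE = Path("checkpoints") / "instantcharacter_ip-adapter.bin"


def missing_modules(names):
    """Return the top-level module names that cannot be found (nothing is imported)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def _cuda_available():
    import torch  # only called once torch is known to be installed
    return torch.cuda.is_available()


def preflight(min_python=(3, 8), checkpoint=IP_ADAPTER_FILE):
    """Collect human-readable problems; an empty list means the basic setup looks complete."""
    problems = []
    if sys.version_info < min_python:
        found = ".".join(map(str, sys.version_info[:3]))
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        problems.append("missing packages: " + ", ".join(missing))
    elif not _cuda_available():
        problems.append("torch is installed but CUDA is not available")
    if not Path(checkpoint).is_file():
        problems.append(f"checkpoint not found: {checkpoint}")
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("\n".join(f"- {i}" for i in issues) if issues else "Setup looks complete")
```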

Usage

The core function of InstantCharacter is to generate character images from reference images and text prompts. Below is the detailed procedure:

1. Loading models and adapters

- Use the provided pipeline.py script to load the base model and adapters. Sample code:

    import torch
    from PIL import Image
    from pipeline import InstantCharacterFluxPipeline  # pipeline.py from the cloned repository

    # Set a seed for reproducibility
    seed = 123456

    # Base model, adapter checkpoint and image encoders
    base_model = 'black-forest-labs/FLUX.1-dev'
    ip_adapter_path = 'checkpoints/instantcharacter_ip-adapter.bin'
    image_encoder_path = 'google/siglip-so400m-patch14-384'
    image_encoder_2_path = 'facebook/dinov2-giant'

    # Initialize the pipeline, move it to the GPU and attach the adapter
    pipe = InstantCharacterFluxPipeline.from_pretrained(base_model, torch_dtype=torch.bfloat16)
    pipe.to("cuda")
    pipe.init_adapter(
        image_encoder_path=image_encoder_path,
        image_encoder_2_path=image_encoder_2_path,
        subject_ipadapter_cfg=dict(subject_ip_adapter_path=ip_adapter_path, nb_token=1024),
    )

- The code above loads the Flux.1 model and InstantCharacter's IP adapter and runs the model on the GPU.
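When checkpoints live somewhere other than the default locations, it can help to keep the loading paths in one place. The following is a minimal sketch: InstantCharacterPaths and load_pipeline are hypothetical names, and the defaults are copied from the loading example. adapter_kwargs() only reproduces the keyword arguments that the example passes to pipe.init_adapter(), and it makes no assumptions about other options that method may accept. The torch and pipeline imports are deferred, so the configuration can be built and inspected on a machine without a GPU stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstantCharacterPaths:
    """Hypothetical config holder; defaults are the values from the loading example."""
    base_model: str = "black-forest-labs/FLUX.1-dev"
    ip_adapter_path: str = "checkpoints/instantcharacter_ip-adapter.bin"
    image_encoder_path: str = "google/siglip-so400m-patch14-384"
    image_encoder_2_path: str = "facebook/dinov2-giant"
    nb_token: int = 1024

    def adapter_kwargs(self) -> dict:
        """Keyword arguments in the shape the example passes to pipe.init_adapter()."""
        return {
            "image_encoder_path": self.image_encoder_path,
            "image_encoder_2_path": self.image_encoder_2_path,
            "subject_ipadapter_cfg": {
                "subject_ip_adapter_path": self.ip_adapter_path,
                "nb_token": self.nb_token,
            },
        }


def load_pipeline(paths=None, device="cuda"):
    """Build the pipeline as in the example; heavy imports happen only when called."""
    import torch
    from pipeline import InstantCharacterFluxPipeline  # pipeline.py from the cloned repository

    paths = paths or InstantCharacterPaths()
    pipe = InstantCharacterFluxPipeline.from_pretrained(paths.base_model, torch_dtype=torch.bfloat16)
    pipe.to(device)
    pipe.init_adapter(**paths.adapter_kwargs())
    return pipe
```

For example, load_pipeline(InstantCharacterPaths(ip_adapter_path="/mnt/models/instantcharacter_ip-adapter.bin")) would point at a relocated adapter file (the path is illustrative).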

2. Prepare the reference image

- Select an image that contains a character (e.g. assets/girl.jpg) and make sure the background is simple (e.g. a white background).

- Load the image and convert it to RGB format:

    ref_image_path = 'assets/girl.jpg'
    ref_image = Image.open(ref_image_path).convert('RGB')
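A plain .convert('RGB') call in Pillow drops the alpha channel without compositing. A cut-out PNG with a transparent background can therefore end up showing whatever color is stored under the transparent pixels (often black) instead of the simple white background recommended above. The minimal sketch below assumes Pillow and flattens transparency onto white first. load_reference_image and blend_over_white are hypothetical helpers; blend_over_white spells out, for a single 8-bit pixel, the "over" compositing that Image.alpha_composite performs across the whole image.

```python
def blend_over_white(r: int, g: int, b: int, a: int) -> tuple:
    """Composite one 8-bit RGBA pixel over opaque white and return the RGB result."""
    return tuple(round((c * a + 255 * (255 - a)) / 255) for c in (r, g, b))


def load_reference_image(path: str):
    """Open a reference image, flatten any transparency onto white, then convert to RGB."""
    from PIL import Image  # Pillow, as used by the example above

    img = Image.open(path)
    has_alpha = img.mode in ("RGBA", "LA") or (img.mode == "P" and "transparency" in img.info)
    if has_alpha:
        rgba = img.convert("RGBA")
        white = Image.new("RGBA", rgba.size, (255, 255, 255, 255))
        img = Image.alpha_composite(white, rgba)  # draw the image over the white canvas
    return img.convert("RGB")
```

Calling ref_image = load_reference_image('assets/girl.jpg') is a drop-in replacement for the two lines above. For images without transparency it behaves the same as the original .convert('RGB').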

3. Generate character images (without style transfer)

- Generate an image from a text prompt, setting parameters such as the number of inference steps and the guidance scale:

    prompt = "A girl is playing a guitar in street"
    image = pipe(
        prompt=prompt,
        num_inference_steps=28,
        guidance_scale=3.5,
        subject_image=ref_image,
        subject_scale=0.9,
        generator=torch.manual_seed(seed),
    ).images[0]
    image.save("flux_instantcharacter.png")

- subject_scale controls character consistency; lower values (e.g. 0.6 or 0.8) favor stylization.
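Because subject_scale trades consistency against stylization, it can help to render the same prompt at a few values while holding the seed fixed, so that only subject_scale changes between images. In the sketch below, sweep_subject_scale is a hypothetical helper. It assumes pipe, ref_image and seed come from the steps above and that the pipeline call accepts exactly the keyword arguments shown in the example. make_generator can be swapped out only so the loop can be exercised without a GPU; by default it uses torch.manual_seed, as in the example.

```python
def sweep_subject_scale(pipe, ref_image, prompt, scales, seed=123456,
                        make_generator=None, out_prefix="flux_instantcharacter"):
    """Render one image per subject_scale value with a fixed seed; return the saved paths."""
    if make_generator is None:
        import torch
        make_generator = torch.manual_seed
    saved = []
    for scale in scales:
        image = pipe(
            prompt=prompt,
            num_inference_steps=28,
            guidance_scale=3.5,
            subject_image=ref_image,
            subject_scale=scale,
            generator=make_generator(seed),  # re-seed every run so only the scale differs
        ).images[0]
        path = f"{out_prefix}_scale{scale:.1f}.png"
        image.save(path)
        saved.append(path)
    return saved
```

A call such as sweep_subject_scale(pipe, ref_image, prompt, [0.6, 0.8, 0.9]) writes three images that can be compared side by side.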

4. Style transfer with a style LoRA

- InstantCharacter supports style LoRAs (e.g. Ghibli or Makoto Shinkai style). Load a LoRA file and generate a stylized image:

    lora_file_path = 'checkpoints/style_lora/ghibli_style.safetensors'
    trigger = 'ghibli style'
    prompt = "A girl is playing a guitar in street"
    image = pipe.with_style_lora(
        lora_file_path=lora_file_path,
        trigger=trigger,
        prompt=prompt,
        num_inference_steps=28,
        guidance_scale=3.5,
        subject_image=ref_image,
        subject_scale=0.9,
        generator=torch.manual_seed(seed),
    ).images[0]
    image.save("flux_instantcharacter_style_ghibli.png")

- Change lora_file_path and trigger to switch between styles (e.g. Makoto_Shinkai_style.safetensors).
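Switching styles comes down to swapping the lora_file_path and trigger pair, so a small lookup table keeps each pair together. The sketch below is hypothetical: the registry holds only the Ghibli entry shown above, and other styles should be added with the trigger phrase documented for that LoRA. It calls pipe.with_style_lora with the same arguments as the example and assumes nothing else about that method.

```python
# Hypothetical registry: style name -> (LoRA file, trigger phrase).
# Only the Ghibli entry comes from the example; add others with their documented triggers.
STYLE_LORAS = {
    "ghibli": ("checkpoints/style_lora/ghibli_style.safetensors", "ghibli style"),
}


def generate_styled(pipe, style, prompt, ref_image, seed=123456, subject_scale=0.9,
                    make_generator=None):
    """Render `prompt` in a registered style, save the image and return its path."""
    if style not in STYLE_LORAS:
        raise KeyError(f"unknown style {style!r}; registered: {sorted(STYLE_LORAS)}")
    if make_generator is None:
        import torch
        make_generator = torch.manual_seed
    lora_file_path, trigger = STYLE_LORAS[style]
    image = pipe.with_style_lora(
        lora_file_path=lora_file_path,
        trigger=trigger,
        prompt=prompt,
        num_inference_steps=28,
        guidance_scale=3.5,
        subject_image=ref_image,
        subject_scale=subject_scale,
        generator=make_generator(seed),
    ).images[0]
    out_path = f"flux_instantcharacter_style_{style}.png"
    image.save(out_path)
    return out_path
```

An unregistered name fails early with a KeyError, before any LoRA is loaded.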

5. Run the Gradio interface (optional)

- The project provides a Gradio interface for interactive use. Run app.py:

    python app.py

- Open the local address (e.g. http://127.0.0.1:7860) in a browser, upload a reference image and enter a prompt to generate an image.
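If app.py loads the model before starting the web server, as Gradio demos commonly do, the page may not be reachable for a while after launch. The standard-library sketch below polls the port and opens the browser once the server accepts connections. It assumes the app listens on Gradio's default port 7860, as in the example address. is_listening and wait_for_server are hypothetical helpers and not part of the project.

```python
import socket
import time


def is_listening(host: str = "127.0.0.1", port: int = 7860, timeout: float = 1.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def wait_for_server(host="127.0.0.1", port=7860, deadline_s=120.0, poll_s=2.0) -> bool:
    """Poll until the server accepts connections or `deadline_s` seconds have passed."""
    end = time.monotonic() + deadline_s
    while time.monotonic() < end:
        if is_listening(host, port):
            return True
        time.sleep(poll_s)
    return False


if __name__ == "__main__":
    import webbrowser

    if wait_for_server():
        webbrowser.open("http://127.0.0.1:7860")
    else:
        print("Gradio app did not come up on port 7860")
```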

Caveats

- Generation of animal characters may be unstable; human character images are recommended.

- Higher-resolution generation requires more video memory; an A100 or RTX 5000 series GPU is recommended.

- Model downloads may be interrupted by network issues; using the --resume-download parameter is recommended.
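A little arithmetic shows why resolution drives resource use so strongly. Assume the standard FLUX.1 latent layout used by diffusers' Flux pipelines: the VAE downsamples by 8 in each direction, and the transformer packs 2×2 latent patches into one token. Under that assumption, the number of image tokens grows with pixel area, and full self-attention scores every token against every other one. The sketch below ignores text tokens and InstantCharacter's adapter tokens, so it illustrates the trend rather than predicting actual memory use.

```python
def flux_image_tokens(height: int, width: int, vae_factor: int = 8, patch: int = 2) -> int:
    """Image tokens seen by the transformer, assuming 8x VAE downsampling and 2x2 patch packing."""
    step = vae_factor * patch
    if height % step or width % step:
        raise ValueError(f"height and width must be multiples of {step}")
    return (height // step) * (width // step)


if __name__ == "__main__":
    for side in (512, 1024, 2048):
        n = flux_image_tokens(side, side)
        # n * n = pairwise query-key scores per head in one attention layer (image tokens only)
        print(f"{side}x{side}: {n} image tokens, {n * n:,} attention scores per head")
```

Doubling the side length from 1024 to 2048 quadruples the image tokens (4,096 to 16,384) and multiplies the pairwise attention scores by 16.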

Application Scenarios

- Animation and Film Production

- Quickly generate character concept art or storyboards to shorten the pre-production design cycle. Users can upload character sketches, enter scene descriptions (e.g. "character running in the forest") and generate consistent images for animation previews or character tests.

- Game Development

- Generate multi-view, multi-pose images of game characters. Developers upload character designs and generate sprites in different poses (e.g. "character swinging a sword") for use as 2D or 3D game assets.

- Digital Art Creation

- Artists use style LoRAs to generate artistic character images, such as converting real people into Ghibli style, for illustration or NFT creation.

- Social Media Content

- Users upload selfies to generate anime-style avatars or dynamic scenes (e.g., "Dancing by myself in a sci-fi city") for personalized social media content.

QA

- What models does InstantCharacter support?

- Currently supports Flux.1 models, and may be extended to other open source text-to-image models in the future.

- How much video memory is needed to run it?

- 48 GB of video memory is recommended, and optimizations allow it to run on 24 GB. Quantization techniques are being developed to further reduce memory requirements.

- How do I fix a model download failure?

- Use the Hugging Face mirror address or the --resume-download parameter, and make sure your network connection is stable.

- Why are the generated animal characters unstable?

- The training data is dominated by human characters and animal features are under-optimized, so human character images are recommended.

- Can it be used commercially?

- The current license permits research use only; commercial use requires authorization from Tencent.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
