JetBrains, a provider of software development tools, recently announced that it has open-sourced the base version of Mellum, its language model designed specifically for code completion, and made it available on the Hugging Face platform. The move is designed to drive transparency and collaboration in the field of AI in software development.

Rather than trying to do everything, Mellum's design focuses on one core task: code completion. JetBrains refers to this type of model as a specialized model, emphasizing that the goal is deep capability in a specific domain rather than broad generality for its own sake. The model supports code completion in a variety of programming languages, including Java, Kotlin, Python, Go, PHP, C, C++, C#, JavaScript, TypeScript, CSS, HTML, Rust and Ruby.

The open-source Mellum-4b-base is the first model in the Mellum family. JetBrains plans to expand the family with more specialized models for different coding tasks (e.g., diff prediction).

The Considerations Behind Open Source

The decision to make Mellum open source was not taken lightly. Rather than fine-tuning an existing open-source model, JetBrains trained Mellum from the ground up to power cloud-based code completion in its IDE products, and released it publicly last year.

JetBrains says the decision to open-source Mellum rests on a belief in the power of transparency, collaboration and shared progress. From Linux and Git to Node.js and Docker, the open-source paradigm has been a key driver of major leaps in technology. Given that some open-source LLMs already outperform models from industry leaders, the overall development of AI may well follow a similar trajectory.

The move also means JetBrains is opening up one of its core technologies to the community. By releasing Mellum on Hugging Face, the company is offering researchers, educators and advanced technical teams the opportunity to examine the inner workings of a specialized model. JetBrains presents this as more than providing a tool: it is an investment in open research and collaboration.

What is a specialized model?

In the field of machine learning, specialization is not a new concept. It is a core approach that has guided model design for decades: building models to solve specific tasks efficiently and effectively. In recent years, however, the discussion in AI has shifted toward covering all tasks with general-purpose large models, which often comes with significant computational and environmental costs.

Specialized models instead return to the original purpose of specialization: building models that perform well in specific domains.

An analogy can be drawn to "T-shaped skills": an individual has a broad understanding of many topics (breadth of knowledge, the horizontal bar of the T) but deep expertise in one particular domain (depth of knowledge, the vertical bar of the T). Specialized models follow the same idea. They are not built to handle everything; they excel at a single task, which is what makes them valuable in a particular domain.

Mellum is the embodiment of this philosophy. It is a relatively small, efficient model designed for code-related tasks, starting with code completion.

The reasoning behind this approach is that not all problems require general-purpose solutions, and not all teams have the resources or the need to run large, all-encompassing models. Specialized models such as Mellum offer distinct advantages:

- Precision on domain-specific tasks.

- Cost-effective operation and deployment.

- Lower compute requirements and a smaller carbon footprint.

- Greater accessibility for researchers, educators, and small teams.

This is not a technological step backwards, but rather an application of proven specialization principles to modern AI problems. JetBrains sees this as a smarter way forward.

How does Mellum perform?

Mellum is a multilingual 4B-parameter model (Mellum-4b-base) optimized specifically for code completion. JetBrains benchmarked it on multiple datasets across multiple languages and performed extensive manual evaluation in its IDEs.

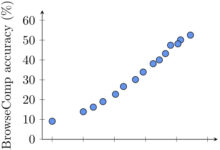

The table below compares Mellum with several models that have larger parameter counts (full details, results, and comparisons can be found in the model card on Hugging Face):

| Model | HumanEval Infilling (single line) | HumanEval Infilling (multiple lines) | RepoBench 1.1 (2K context, py) | SAFIM (average) |
|---|---|---|---|---|
| Mellum-4B-base | 66.2 | 38.5 | 28.2 | 38.1 |
| InCoder-6B | 69.0 | 38.6 | - | 33.8 |
| CodeLlama-7B-base | 83.0 | 50.8 | 34.1 | 45.0 |
| CodeLlama-13B-base | 85.6 | 56.1 | 36.2 | 52.8 |
| DeepSeek-Coder-6.7B | 80.7 | - | - | 63.4 |

Note: HumanEval Infilling tests the ability to fill in missing code, RepoBench evaluates performance in the context of a real code base, and SAFIM is another fill-in-the-middle code-completion benchmark. The comparison models include the CodeLlama family from Meta and DeepSeek-Coder from DeepSeek.
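To make the infilling task concrete, here is a minimal sketch of how a single-line example can be built from a piece of source code: one line is hidden, and the model sees only the code before and after the gap. The names `InfillingExample`, `make_single_line_example` and `exact_match` are hypothetical, and exact-match scoring is a simplification chosen for illustration; the benchmarks in the table each define their own metrics.

```python
from dataclasses import dataclass


@dataclass
class InfillingExample:
    prefix: str  # code before the gap, shown to the model
    middle: str  # the hidden line the model is asked to produce
    suffix: str  # code after the gap, shown to the model


def make_single_line_example(source: str, line_index: int) -> InfillingExample:
    """Hide one line of `source` and keep the rest as context."""
    lines = source.splitlines(keepends=True)
    return InfillingExample(
        prefix="".join(lines[:line_index]),
        middle=lines[line_index],
        suffix="".join(lines[line_index + 1:]),
    )


def exact_match(prediction: str, example: InfillingExample) -> bool:
    """Simplified scoring: compare while ignoring surrounding whitespace."""
    return prediction.strip() == example.middle.strip()


snippet = "def add(a, b):\n    result = a + b\n    return result\n"
example = make_single_line_example(snippet, 1)
```

A model evaluated on `example` would receive `example.prefix` and `example.suffix` and be expected to produce something equivalent to `result = a + b`.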

The data suggests that, despite its small parameter count, Mellum is competitive on some benchmarks once its size is taken into account. For example, it scores higher than the larger InCoder-6B on SAFIM (38.1 versus 33.8), although the CodeLlama models lead on every benchmark listed. JetBrains sees this as support for the idea that specialized models can perform efficiently on specific tasks. Parameter count is not the only measure of a model's capability; task-specific optimization also matters.

Who is Mellum for?

To be clear, the current version of Mellum released on Hugging Face is not primarily aimed at the average end developer, who may not be directly fine-tuning or deploying the model.

The model is aimed primarily at the following groups:

- AI/ML Researchers: In particular, academics who are exploring the role of AI in software development, conducting benchmarking, or investigating model interpretability.

- AI/ML engineers and educators: It can be used as a basis for learning how to build, fine-tune, and adapt domain-specific language models, or to support educational programs focused on LLM architecture and specialization.

Experience Mellum Now

The Mellum base model is now available on Hugging Face. JetBrains emphasizes that this is just the beginning and that its goal is not generality but focused, efficient tools. Anyone who wants to explore, experiment with, or build on Mellum can access and try the model today.
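For readers who want to try the model, the following is a minimal sketch of plain left-to-right completion using the Hugging Face `transformers` library. It assumes that the repository ID is `JetBrains/Mellum-4b-base`, that `transformers` and `torch` are installed, and that there is enough memory and disk space for a 4B-parameter model. The helper name `complete` is hypothetical, and the model card remains the authoritative source for recommended usage, including any fill-in-the-middle prompt format.

```python
MODEL_ID = "JetBrains/Mellum-4b-base"  # assumed repository ID; verify on Hugging Face


def complete(prefix: str, max_new_tokens: int = 32) -> str:
    """Return the model's continuation of `prefix`.

    The weights are downloaded on first use, which may take a while.
    """
    # Imported lazily so this module loads without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Only input IDs and the attention mask are passed on to generate().
    inputs = tokenizer(prefix, return_tensors="pt", return_token_type_ids=False)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `complete("def fibonacci(n):\n")` would return the model's suggested continuation of the function. The model is reloaded on every call here for simplicity; a real integration would load it once and reuse it.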