Background: Challenges of Integrating a RAG Knowledge Base with n8n

n8n is gaining traction as a powerful open source workflow automation tool. Founded in 2019 by Jan Oberhauser, who previously worked in visual effects on films such as Pirates of the Caribbean, it aims to provide a more flexible and less costly automation solution than tools like Zapier. With a philosophy of being "free and sustainable, open and pragmatic," n8n centers on automation through a dual visual-and-code mode, letting users connect different applications to automate complex processes (official documentation: https://docs.n8n.io/). Once users are familiar with it, building simple workflows is usually fast, and workflows can be published to the public internet with one click, which is very convenient.

n8n is often described as the "Lego of automation," a nod to its flexibility and composability.

However, as AI applications become more popular, a common need surfaces: how can a RAG (Retrieval-Augmented Generation) knowledge base be integrated efficiently into n8n? RAG combines information retrieval with text generation, enabling large language models to consult an external knowledge base when answering questions and thus provide more accurate, context-aware answers.
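To make the retrieve-then-generate idea concrete, here is a toy sketch. It assumes simple keyword overlap as a stand-in for the embedding search a real knowledge base would use, and it only assembles the augmented prompt; no LLM is called. The function names are illustrative, not part of n8n or FastGPT.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lowercase alphanumeric tokens; a real system would embed text instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    # Rank passages by how many words they share with the question.
    q = tokenize(question)
    scored = [(len(q & tokenize(p)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    # "Augment" step: place the retrieved text in front of the question.
    lines = "\n".join(f"- {c}" for c in context) or "- (no relevant passages)"
    return (
        "Answer using only the context below.\n"
        f"Context:\n{lines}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    kb = [
        "FastGPT supports MCP starting from v4.9.6.",
        "n8n is an open source workflow automation tool.",
        "The MCP server port is usually 3005.",
    ]
    question = "Which FastGPT version supports MCP?"
    # The resulting prompt would then be sent to an LLM (not shown here).
    print(build_prompt(question, retrieve(question, kb)))
```

The generation step is deliberately left out: the point is that the model answers from text it was handed, which is what makes RAG answers traceable to the knowledge base.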

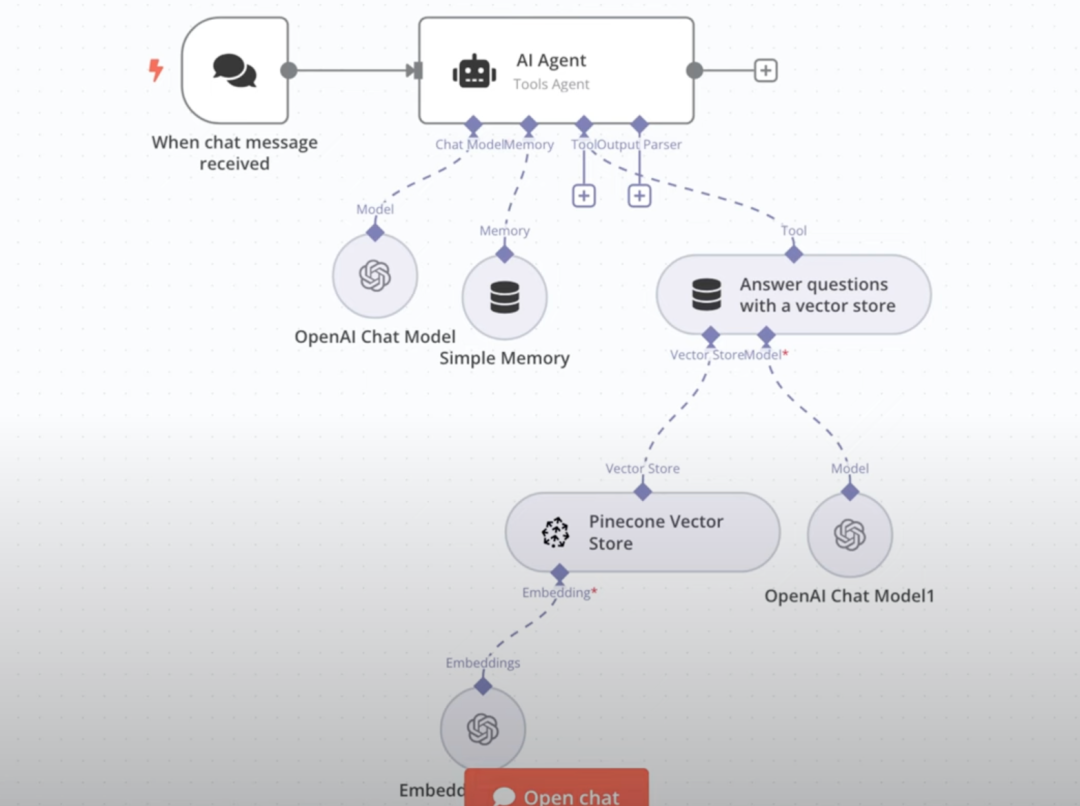

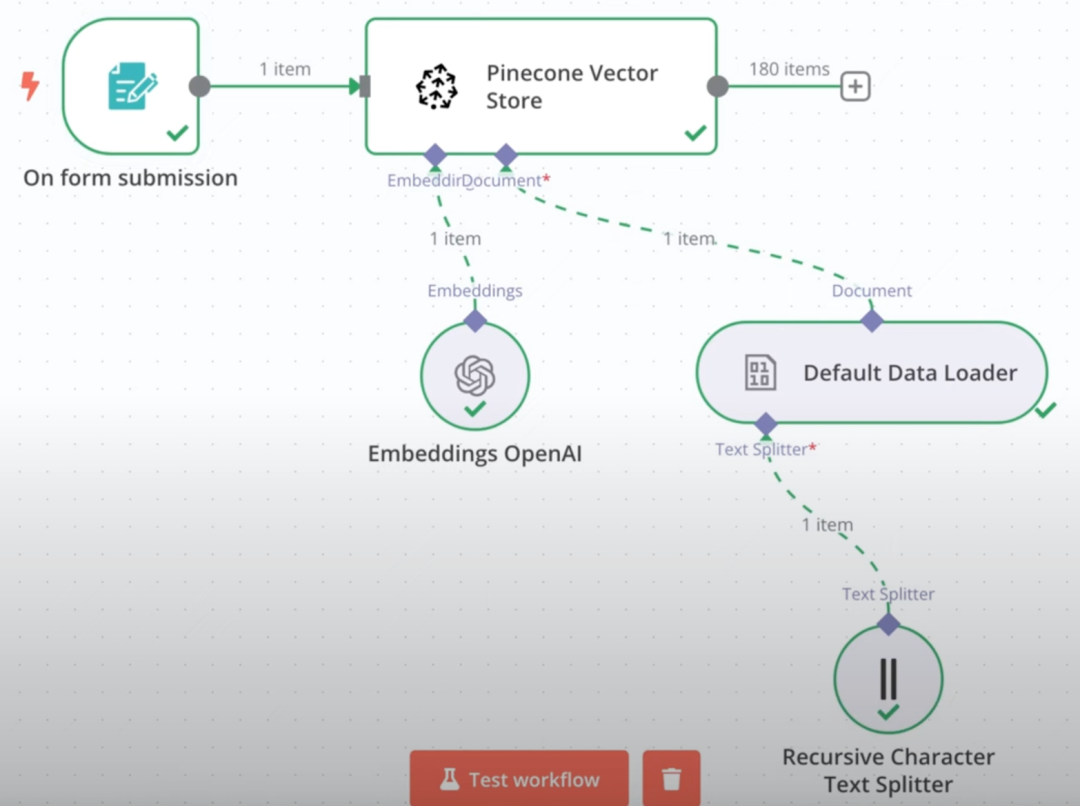

Building a RAG knowledge base directly in n8n has proven to be relatively complex. It typically requires developers to build two separate workflows by hand: one that handles file uploads, vectorization, and storage in a database, and another that implements RAG-based Q&A interactions.

Example: File upload workflow on the left, RAG Q&A workflow on the right.
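The two workflows correspond to two pipelines over one shared store. The sketch below assumes a hashed bag-of-words vector in place of a real embedding model and an in-memory list in place of a vector database; both substitutions are for illustration only.

```python
import math
import re
import zlib

DIM = 256  # hypothetical vector size for the toy embedding


def embed(text: str) -> list[float]:
    # Hash each word into one of DIM buckets, then L2-normalize.
    # crc32 is used instead of hash() because str hashing is salted per process.
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def chunk(text: str, size: int = 40) -> list[str]:
    # Split a document into chunks of at most `size` words.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class InMemoryStore:
    def __init__(self) -> None:
        self.rows: list[tuple[list[float], str]] = []

    # Workflow 1: upload -> chunk -> vectorize -> store
    def ingest(self, document: str, size: int = 40) -> int:
        pieces = chunk(document, size)
        self.rows.extend((embed(p), p) for p in pieces)
        return len(pieces)

    # Workflow 2: question -> vectorize -> nearest chunks (cosine similarity)
    def search(self, question: str, k: int = 3) -> list[tuple[float, str]]:
        q = embed(question)
        scored = [(sum(a * b for a, b in zip(q, v)), t) for v, t in self.rows]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]
```

Because vectors are normalized at ingestion time, the dot product in `search` equals cosine similarity. In n8n each of these two methods becomes its own workflow, which is where much of the setup effort comes from.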

Even when successfully built, this native solution often delivers an unsatisfying user experience. This prompted us to ask: is it possible to plug a mature external knowledge base solution, such as the popular FastGPT, into n8n?

Solution: Using FastGPT and MCP

FastGPT is a highly acclaimed open source LLM application platform, known especially for its powerful RAG features and ease of use. A recent FastGPT update (see its GitHub repository: https://github.com/labring/FastGPT) brings an exciting feature: support for MCP (Model Context Protocol).

MCP is designed to solve the interoperability problem between different AI applications and services and can be understood as a "universal socket" protocol. FastGPT not only supports accessing other services as an MCP client, but can also act as an MCP server, exposing its capabilities (e.g., its knowledge base) to other MCP-compatible platforms.
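Under the hood, MCP messages are JSON-RPC 2.0 requests, and `tools/list` and `tools/call` are method names from the MCP specification. The sketch below only builds those request bodies; the tool name `kb_search` and its `question` argument are hypothetical placeholders, not FastGPT's actual schema, and transport and session handling are left out.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # request ids must not be reused within a session


def rpc_request(method: str, params: Optional[dict] = None) -> str:
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def list_tools() -> str:
    # Ask the server which tools (e.g. published FastGPT apps) it exposes.
    return rpc_request("tools/list")


def call_tool(name: str, arguments: dict) -> str:
    # Invoke one tool by name; arguments should match the tool's inputSchema.
    return rpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # A real session sends "initialize" first; omitted here for brevity.
    print(list_tools())
    print(call_tool("kb_search", {"question": "How do I enable MCP?"}))
```

This shared message format is what makes the "universal socket" analogy work: n8n (the client) and FastGPT (the server) only need to agree on these methods, not on each other's internals.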

Coincidentally, n8n has also recently added official MCP support. This means FastGPT's knowledge base capabilities can be published through MCP and then used as a "tool" in n8n. This approach has proven feasible in practice, and the integration process is quite smooth. Because it relies on FastGPT's mature RAG implementation, the resulting knowledge base Q&A quality is often very good.

The whole operation process can be summarized in the following steps:

- Deploy the n8n environment (local or server).

- Deploy or upgrade FastGPT to a version that supports MCP.

- Create and configure the MCP-Server in FastGPT to provide services externally.

- Access this FastGPT MCP-Server in an n8n workflow.

Deploying or upgrading FastGPT

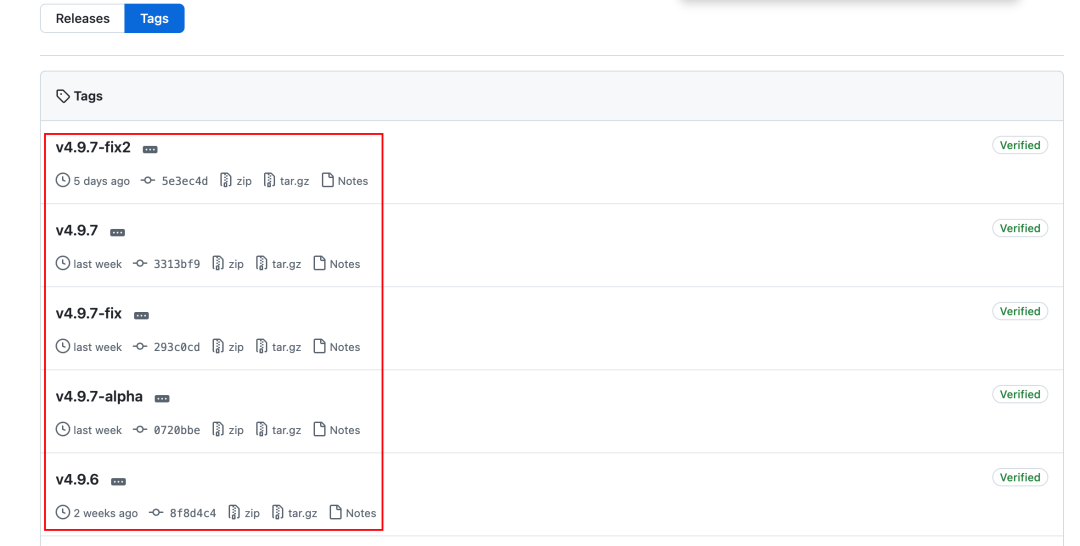

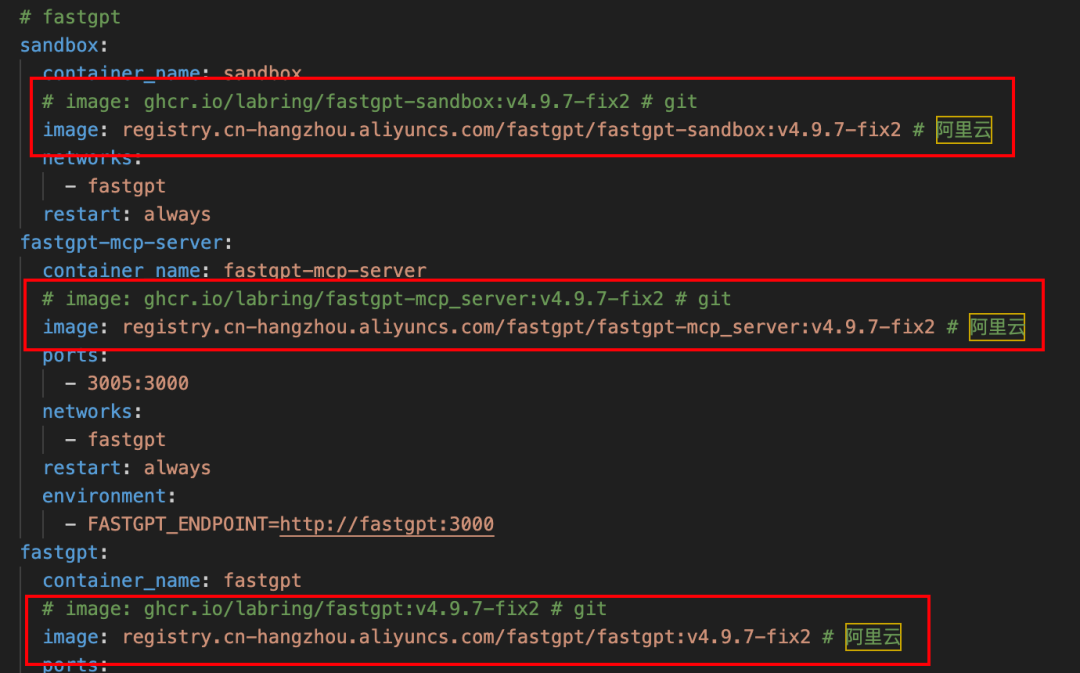

FastGPT has supported MCP since v4.9.6, and the latest stable version (v4.9.7-fix2 at the time of writing) is recommended. The following describes deploying or upgrading with docker-compose (a pre-installed Docker environment is required).

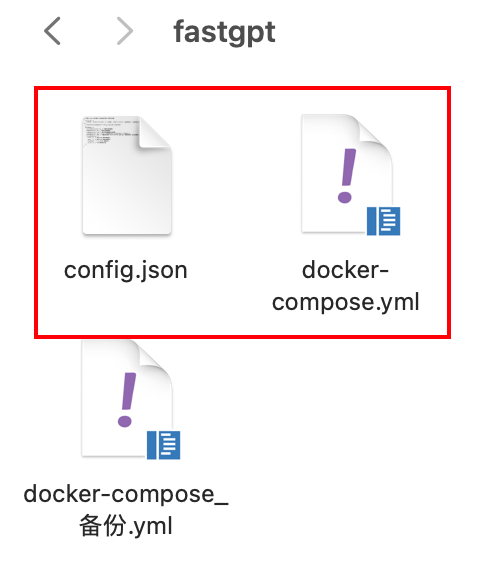

The following two files need to be prepared:

- The latest FastGPT docker-compose.yml file.

- The latest FastGPT config.json file.

Both files can be obtained from FastGPT's GitHub repository:

- docker-compose-pgvector.yml: https://github.com/labring/FastGPT/blob/main/deploy/docker/docker-compose-pgvector.yml

- config.json: https://raw.githubusercontent.com/labring/FastGPT/refs/heads/main/projects/app/data/config.json
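If you prefer to script the download, a minimal sketch follows. It assumes the repository paths linked above are still valid (they may move in later releases). GitHub "blob" links return an HTML page rather than the file itself, so the compose link is rewritten to its raw.githubusercontent.com equivalent. Note that `download_all` overwrites existing files in the target directory, so back up first when upgrading.

```python
import urllib.request
from pathlib import Path

COMPOSE_URL = ("https://github.com/labring/FastGPT/blob/main/"
               "deploy/docker/docker-compose-pgvector.yml")
CONFIG_URL = ("https://raw.githubusercontent.com/labring/FastGPT/"
              "refs/heads/main/projects/app/data/config.json")


def blob_to_raw(url: str) -> str:
    # https://github.com/<owner>/<repo>/blob/<ref>/<path>
    #   -> https://raw.githubusercontent.com/<owner>/<repo>/<ref>/<path>
    prefix = "https://github.com/"
    if not url.startswith(prefix) or "/blob/" not in url:
        return url  # already a raw link (or not a GitHub blob link)
    owner_repo, rest = url[len(prefix):].split("/blob/", 1)
    return f"https://raw.githubusercontent.com/{owner_repo}/{rest}"


def download_all(target_dir: str = ".") -> list[Path]:
    # Save the compose file as docker-compose.yml so that a plain
    # `docker-compose up -d` in this directory picks it up by default.
    plan = {
        "docker-compose.yml": blob_to_raw(COMPOSE_URL),
        "config.json": CONFIG_URL,
    }
    saved = []
    for name, url in plan.items():
        dest = Path(target_dir) / name
        urllib.request.urlretrieve(url, dest)
        saved.append(dest)
    return saved


if __name__ == "__main__":
    for path in download_all():
        print("saved", path)
```

If GitHub is unreachable, the same function works with mirror URLs substituted for the two constants.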

Note: If you cannot access GitHub directly, obtain the files another way or use a domestic mirror.

The following steps apply to both new deployments and upgrades:

- If you are upgrading, be sure to back up your old docker-compose.yml and the associated data volumes.

- Place the latest docker-compose.yml and config.json files in the same directory (save docker-compose-pgvector.yml as docker-compose.yml so that docker-compose finds it by default).
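For the backup step, a small sketch that keeps timestamped copies of the two files is shown below. It covers only docker-compose.yml and config.json; the Docker data volumes (databases, uploaded files) need their own backup, which this sketch does not attempt. The `.bak-<timestamp>` naming is an arbitrary choice.

```python
import shutil
import time
from pathlib import Path


def backup_files(directory: str,
                 names=("docker-compose.yml", "config.json")) -> list[Path]:
    # Copy each existing file to <name>.bak-<timestamp> in the same directory.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    backups = []
    for name in names:
        src = Path(directory) / name
        if src.exists():
            dest = src.with_name(f"{name}.bak-{stamp}")
            shutil.copy2(src, dest)  # copy2 also preserves file timestamps
            backups.append(dest)
    return backups


if __name__ == "__main__":
    for path in backup_files("."):
        print("backed up to", path)
```

Missing files are skipped rather than treated as errors, so the same call works for a fresh deployment where there is nothing to back up.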

In config.json, pay special attention to the mcp.server.host configuration item.

- If both FastGPT and n8n are deployed in a local Docker environment, set mcp.server.host to this machine's local IP address; the port is usually fixed at 3005.

- If FastGPT is deployed on a cloud server, set it to the server's public IP address or domain name.

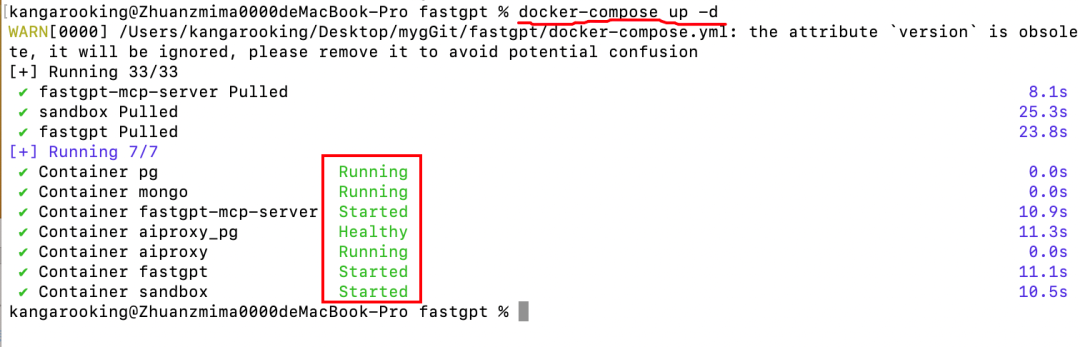
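The same edit can be scripted. This is a sketch under two assumptions: that your config.json parses as plain JSON (if your copy contains comments, edit it by hand instead), and that the value is written as `http://<ip>:3005`; check the exact format your FastGPT version expects. A LAN IP is used for local Docker setups because 127.0.0.1 inside a container refers to that container itself, so an address built from it would likely not be reachable from another container such as n8n.

```python
import json
from pathlib import Path


def set_mcp_host(config_path: str, host: str, port: int = 3005) -> str:
    path = Path(config_path)
    data = json.loads(path.read_text(encoding="utf-8"))
    value = f"http://{host}:{port}"  # assumed format: scheme + host + port
    # Create the nested "mcp" -> "server" objects if they are missing.
    data.setdefault("mcp", {}).setdefault("server", {})["host"] = value
    # Note: rewriting the file normalizes its indentation.
    path.write_text(json.dumps(data, indent=2, ensure_ascii=False) + "\n",
                    encoding="utf-8")
    return value


if __name__ == "__main__":
    # Local Docker: the machine's LAN IP (hypothetical value below).
    # Cloud server: pass the public IP or domain name instead.
    print(set_mcp_host("config.json", "192.168.1.10"))
```

Other keys in the file are left untouched; only `mcp.server.host` is set.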

After confirming that the configuration is correct, open a terminal or command line in the file directory and execute the following command:

docker-compose up -d

When the log output resembles the figure and all containers are running normally, the deployment or upgrade has succeeded.
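Besides reading the logs, you can check that the expected ports accept connections. This sketch assumes the default ports used in this article, 3000 for the FastGPT web UI and 3005 for the MCP server, on the local machine. A successful TCP connection only shows that something is listening, not that the service is fully healthy.

```python
import socket
import time


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    # True if a TCP connection to host:port succeeds within `timeout` seconds.
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for(host: str, port: int, total: float = 30.0,
             interval: float = 1.0) -> bool:
    # Poll until the port opens or `total` seconds have passed.
    deadline = time.monotonic() + total
    while time.monotonic() < deadline:
        if port_open(host, port):
            return True
        time.sleep(interval)
    return port_open(host, port)


if __name__ == "__main__":
    for name, port in (("FastGPT web", 3000), ("MCP server", 3005)):
        status = "up" if wait_for("127.0.0.1", port) else "not reachable"
        print(name, status)
```

Polling with a deadline is useful right after `docker-compose up -d`, since containers may need some seconds before they start listening.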

Tip: If you have trouble pulling Docker images, edit the docker-compose.yml file and replace the official image addresses with those of a domestic mirror registry (e.g., Alibaba Cloud).

Creating an MCP Service in FastGPT

After successfully deploying or upgrading FastGPT, access the FastGPT web interface via a browser (default is http://127.0.0.1:3000).

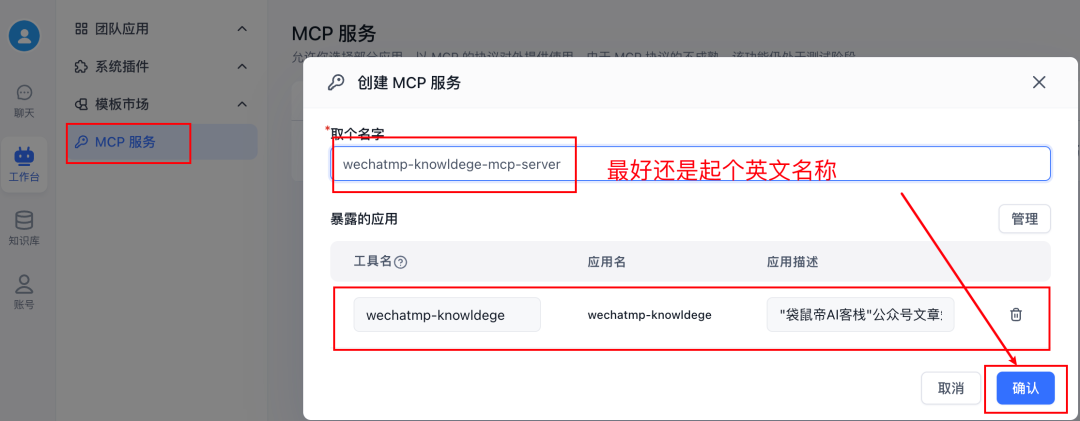

Navigate to Workbench -> MCP Service -> New Service. This is where you create an MCP-Server that exposes FastGPT's capabilities to the outside world.

After creating the service, click Manage to add "tools" to this MCP service. These tools can be applications created in FastGPT, such as Bots and Workflows.

There is a key issue here: it does not appear possible to add a FastGPT "knowledge base" to the MCP service directly as a standalone tool. If a Bot connected to a knowledge base is added to the MCP service as a tool instead, then when n8n calls it through MCP, it may receive content that has been processed (e.g., summarized or rewritten) by the LLM inside the Bot, rather than the original chunks retrieved from the knowledge base. This may not be what you want, especially when the original text must be quoted precisely.

A clever workaround is to use FastGPT's Workflow feature: create a simple workflow whose core function is to search the knowledge base and return the results in a specified format.

The specific steps are as follows:

- Create a new workflow in FastGPT.

- Add only a Knowledge Base Search node and a Designated Response node to the workflow (plus other logic nodes if necessary).

- Configure the Knowledge Base Search node: select the target knowledge base and set the relevant parameters (e.g., minimum relevance, maximum number of citations).

- Configure the Designated Response node to determine how the retrieval results are output (e.g., output the original text chunks directly).

- Click Save and Publish in the upper-right corner.

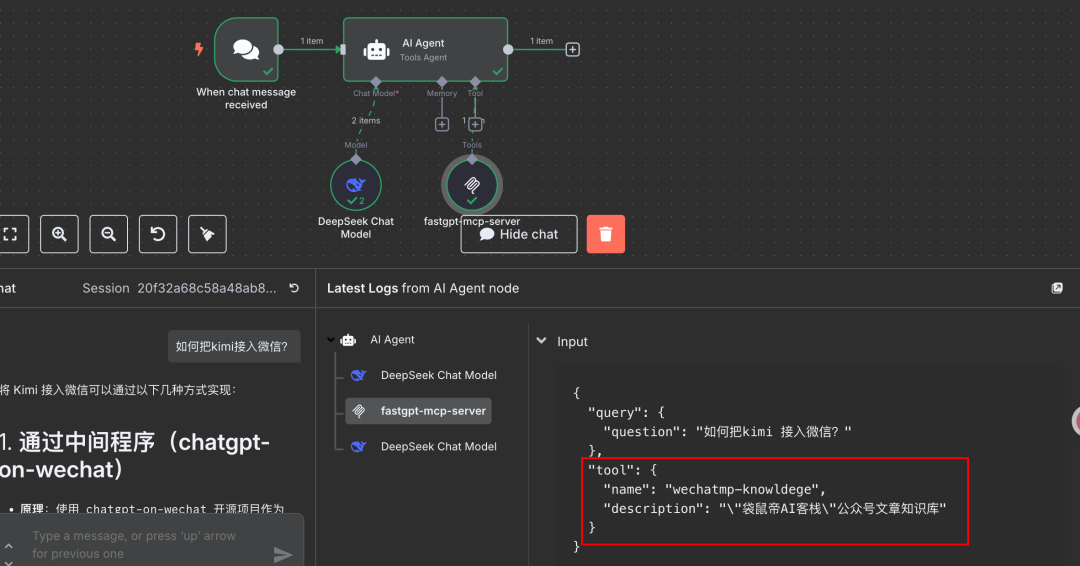
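Conceptually, the two-node workflow filters and formats retrieved chunks without any LLM in between. The sketch below models that behavior under stated assumptions: hits arrive as (score, text) pairs, and `min_score` and `max_hits` are hypothetical stand-ins for the node's minimum relevance and citation limit (FastGPT may express its limit differently, for example as a token budget). It is not FastGPT's implementation.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    score: float  # relevance score from the knowledge base search
    text: str     # original chunk text


def knowledge_base_search(hits: list[Hit], min_score: float = 0.5,
                          max_hits: int = 3) -> list[Hit]:
    # Keep sufficiently relevant chunks, best first, up to the limit.
    kept = [h for h in hits if h.score >= min_score]
    kept.sort(key=lambda h: h.score, reverse=True)
    return kept[:max_hits]


def designated_response(hits: list[Hit]) -> str:
    # Return the chunks verbatim instead of an LLM summary, which is the
    # reason for using a workflow rather than a Bot in this setup.
    if not hits:
        return "No relevant content found."
    return "\n\n".join(f"[{i}] {h.text}" for i, h in enumerate(hits, 1))


if __name__ == "__main__":
    hits = [Hit(0.9, "Chunk A"), Hit(0.3, "Chunk B"), Hit(0.7, "Chunk C")]
    print(designated_response(knowledge_base_search(hits)))
```

Numbering the chunks in the output keeps them distinguishable for the calling Agent when it quotes them.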

Important: Give the workflow an English name and be sure to fill in the application description. Both are displayed in n8n and help identify the tool.
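To see why the name and description matter, here is how a published workflow might appear to n8n as an MCP tool. The `name` / `description` / `inputSchema` fields follow the MCP tool definition; the concrete values and the `question` parameter are hypothetical. One plausible reason for the English-name advice is that many LLM providers restrict tool names; the lint below borrows the pattern OpenAI documents for function names (letters, digits, `_` and `-`, at most 64 characters) as an assumed rule.

```python
import re

tool = {
    "name": "kb_search",
    "description": "Searches the product knowledge base and returns "
                   "the original text chunks.",
    "inputSchema": {
        "type": "object",
        "properties": {"question": {"type": "string"}},
        "required": ["question"],
    },
}


def lint_tool(t: dict) -> list[str]:
    # Return a list of problems; an empty list means the metadata looks usable.
    problems = []
    if not re.fullmatch(r"[A-Za-z0-9_-]{1,64}", t.get("name", "")):
        problems.append("name should use English letters, digits, _ or -")
    if not t.get("description", "").strip():
        problems.append("description is empty; the agent cannot tell "
                        "what the tool does")
    return problems


if __name__ == "__main__":
    print(lint_tool(tool) or "ok")
```

The description is the only text the Agent's model sees when deciding whether to call the tool, so it is worth stating plainly what the tool returns.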

After completing workflow creation and publishing, go back to the MCP Services Management Interface.

- Create a new MCP service (or edit an existing one).

- Add the workflow you just created that contains the knowledge base search as a tool under this service.

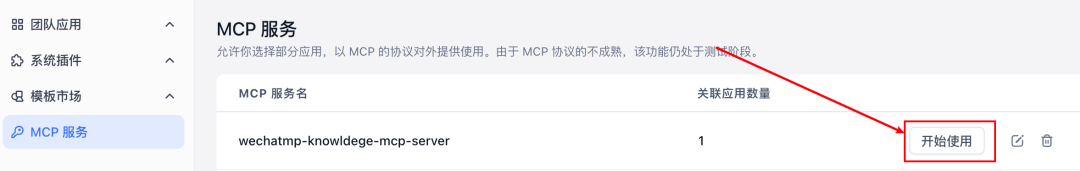

When you are done, click Start Using.

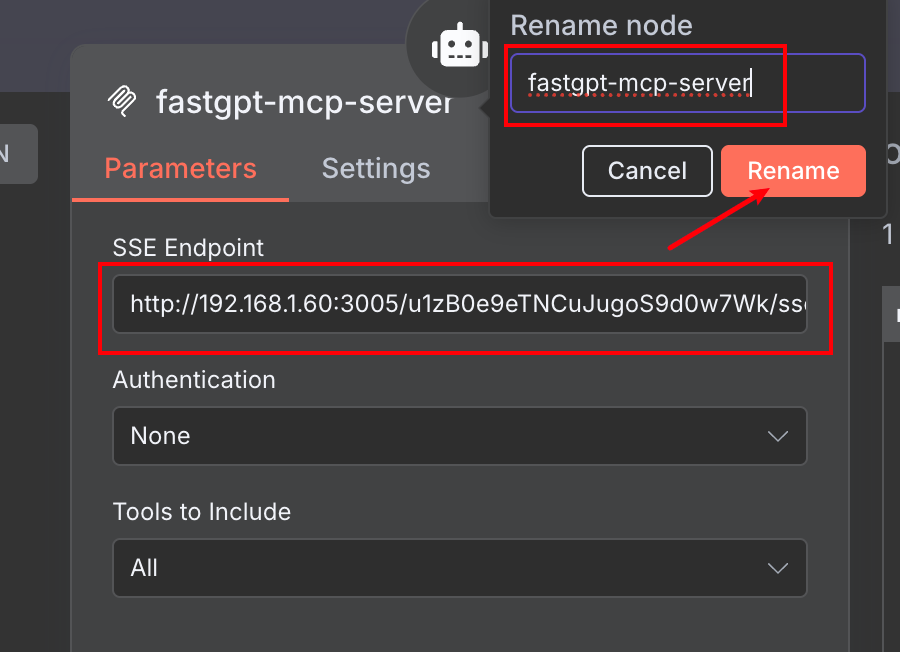

In the pop-up window, find the SSE column and copy the address provided. This address is the access point for the FastGPT MCP-Server you just created.
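The SSE address points to a Server-Sent Events stream. SSE framing (`field: value` lines, with a blank line ending each event) is defined by the HTML standard, and in MCP's HTTP+SSE transport the server's first event is typically `endpoint`, whose data is the URL the client POSTs JSON-RPC messages to. The sketch below is a minimal parser plus a helper that reads that first event; it is a debugging aid under these assumptions, not something n8n requires.

```python
import urllib.request
from typing import Iterable, Iterator, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    # Yield (event, data) pairs; a blank line terminates each event.
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)
            # "id" and "retry" fields are ignored in this sketch


def first_endpoint(sse_url: str, timeout: float = 10.0) -> str:
    # Open the SSE stream and return the data of the first "endpoint" event.
    with urllib.request.urlopen(sse_url, timeout=timeout) as resp:
        lines = (chunk.decode("utf-8") for chunk in resp)
        for event, data in parse_sse(lines):
            if event == "endpoint":
                return data
    raise RuntimeError("stream ended without an endpoint event")
```

Calling `first_endpoint` with the copied address is a quick way to confirm the MCP server is reachable from a given machine before configuring n8n.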

Accessing FastGPT MCP Services in n8n

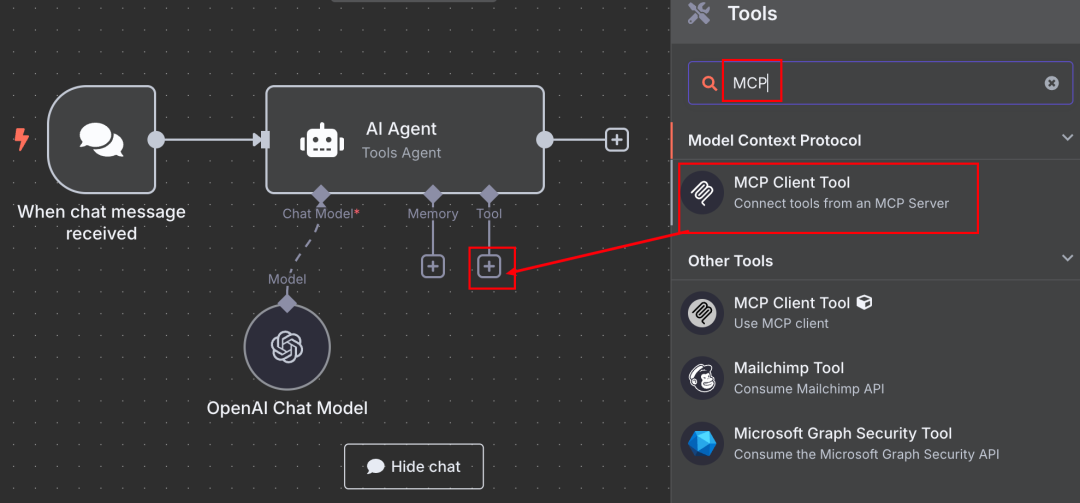

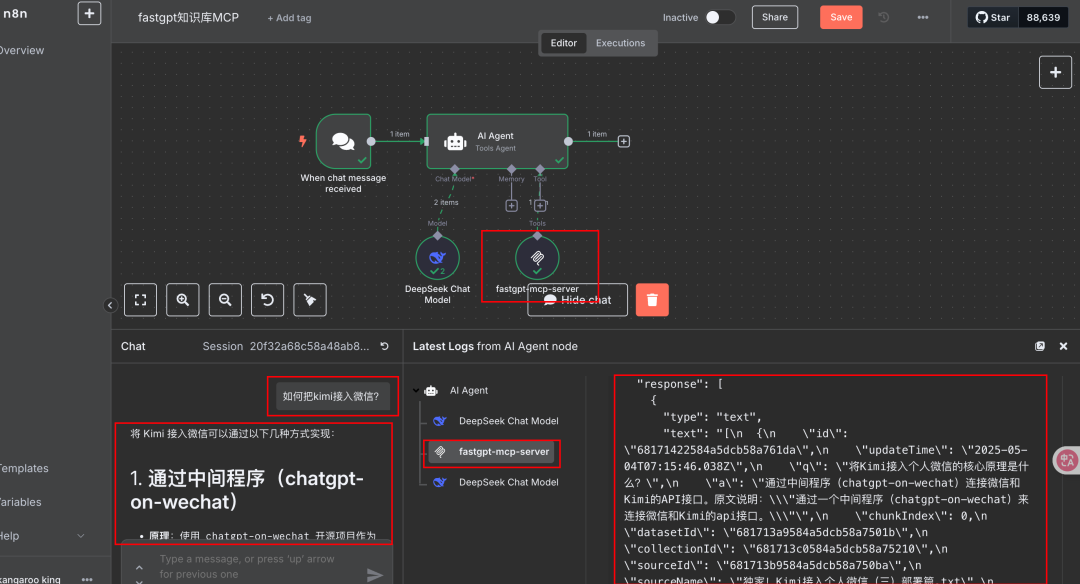

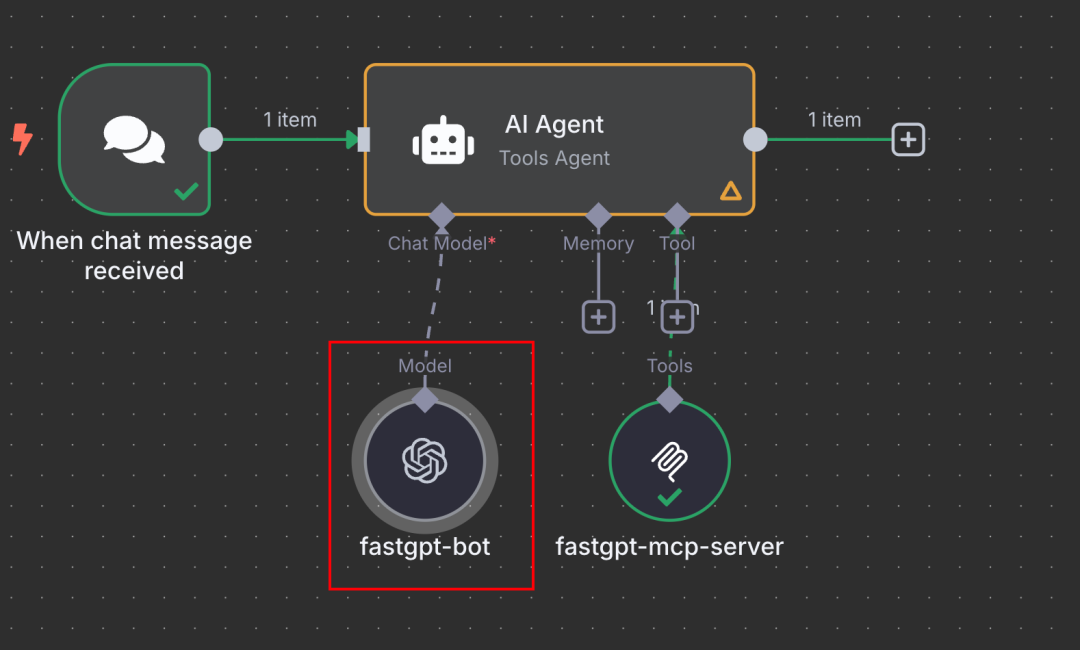

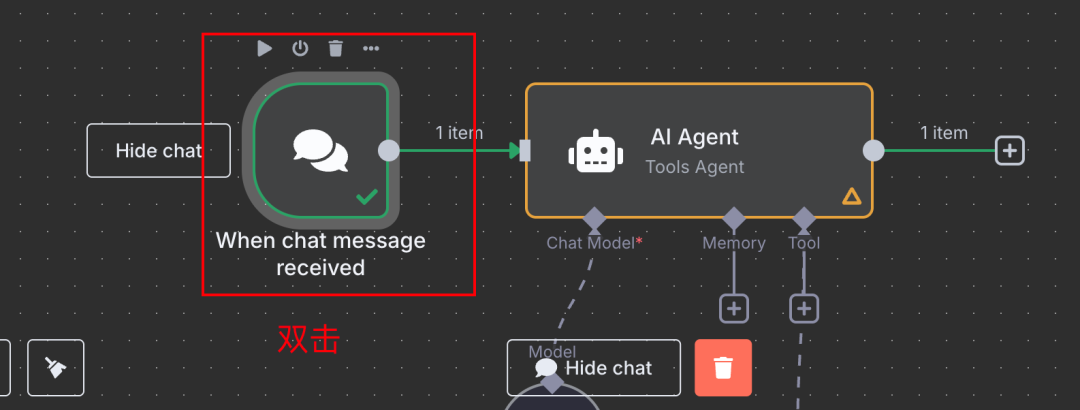

Now, switch to n8n's workflow editing screen.

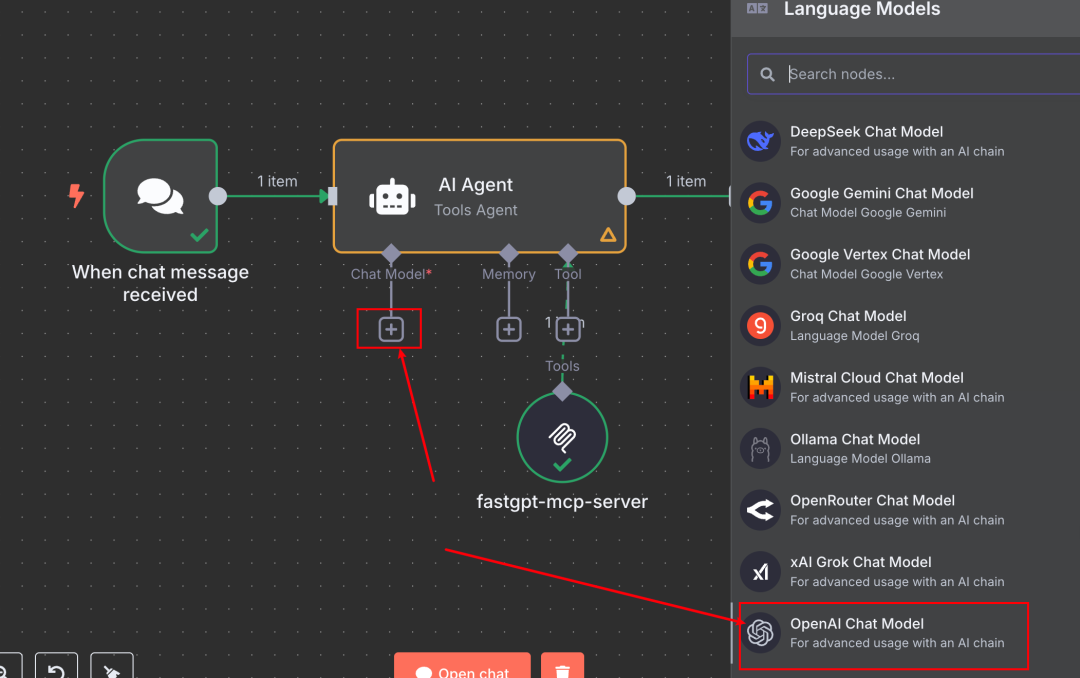

- Choose an appropriate node, for example an AI Agent node, and click the plus sign + in its Tool section.

- Search for and add the official MCP Client Tool node.

- In the MCP Client Tool configuration screen, paste the SSE address you copied earlier from FastGPT into the Server URL field.

- Optionally give this tool node a descriptive name (e.g., FastGPT Knowledge Base via MCP) so it is easy to reference in the Agent.

Once configured, you can test it in n8n's Chat dialog (or with another trigger). Ask a question that requires the knowledge base. If everything is configured correctly, you will see n8n invoke the FastGPT MCP service and retrieve relevant information from FastGPT's knowledge base as the basis for its answer.

As mentioned earlier, FastGPT's RAG knowledge base is generally considered quite effective among lightweight LLM application platforms.

In addition, you can see that the name and introduction set for the workflow in FastGPT are displayed as tool information on the n8n side to help the Agent understand and choose which tool to use.

Extensions and Alternative Integration Methods

Connecting the FastGPT knowledge base (wrapped in a workflow) to n8n via MCP is an efficient and flexible approach. Building on this, you can also:

- Package FastGPT plugins, or a Bot with a knowledge base, as an MCP service.

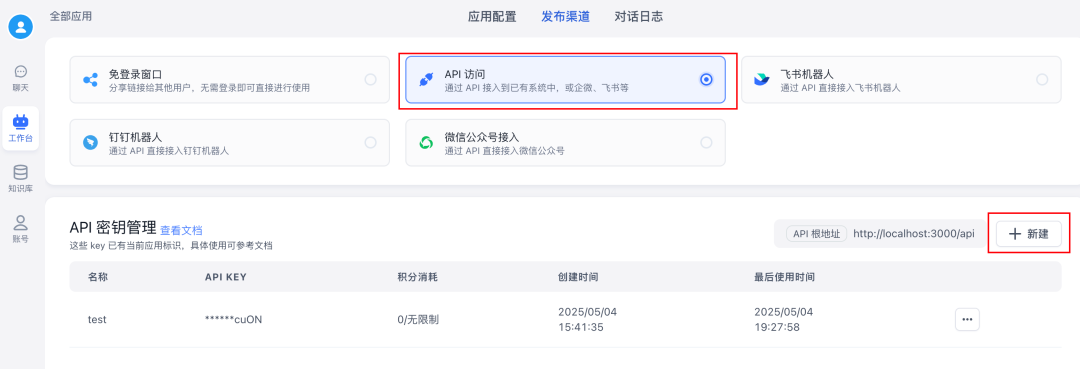

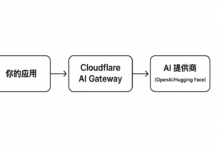

- Use FastGPT's API: FastGPT can publish a created application (e.g., a Bot with a knowledge base) as an interface compatible with the OpenAI API format.

This API approach can also be used to access the FastGPT knowledge base in n8n:

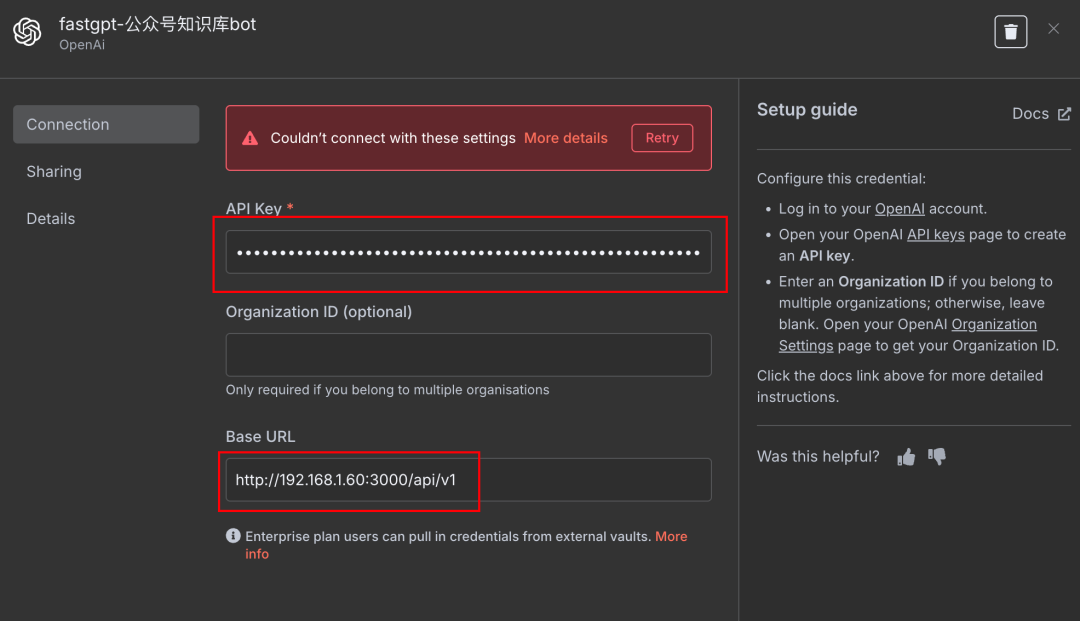

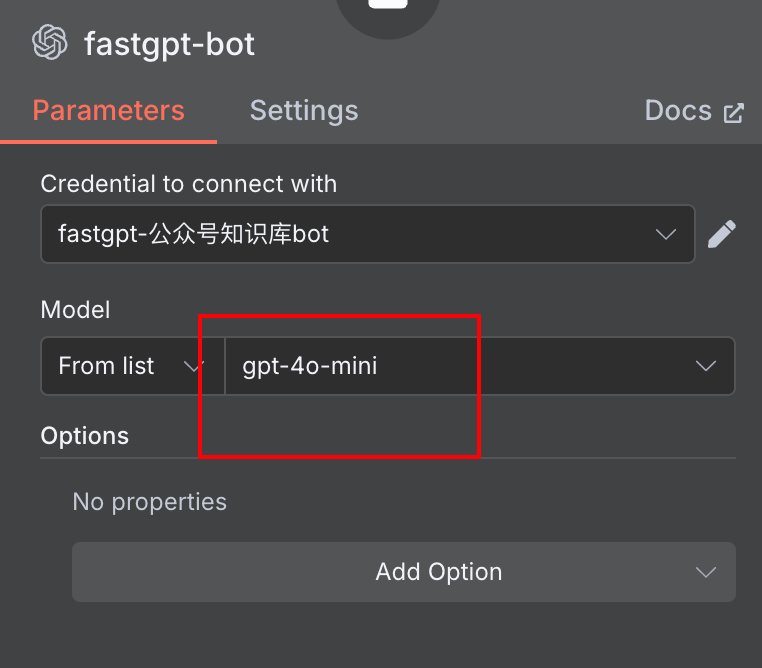

- In n8n's AI Agent node (or any other node that needs to invoke an LLM), add a new Chat Model.

- Select the OpenAI Compatible Chat Model type.

- Configure the credentials:

  - Base URL: the API address provided by FastGPT.
  - API Key: the API key of the corresponding FastGPT application.
  - Model Name: any value works; the model actually used is the one configured in the FastGPT Bot.

In this way, n8n sends requests to FastGPT's API endpoint, and FastGPT processes them and returns the result. This amounts to calling the FastGPT Bot (and its knowledge base) as an external LLM service.
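Outside n8n, the same endpoint can be called directly, which helps when debugging credentials. This sketch assumes a base URL of the form `http://<host>:3000/api/v1` and an app-specific key; both values below are placeholders to replace with the ones FastGPT shows you. The `model` value is effectively ignored, as described above, because the Bot's own configuration applies.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:3000/api/v1"  # hypothetical; use your own
API_KEY = "fastgpt-xxxxxx"  # placeholder for the app's API key


def build_request(question: str, base_url: str = BASE_URL,
                  api_key: str = API_KEY) -> urllib.request.Request:
    # OpenAI-style chat completion body; "model" is a dummy value here.
    body = {
        "model": "any-name",
        "stream": False,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str) -> str:
    with urllib.request.urlopen(build_request(question)) as resp:
        payload = json.load(resp)
    # OpenAI-style response shape: choices[0].message.content
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask("What does the knowledge base say about MCP?"))
```

If this call works but n8n does not, the problem is likely in the n8n credential settings rather than in FastGPT.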

Compare the MCP and API approaches:

- MCP method:

- Pros: fits n8n's notion of "tools" more naturally and can be combined with other tools in an Agent; can carry richer metadata (e.g., tool descriptions).

- Cons: more setup steps (creating the workflow and configuring the MCP service).

- API method:

- Pros: relatively easy to set up, requiring only the API address and key; straightforward when FastGPT serves as a single Q&A engine.

- Cons: appears in n8n as a call to an external model rather than a composable "tool"; model settings (e.g., temperature, max_tokens) may need to be coordinated between n8n and FastGPT.

Developers can choose the appropriate integration method based on specific needs and preferences.

Publishing and Applications

Regardless of how the FastGPT knowledge base is integrated, it is easy to publish it for external use once you have finished debugging the n8n workflow.

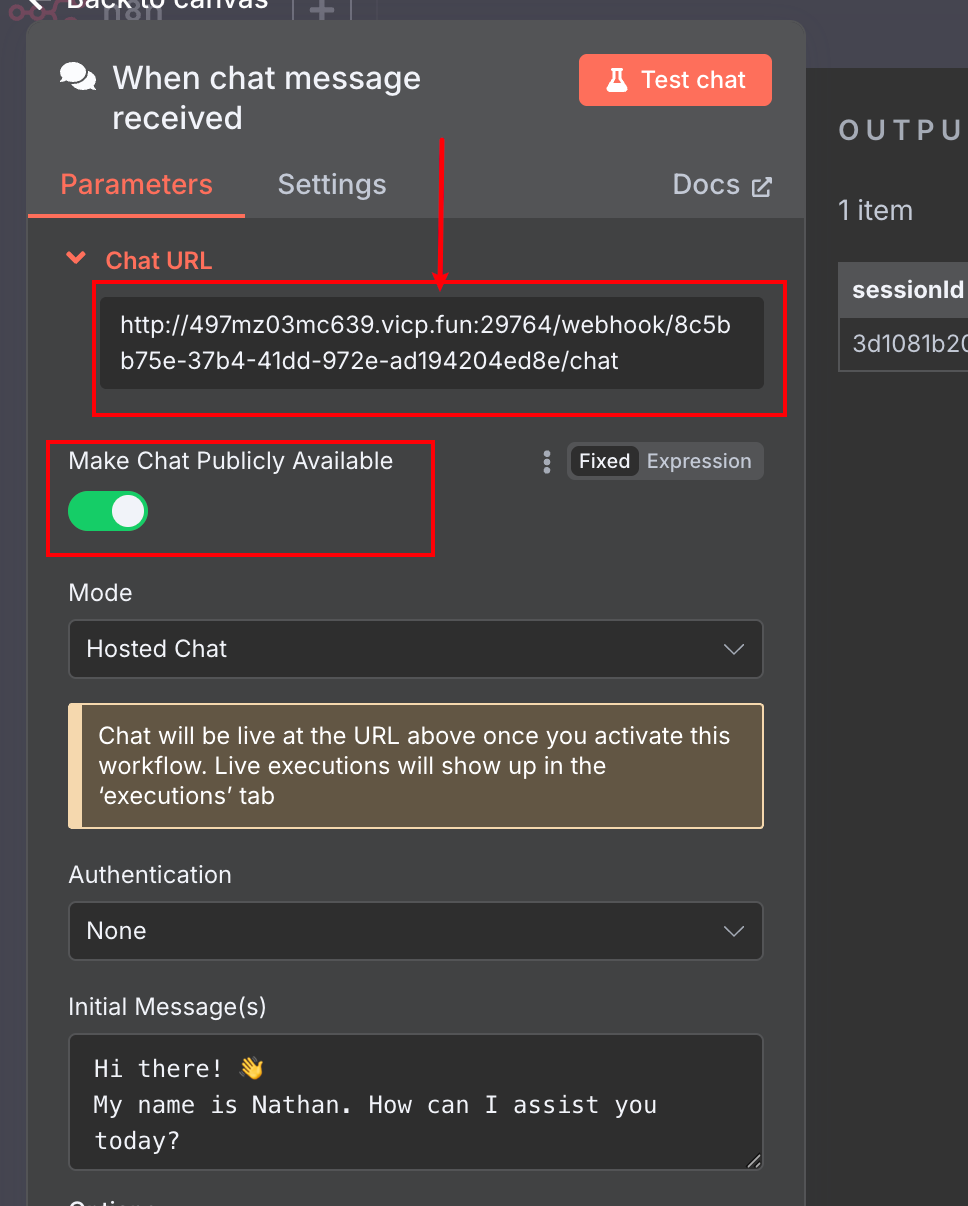

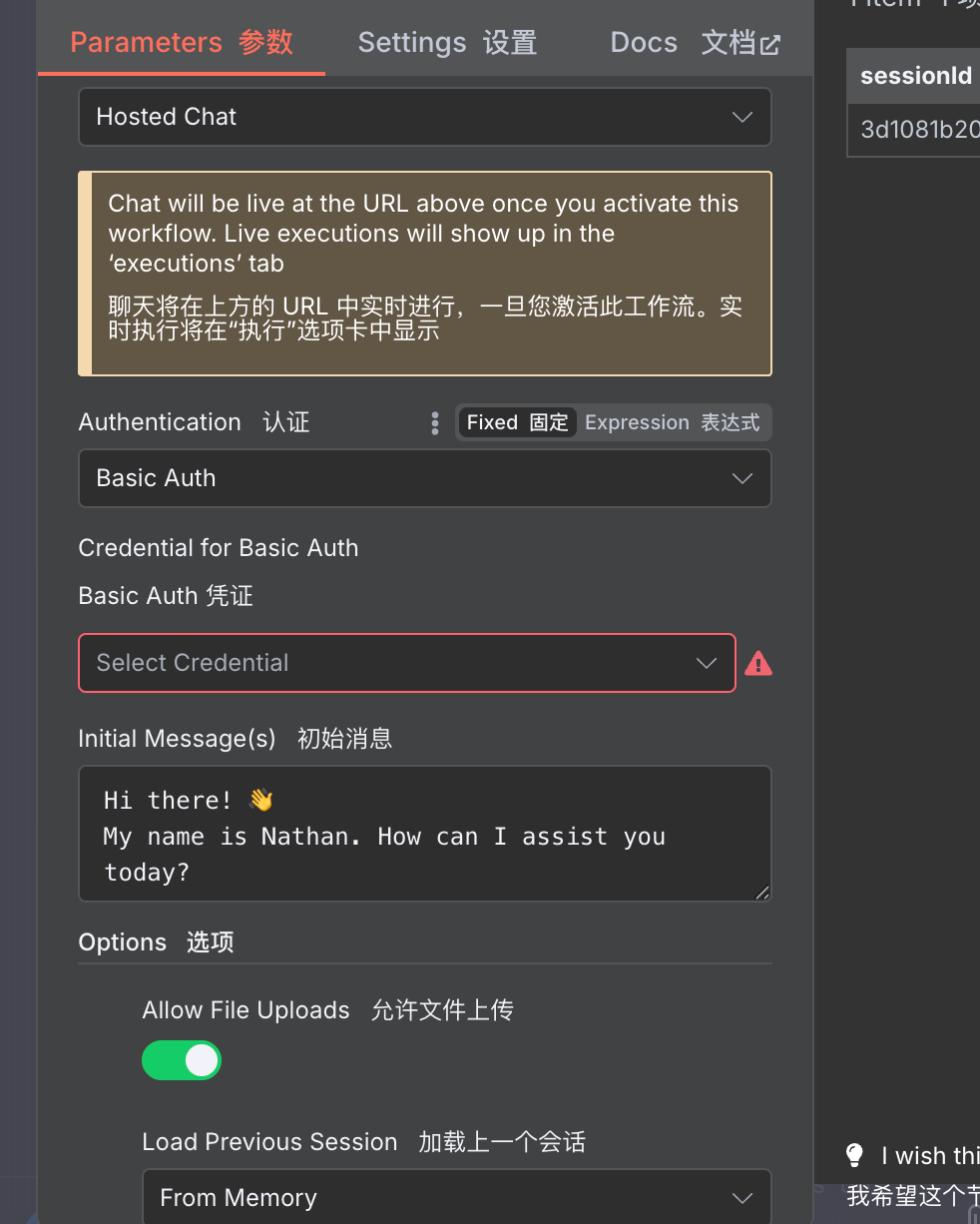

For example, using the Chat Message When used as a trigger node:

- double-click Chat Message Nodes.

- opens Make chat publicly available Options.

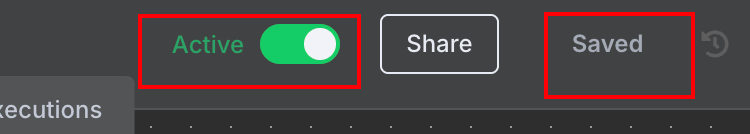

- The system generates a public Chat URL link, which you can copy to share.

- Don't forget. Save furthermore Activate Workflow.

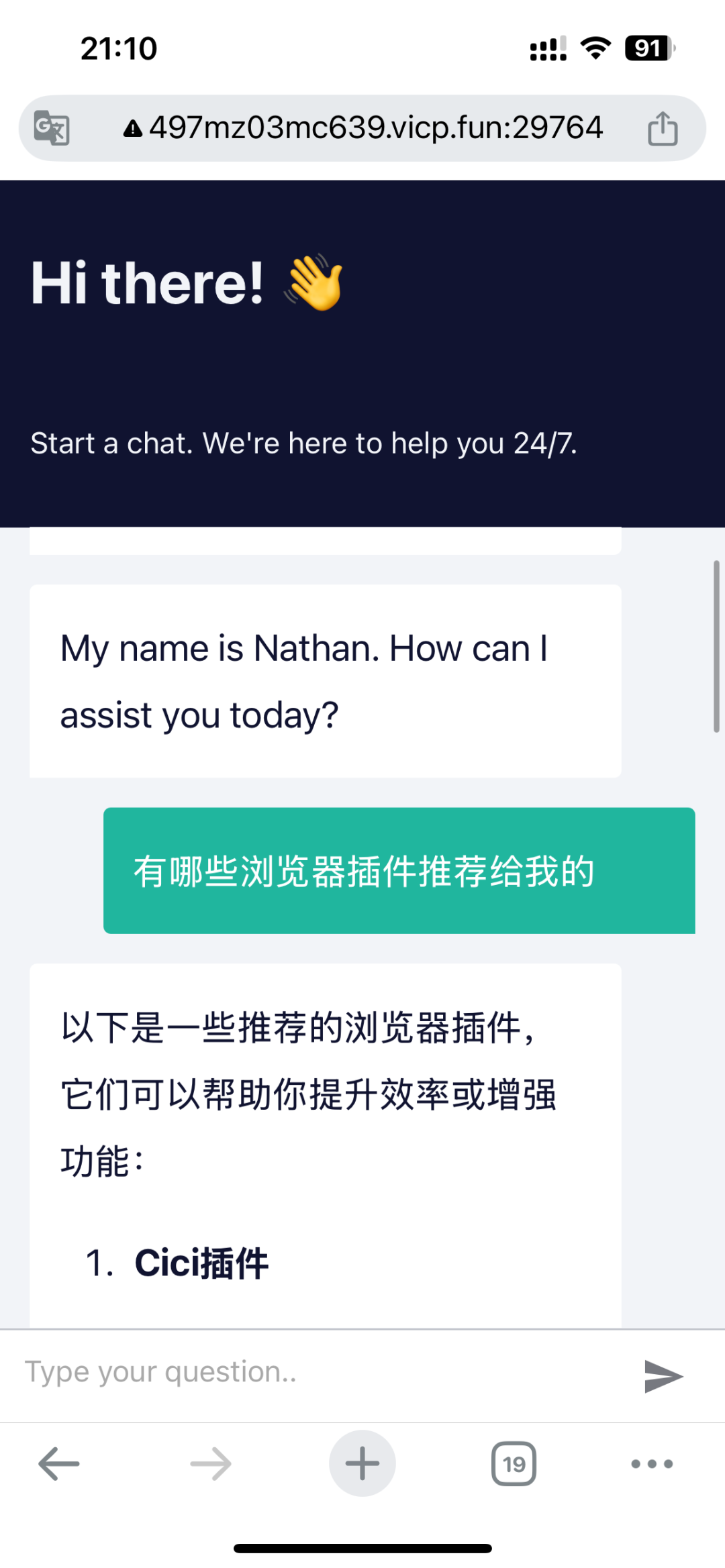

This public chat page can even be accessed and used in the browser of a mobile device.

It is worth mentioning that this public chat page provided by n8n also supports many customizations such as setting password access, allowing users to upload files, etc.

This makes n8n ideal for rapidly building MVP (Minimum Viable Product) prototypes, or for building efficiency-boosting AI workflows for individuals and teams.

Apart from the Chat Message node, many other n8n trigger nodes (e.g., Webhook) can also be exposed for public internet access, offering a great deal of freedom. If n8n is deployed locally, public access can be achieved with techniques such as intranet tunneling (NAT traversal).
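As an illustration of the Webhook route, the sketch below triggers a workflow from a script. The webhook path `fastgpt-ask` and the JSON body are hypothetical, the Webhook node is assumed to be set to accept POST, and the exact Test and Production URLs should be copied from the node itself (by default they live under `/webhook-test/` and `/webhook/`).

```python
import json
import urllib.request


def webhook_url(base: str, path: str, test: bool = False) -> str:
    # Test URLs only work while the workflow is listening in the editor;
    # production URLs require the workflow to be activated.
    prefix = "webhook-test" if test else "webhook"
    return f"{base.rstrip('/')}/{prefix}/{path.lstrip('/')}"


def trigger(base: str, path: str, payload: dict, test: bool = False) -> bytes:
    req = urllib.request.Request(
        webhook_url(base, path, test),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read()


if __name__ == "__main__":
    # Hypothetical host and path; replace with the URL shown in your node.
    print(trigger("https://n8n.example.com", "fastgpt-ask",
                  {"question": "What is MCP?"}))
```

When n8n runs locally behind a tunnel, only the `base` argument changes to the tunnel's public address.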

Concluding thoughts: n8n's value proposition

A common question when discussing low-code/no-code automation tools like these is: why not just implement these workflows in code? For developers familiar with programming, code seems more straightforward and controllable.

It's true that tools like n8n have a learning curve, and it may take some time to get started. However, once mastered, the efficiency of building and iterating workflows often far exceeds that of traditional code development, especially in scenarios involving the integration of multiple APIs and services. n8n's robust node ecosystem and community support can fulfill a wide range of automation needs, from the simple to the complex, and get ideas off the ground quickly.

Of course, for enterprise-level projects that require a high degree of customization, extreme performance, or involve complex underlying logic, pure code development is still necessary. But for a large number of automation scenarios, internal tool building, rapid prototyping and other needs, n8n and its similar tools provide a very efficient and flexible option. Integrating excellent AI applications like FastGPT through protocols like MCP further expands the boundaries of n8n's capabilities.