With the rapid development and wide application of large language model technology, its potential security risks have become a growing focus of industry attention. To address these challenges, leading technology companies, standardization bodies, and research institutes around the world have developed and released their own security frameworks. This article reviews nine representative large model security frameworks, aiming to provide a clear reference for practitioners in related fields.

Figure: Overview of Large Model Security Frameworks

Google's Secure AI Framework (SAIF) (released 2025.04)

Figure: Google SAIF framework structure

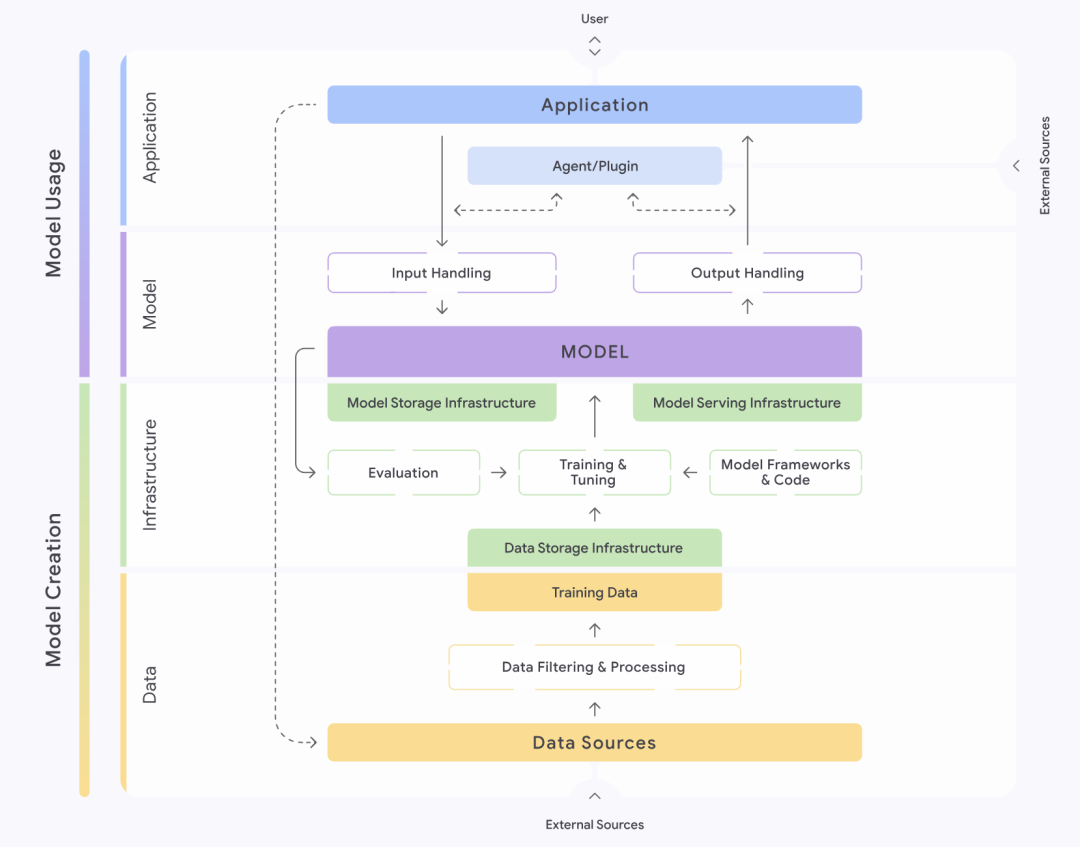

The Secure AI Framework (SAIF), introduced by Google, provides a structured approach to understanding and managing the security of AI systems. The framework divides an AI system into four layers: data, infrastructure, model, and application. Each layer is further broken down into components. The data layer, for example, contains data sources, data filtering and processing, and training data. SAIF emphasizes that each of these components carries its own specific risks.

Looking across the complete lifecycle of an AI system, SAIF identifies fifteen major risks: data poisoning, unauthorized access to training data, model source tampering, excessive data handling, model exfiltration, model deployment tampering, model denial of service, model reverse engineering, insecure integrated components, prompt injection, model evasion, sensitive data disclosure, inference of sensitive data, insecure model output, and rogue actions. In response to these fifteen risks, SAIF proposes fifteen corresponding controls, which constitute its core security guidance.

OWASP's Top 10 Security Threats for Large Model Applications (released 2025.03)

Figure: OWASP Top 10 Security Threats for Large Model Applications

The Open Worldwide Application Security Project (OWASP), a major force in cybersecurity, has released its signature Top 10 list of security threats for large model applications. OWASP decomposes a large model application into several key "trust domains," including the large model service itself, third-party functions, plug-ins, private databases, and external training data. It identifies security threats both in the interactions between these trust domains and within each domain.

OWASP's ten most significant security threats are, in order of impact: Prompt Injection, Sensitive Information Disclosure, Supply Chain Risks, Data and Model Poisoning, Improper Output Handling, Excessive Agency, System Prompt Leakage, Vector and Embedding Weaknesses, Misinformation, and Unbounded Consumption. For each of these threats, OWASP offers recommendations for prevention and mitigation, providing practical guidance for developers and security personnel.
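For teams that tag review findings against this list, the ten entries can be encoded as a small lookup table. The following is a minimal Python sketch, not an official OWASP artifact. It uses OWASP's English names and `LLM01` to `LLM10` identifiers for the 2025 list; the `Finding` record and the `group_by_threat` helper are hypothetical names introduced only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

# OWASP Top 10 for LLM Applications (2025 list), in ranked order.
OWASP_LLM_TOP10 = {
    "LLM01": "Prompt Injection",
    "LLM02": "Sensitive Information Disclosure",
    "LLM03": "Supply Chain",
    "LLM04": "Data and Model Poisoning",
    "LLM05": "Improper Output Handling",
    "LLM06": "Excessive Agency",
    "LLM07": "System Prompt Leakage",
    "LLM08": "Vector and Embedding Weaknesses",
    "LLM09": "Misinformation",
    "LLM10": "Unbounded Consumption",
}


@dataclass(frozen=True)
class Finding:
    """Hypothetical record for one issue found during a security review."""
    title: str
    threat_id: str  # expected to be a key of OWASP_LLM_TOP10


def group_by_threat(findings):
    """Group finding titles under their threat name, keeping the list's ranking."""
    grouped = defaultdict(list)
    for finding in findings:
        if finding.threat_id not in OWASP_LLM_TOP10:
            raise ValueError(f"unknown threat id: {finding.threat_id}")
        grouped[OWASP_LLM_TOP10[finding.threat_id]].append(finding.title)
    # Emit threats in ranked order, skipping those with no findings.
    return {name: grouped[name] for name in OWASP_LLM_TOP10.values() if name in grouped}


findings = [
    Finding("Model output rendered as raw HTML", "LLM05"),
    Finding("User input reaches the system prompt unescaped", "LLM01"),
    Finding("Indirect injection via a retrieved web page", "LLM01"),
]
report = group_by_threat(findings)
for threat, titles in report.items():
    print(f"{threat}: {len(titles)} finding(s)")
```

Keeping the table as a plain dictionary means the ranking is preserved by insertion order, so a report built from it lists the higher-impact threats first.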

OpenAI's Model Safety Framework (Continuously Updated)

Figure: OpenAI model security framework dimensions

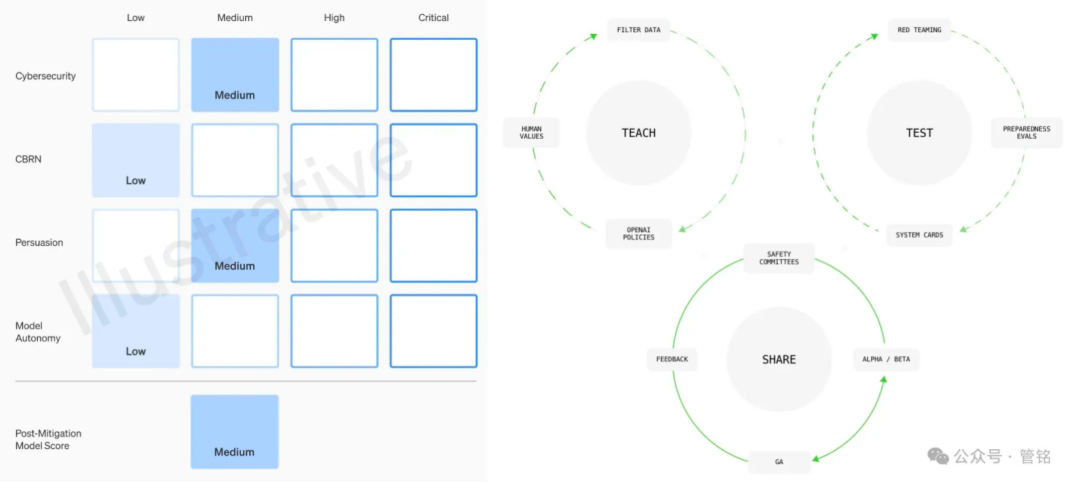

As a leader in large model technology, OpenAI places a high priority on the safety of its models. Its model safety framework evaluates four dimensions: chemical, biological, radiological, and nuclear (CBRN) risk; cyberattack capability; persuasion (the model's ability to influence human opinions and behavior); and model autonomy. For each dimension, OpenAI rates the risk as low, medium, high, or critical according to the degree of potential harm. Before each model release, a detailed safety assessment based on this framework, known as a System Card, must be produced.

In addition, OpenAI proposes a governance process with three phases: values alignment, adversarial evaluation, and control iteration. In the values alignment phase, OpenAI formulates a set of model behavior norms consistent with universal human values and uses them to guide data cleaning throughout model training. In the adversarial evaluation phase, OpenAI builds specialized test cases, tests the model thoroughly both before and after protective measures are applied, and produces the System Card. In the control iteration phase, OpenAI rolls out deployed models in stages and continues to add and refine protective measures.
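The four-dimension, four-level rating can be pictured as a small scorecard. The Python sketch below is illustrative only and is not OpenAI's implementation. It makes two assumptions that the text above does not state: that a model's overall rating is the highest rating across the four dimensions, and that deployment is gated at a configurable threshold (set to medium here purely as an example). The enum names the top level `CRITICAL`, and the names `RiskLevel`, `overall_risk`, and `may_deploy` are hypothetical.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Four risk levels, ordered so that comparisons follow severity."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# The four tracked dimensions described in the text.
CATEGORIES = ("cbrn", "cybersecurity", "persuasion", "model_autonomy")


def overall_risk(scorecard):
    """Assumption: the overall rating is the worst rating of any dimension."""
    missing = set(CATEGORIES) - set(scorecard)
    if missing:
        raise ValueError(f"scorecard is missing categories: {sorted(missing)}")
    return max(scorecard[category] for category in CATEGORIES)


def may_deploy(scorecard, threshold=RiskLevel.MEDIUM):
    """Assumption: deployment is allowed only at or below the threshold."""
    return overall_risk(scorecard) <= threshold


scorecard = {
    "cbrn": RiskLevel.LOW,
    "cybersecurity": RiskLevel.MEDIUM,
    "persuasion": RiskLevel.MEDIUM,
    "model_autonomy": RiskLevel.LOW,
}
print(overall_risk(scorecard).name, may_deploy(scorecard))
```

Taking the maximum rather than an average reflects the idea that a single severe capability should not be diluted by low ratings elsewhere.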

Artificial Intelligence Safety Governance Framework of the National Cybersecurity Standardization Technical Committee (released 2024.09)

Figure: National Cybersecurity Standardization Technical Committee Artificial Intelligence Safety Governance Framework

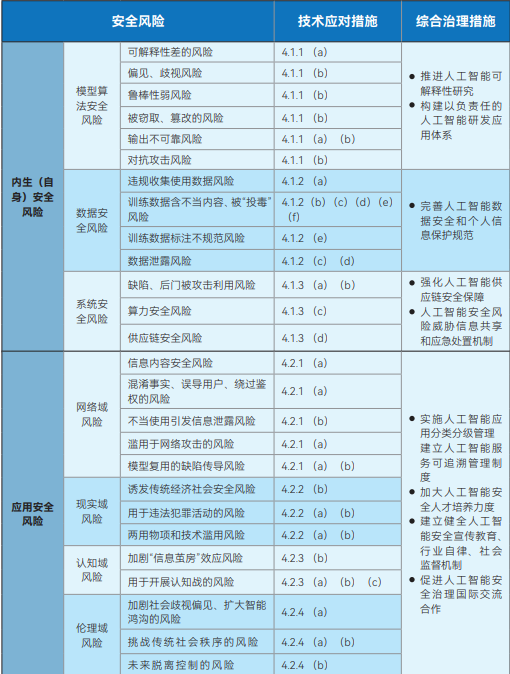

The Artificial Intelligence Safety Governance Framework, released by the National Cybersecurity Standardization Technical Committee (TC260), aims to provide macro-level guidance for the safe development of AI. The framework divides AI safety risks into two main categories: inherent (endogenous) safety risks and application safety risks. Inherent safety risks are those that arise from the model itself, and mainly comprise model algorithm risks, data risks, and system risks. Application safety risks are those that arise when the model is put to use, and are further subdivided into four domains: cyberspace, the real world, the cognitive domain, and the ethical domain.

In response to these risks, the framework states that model algorithm developers, service providers, system users, and other relevant parties should take technical countermeasures across training data, computing facilities, model algorithms, products and services, and application scenarios. The framework also advocates establishing and improving a comprehensive AI safety risk governance system that involves technology research and development institutions, service providers, users, government departments, industry associations, and social organizations.
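The two-level risk taxonomy of this framework maps naturally onto a nested structure. The following Python sketch only restates the categories named above; the key names (`inherent`, `application`, and the sub-risk labels) and the `top_level_category` helper are hypothetical choices made for illustration, not identifiers from the framework.

```python
# Two top-level categories, each with the sub-risks listed in the text.
RISK_TAXONOMY = {
    "inherent": ("model algorithm", "data", "system"),
    "application": ("cyberspace", "real world", "cognitive", "ethical"),
}


def top_level_category(sub_risk):
    """Return the top-level category that a given sub-risk belongs to."""
    for category, sub_risks in RISK_TAXONOMY.items():
        if sub_risk in sub_risks:
            return category
    raise KeyError(f"unknown sub-risk: {sub_risk}")


print(top_level_category("data"))
print(top_level_category("cognitive"))
```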

National Cybersecurity Standardization Technical Committee's Artificial Intelligence Security Standard System V1.0 (released 2025.01)

Figure: National Cybersecurity Standardization Technical Committee Artificial Intelligence Security Standard System V1.0

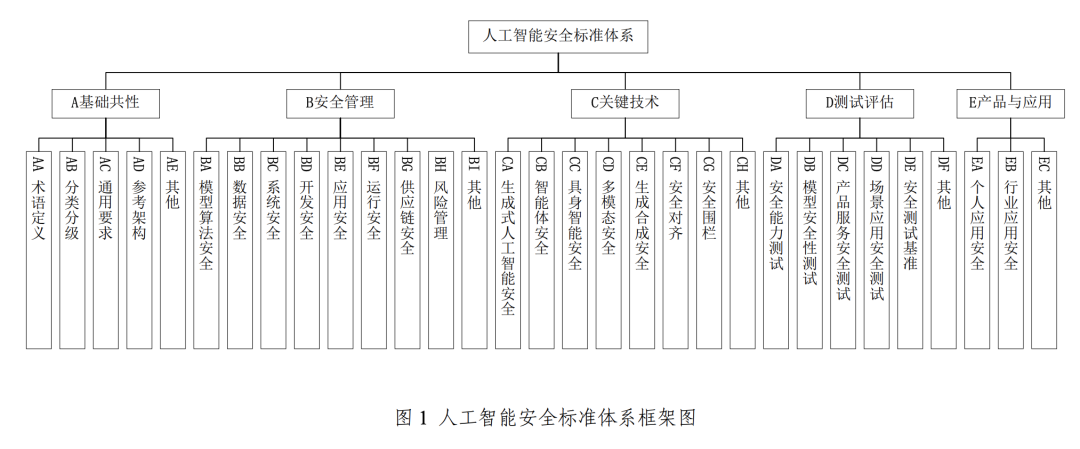

To support and implement the Artificial Intelligence Safety Governance Framework described above, the National Cybersecurity Standardization Technical Committee further released the Artificial Intelligence Security Standard System V1.0. It systematically organizes the key standards that can help prevent and mitigate the relevant AI security risks, and it focuses on aligning effectively with the existing national cybersecurity standard system.

The standard system consists of five core parts: basic common standards, security management, key technologies, testing and evaluation, and products and applications. The security management part covers model algorithm security, data security, system security, development security, application security, operation security, and supply chain security. The key technologies part focuses on frontier areas: generative AI security, agent security, embodied intelligence security (AI with a physical body, such as robots, whose security involves interaction with the physical world), multimodal security, generated and synthetic content security, safety alignment, and safety guardrails.

Big Model Security Practices 2024 by Tsinghua University, Zhongguancun Lab and Ant Group (released 2024.11)

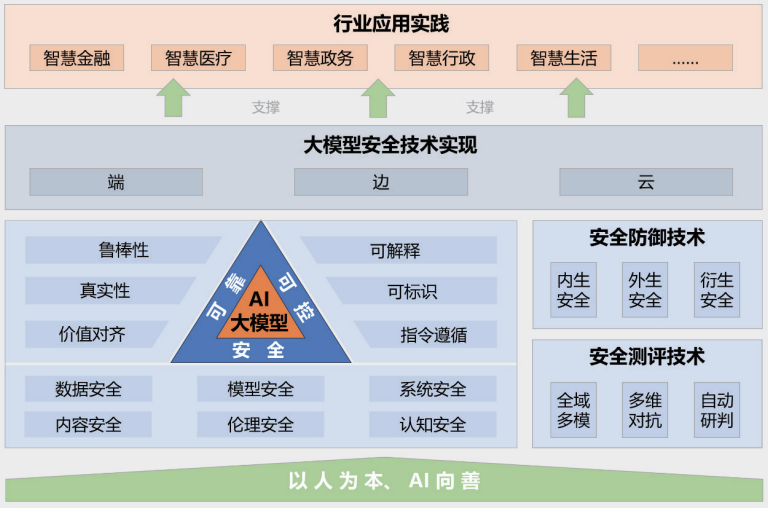

Figure: Big Model Security Practices 2024 Framework

The report "Big Model Security Practices 2024," jointly released by Tsinghua University, Zhongguancun Lab, and Ant Group, examines large model security from the combined perspective of industry, academia, and research. The security framework proposed in the report has five main parts: the guiding principle of "people-oriented, AI for good"; a safe, reliable, and controllable large model security technology system; security evaluation and defense technologies; collaborative security implementations across device, edge, and cloud; and application and practice cases from multiple industries.

The report describes in detail the many risks and challenges that large models currently face: data leakage, data theft, data poisoning, adversarial attacks, instruction attacks (in which carefully crafted instructions induce the model to behave in unintended ways), model-stealing attacks, hardware security vulnerabilities, software security vulnerabilities, security problems in the framework itself, security risks introduced by external tools, generation of toxic content, dissemination of biased content, generation of false information, ideological risks, telecom fraud and identity theft, intellectual property and copyright infringement, an academic integrity crisis in education, and fairness issues caused by bias. In response to these risks, the report also proposes corresponding defense techniques.

Big Model Security Research Report by Alibaba Cloud and CAICT (released 2024.09)

Figure: Alibaba Cloud and CAICT Big Model Security Research Report Framework

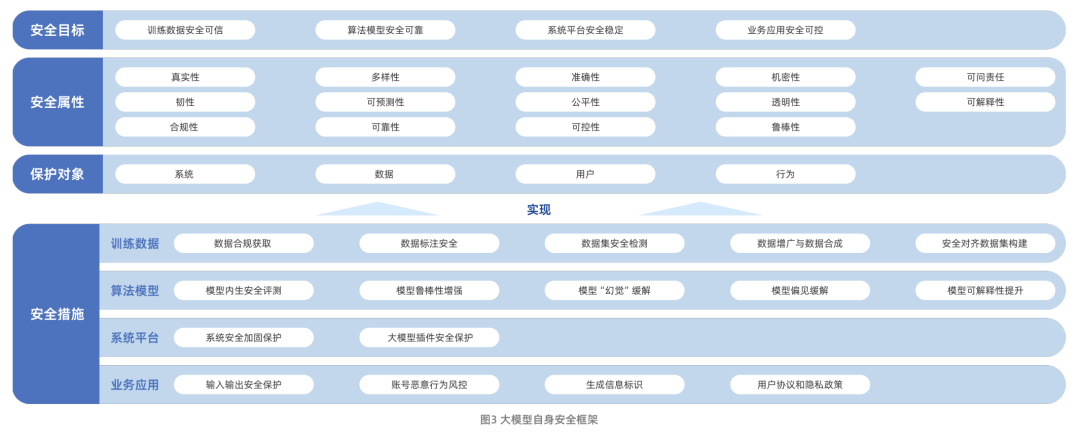

The Big Model Security Research Report, jointly released by Alibaba Cloud (Aliyun) and the China Academy of Information and Communications Technology (CAICT), systematically describes the evolution of large model technology and the security challenges it currently faces. These challenges mainly comprise data security risks, algorithm and model security risks, system platform security risks, and business application security risks. Notably, the report extends the scope of large model security research beyond the security of the model itself to the use of large model technology to strengthen traditional network security defenses.

For the security of the model itself, the report builds a framework with four dimensions: security goals, security attributes, protection objects, and security measures. The security measures are organized around four core areas (training data, model algorithms, system platforms, and business applications), reflecting an approach of all-round protection.

Tencent Research Institute's Big Model Security and Ethics Study 2024 (released 2024.01)

Figure: Tencent Research Institute's Big Model Security and Ethics Research Concerns

The report "Big Model Security and Ethics Research 2024," published by Tencent Research Institute, analyzes trends in large model technology and the opportunities and challenges these trends bring to the security industry. The report lists fifteen major risks, including data leakage, data poisoning, model tampering, supply chain poisoning, hardware vulnerabilities, component vulnerabilities, and platform vulnerabilities. It also shares four large model security best practices: prompt security assessment, blue-team attack-and-defense drills for large models, source code security protection for large models, and vulnerability protection for large model infrastructure.

The report also highlights progress and future trends in large model values alignment. It points out that a core topic of large model governance is how to ensure that the capabilities and behavior of large models align with human values, true intentions, and ethical principles, so that safety and trust are preserved when humans and AI work together.

360's Big Model Security Solution (Continuously Updated)

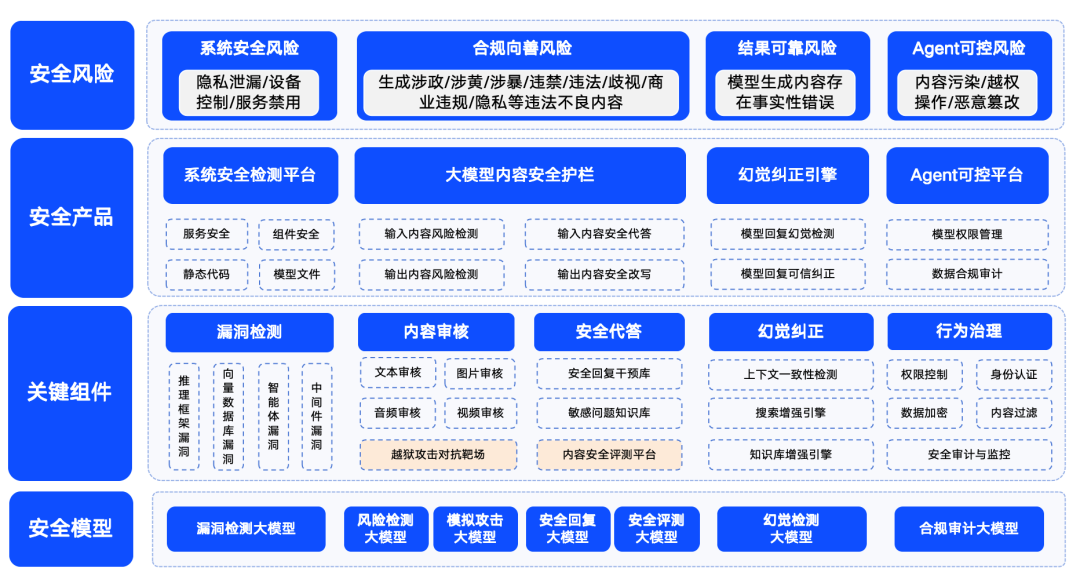

Figure: 360 Big Model Security Solution Schematic

Qihoo 360 is also active in the field of large model security and has put forward its own security solution. 360 groups the security risks of large models into four categories: system security, content security, trustworthiness, and controllability. System security mainly concerns the security of the various kinds of software in the large model ecosystem. Content security focuses on the compliance risk of input and output content. Trustworthiness focuses on the model's "hallucination" problem, in which the model generates information that seems plausible but is not true. Controllability deals with the more complex problem of securing agent workflows.

To ensure that large models can be applied across industries in a way that is safe, benevolent, trustworthy, and controllable, 360 has built a series of large model security products on its accumulated large model capabilities. These products include "360 Smart Forensics," which mainly detects vulnerabilities in the LLM ecosystem; "360 Smart Shield," which focuses on the content security of large models; and "360 Smart Search," which addresses trustworthiness. By combining these products, 360 has formed a relatively mature security solution for large models.