General Introduction

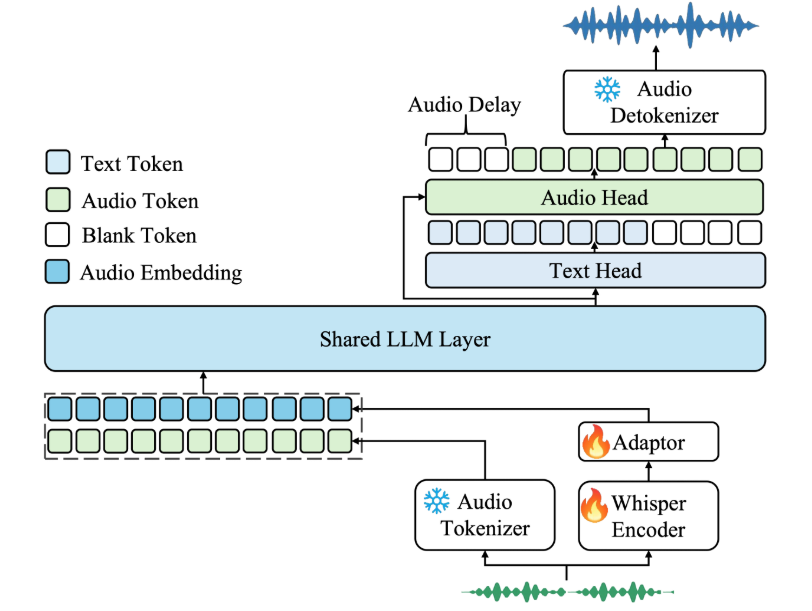

Kimi-Audio is an open source audio base model developed by Moonshot AI that focuses on audio understanding, generation and dialog. It supports a wide range of audio processing tasks such as speech recognition, audio Q&A, and speech emotion recognition. The model has been pre-trained on over 13 million hours of audio data and, combined with an innovative hybrid architecture, performs well in multiple audio benchmarks. kimi-Audio provides model weights, inference code, and an evaluation toolkit for developers to easily integrate into their research and applications. It is suitable for scenarios requiring efficient audio processing and dialog capabilities, with clear documentation, support for Docker deployment, an active community, and continuous updates.

Function List

- Speech Recognition (ASR): Converts audio to text and supports multi-language speech transcription.

- Audio Question and Answer (AQA): answering user questions based on the audio content and understanding the audio context.

- Audio Captioning (AAC): generates accurate captions or descriptions for audio.

- Speech Emotion Recognition (SER): analyzes emotional states in audio, such as happy or sad.

- Sound Event/Scene Classification (SEC/ASC): recognizes specific events or scenes in audio, such as car sounds or indoor environments.

- Text-to-Speech (TTS): converts text to natural speech, supports multiple tones.

- Voice Conversion (VC): Change the timbre or style of the voice to generate personalized audio.

- End-to-end voice dialog: supports continuous voice interaction to simulate natural conversation.

- Streaming Audio Generation: Low-latency audio generation through a chunked streaming decoder.

- Evaluation Toolkit: Provides a standardized evaluation tool that facilitates comparison of different model performances.

Using Help

Installation process

Docker is recommended for Kimi-Audio deployment to ensure a consistent environment and easy installation. Here are the detailed installation steps:

- clone warehouse

Run the following command in the terminal to get the code and submodules of Kimi-Audio:git clone https://github.com/MoonshotAI/Kimi-Audio.git cd Kimi-Audio git submodule update --init --recursive

- Installation of dependencies

Install the Python dependencies, Python 3.10 environment is recommended:pip install -r requirements.txtEnsure installation

torchcap (a poem)soundfileFor users with GPU support, you need to install the CUDA version of PyTorch. - Building a Docker Image

Build the Docker image in the Kimi-Audio directory:docker build -t kimi-audio:v0.1 .Or use an official pre-built image:

docker pull moonshotai/kimi-audio:v0.1 - Running containers

Start the Docker container and mount the local working directory:docker run -it -v $(pwd):/app kimi-audio:v0.1 bash - Download model weights

Kimi-Audio offers two main models:Kimi-Audio-7B(base model) andKimi-Audio-7B-Instruct(Instructions for fine-tuning the model). Downloaded from Hugging Face:moonshotai/Kimi-Audio-7B-Instruct: Suitable for direct use.moonshotai/Kimi-Audio-7B: Suitable for further fine-tuning.

Use the Hugging Face CLI to login and download:

huggingface-cli loginThe model is automatically downloaded to the specified path.

Usage

The core functions of Kimi-Audio are realized through Python API calls. The following is the detailed operation flow of the main functions:

1. Speech recognition (ASR)

audio file to text. Sample code:

import soundfile as sf

from kimia_infer.api.kimia import KimiAudio

# 加载模型

model_path = "moonshotai/Kimi-Audio-7B-Instruct"

model = KimiAudio(model_path=model_path, load_detokenizer=True)

# 设置采样参数

sampling_params = {

"audio_temperature": 0.8,

"audio_top_k": 10,

"text_temperature": 0.0,

"text_top_k": 5,

"audio_repetition_penalty": 1.0,

"audio_repetition_window_size": 64,

"text_repetition_penalty": 1.0,

"text_repetition_window_size": 16,

}

# 准备输入

asr_audio_path = "asr_example.wav" # 确保文件存在

messages_asr = [

{"role": "user", "message_type": "text", "content": "请转录以下音频:"},

{"role": "user", "message_type": "audio", "content": asr_audio_path}

]

# 生成文本输出

_, text_output = model.generate(messages_asr, **sampling_params, output_type="text")

print("转录结果:", text_output)

procedure::

- Prepare an audio file in WAV format.

- set up

messages_asrSpecify the task as transcription. - Run the code and get the text output.

2. Audio Quiz (AQA)

Answer the questions based on the audio. Example:

qa_audio_path = "qa_example.wav"

messages_qa = [

{"role": "user", "message_type": "text", "content": "音频中说了什么?"},

{"role": "user", "message_type": "audio", "content": qa_audio_path}

]

_, text_output = model.generate(messages_qa, **sampling_params, output_type="text")

print("回答:", text_output)

procedure::

- Upload an audio file containing information.

- exist

messages_qaSet specific questions in the - Get textual responses from the model.

3. Text-to-speech (TTS)

Converts text to speech output. Example:

messages_tts = [

{"role": "user", "message_type": "text", "content": "请将以下文本转为语音:你好,欢迎使用 Kimi-Audio!"}

]

audio_output, _ = model.generate(messages_tts, **sampling_params, output_type="audio")

sf.write("output.wav", audio_output, samplerate=16000)

procedure::

- Enter the text to be converted.

- set up

output_type="audio"Get audio data. - utilization

soundfileSave as WAV file.

4. End-to-end voice dialog

Supports continuous voice interaction. Example:

messages_conversation = [

{"role": "user", "message_type": "audio", "content": "conversation_example.wav"},

{"role": "user", "message_type": "text", "content": "请回复一段语音,介绍你的功能。"}

]

audio_output, text_output = model.generate(messages_conversation, **sampling_params, output_type="both")

sf.write("response.wav", audio_output, samplerate=16000)

print("文本回复:", text_output)

procedure::

- Provides initial voice input and text commands.

- set up

output_type="both"Get voice and text responses. - Save the audio output and view the text.

5. Utilization of the assessment toolkit

Courtesy of Kimi-Audio Kimi-Audio-Evalkit For model performance evaluation. Installation:

git clone https://github.com/MoonshotAI/Kimi-Audio-Evalkit.git

cd Kimi-Audio-Evalkit

pip install -r requirements.txt

Operational assessment:

bash run_audio.sh --model Kimi-Audio --dataset all

procedure::

- Download the dataset to the specified directory.

- configure

config.yamlSpecifies the dataset path. - Run the script to generate the assessment report.

caveat

- Ensure that the audio file format is WAV with a sample rate of 16kHz.

- The GPU environment accelerates inference and CUDA 12.4 is recommended.

- Model loading requires a large amount of memory, at least 16GB of graphics memory is recommended.

- Non-Docker deployments require manual installation of system dependencies, see the GitHub documentation.

application scenario

- Intelligent Customer Service

Kimi-Audio can be used to build customer service systems for voice interaction. It transcribes user questions through speech recognition, combines audio Q&A to provide answers, and generates natural voice responses. It is suitable for e-commerce platforms or technical support scenarios to enhance user experience. - Educational aids

In language learning, Kimi-Audio transcribes student pronunciation, analyzes emotion and intonation, and provides feedback. It also transcribes teaching texts into speech to generate listening materials, making it suitable for online education platforms. - content creation

Video producers can use Kimi-Audio to generate subtitles or dubbing. It can automatically generate accurate subtitles for videos or convert scripts into speech with multiple tones to simplify post-production. - medical record

Doctors can input cases by voice, Kimi-Audio transcribes them into text and categorizes the emotions to assist in diagnosing the patient's emotional state. Suitable for hospital information systems.

QA

- What languages does Kimi-Audio support?

Kimi-Audio supports multi-language speech recognition and generation, especially in English and Chinese. For other language support, please refer to the official documentation. - How to optimize the speed of reasoning?

To use GPU acceleration, installflash-attnlibrary, set thetorch_dtype=torch.bfloat16. In addition, adjustmentsaudio_top_kcap (a poem)text_top_kparameter balances speed and quality. - Does the model support real-time dialog?

Yes, Kimi-Audio's streaming decoder supports low-latency audio generation for real-time voice interaction. - How do I add a custom dataset for evaluation?

existKimi-Audio-EvalkitCreate a JSONL file in theindex,audio_pathcap (a poem)questionfield. Modifyconfig.yamlJust specify the dataset path and run the evaluation script.