General Introduction

llm.pdf is an open source project that lets users run Large Language Models (LLMs) directly inside PDF files. Developed by EvanZhouDev and hosted on GitHub, the project compiles llama.cpp to asm.js via Emscripten and combines it with the PDF format's support for embedded JavaScript, so that LLM inference runs entirely within the PDF file. The project supports quantized models in GGUF format and recommends Q8 quantization for best performance. Users generate a PDF file containing the LLM with the provided Python script. The project is a proof of concept that demonstrates the feasibility of running complex AI models in a non-traditional environment, aimed at developers and researchers interested in AI and PDF technologies.

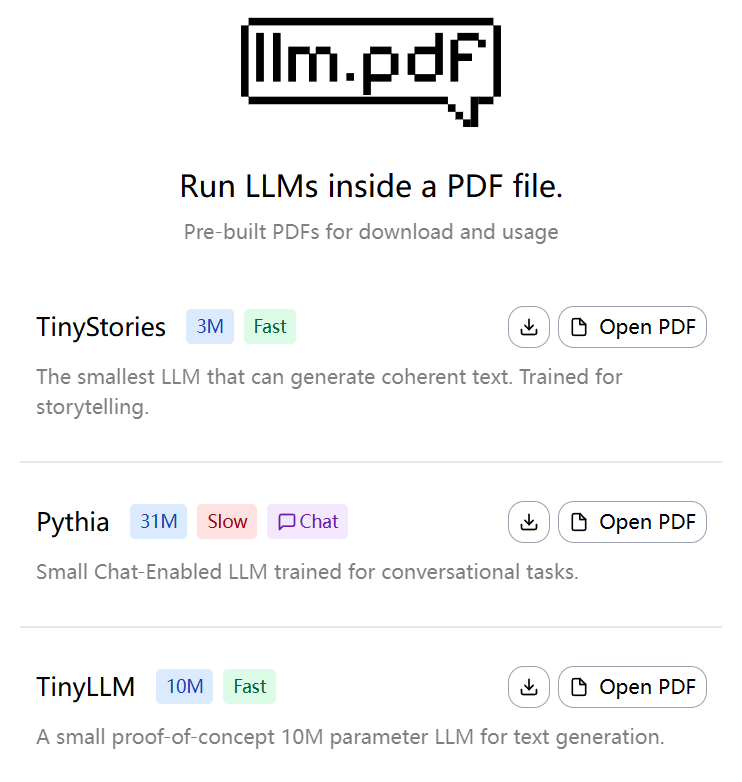

Demo: https://evanzhoudev.github.io/llm.pdf/

Feature List

- Run large language models in PDF files to support text generation and interaction.

- Compile llama.cpp to asm.js using Emscripten to implement model inference in a browser environment.

- Supports quantized models in GGUF format; Q8 quantization is recommended to improve runtime speed.

- Provides a Python script, generatePDF.py, that generates PDF files containing an LLM.

- Supports embedding model files in the PDF via base64 encoding to simplify distribution and use.

- Provide YouTube video tutorials to show the project building process and usage in detail.

- Open source code, allowing user customization and extended functionality.

Usage Guide

Installation and environment preparation

To use the llm.pdf project, users need to prepare an environment that supports Python 3 and install the necessary dependencies. The following are the detailed installation steps:

- Cloning the project repository

Open a terminal and run the following commands to clone the llm.pdf repository:

    git clone https://github.com/EvanZhouDev/llm.pdf.git
    cd llm.pdf

- Installing Python dependencies

The project requires a Python environment; Python 3.8 or higher is recommended. Go to the scripts directory and install the required libraries:

    cd scripts
    pip install -r requirements.txt

Make sure Emscripten and any other necessary compilation tools are installed. If Emscripten is not installed, refer to its official documentation to configure it.
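The prerequisites above can be checked before going further. The following is a minimal sketch, not part of the project: it assumes that Emscripten is exposed on the PATH as emcc (Emscripten's compiler front end), and check_environment is a hypothetical helper name.

```python
import shutil
import sys

MIN_PYTHON = (3, 8)  # minimum version recommended in the text above


def check_environment(min_python=MIN_PYTHON, compiler="emcc"):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    found = tuple(sys.version_info[:2])
    if found < tuple(min_python):
        problems.append(
            f"Python {min_python[0]}.{min_python[1]} or newer is recommended, "
            f"found {found[0]}.{found[1]}"
        )
    # shutil.which returns None when the executable is not on PATH.
    if shutil.which(compiler) is None:
        problems.append(f"{compiler} was not found on PATH")
    return problems


for problem in check_environment():
    print("warning:", problem)
```

An empty result only means the tools were found, not that their versions are the ones the project expects.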

- Preparing the GGUF model

llm.pdf only supports quantized models in GGUF format; Q8-quantized models are recommended for best performance. Users can download GGUF models, such as TinyLLaMA or other small LLMs, from Hugging Face or other model repositories. The model file needs to be saved locally, for example at /path/to/model.gguf.

- Generating PDF files

The project provides a Python script, generatePDF.py, for generating a PDF file that contains the LLM. Run the following command:

    python3 generatePDF.py --model "/path/to/model.gguf" --output "/path/to/output.pdf"

--model: Specifies the path of the GGUF model.

--output: Specifies the path where the generated PDF file is saved.

The script embeds the model file in PDF via base64 encoding and injects the JavaScript code needed to run the inference. The generation process may take a few minutes, depending on model size and device performance.
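To illustrate what base64 embedding implies for file size, here is a minimal sketch. It is not the project's actual code: the shape of the injected JavaScript is an assumption, and MODEL_B64 and both function names are hypothetical. The point is that base64 turns every 3 bytes into 4 characters, so the embedded model is roughly one third larger than the original file.

```python
import base64
import math


def base64_size(n_bytes):
    """Length in characters of the padded base64 encoding of n_bytes bytes."""
    return 4 * math.ceil(n_bytes / 3)


def embed_as_js_string(data, var_name="MODEL_B64"):
    """Return a JavaScript assignment holding data as a base64 string literal.

    var_name is a hypothetical identifier used only for this illustration.
    """
    encoded = base64.b64encode(data).decode("ascii")
    return f'var {var_name} = "{encoded}";'


sample = b"GGUF" + bytes(range(8))  # stand-in bytes, not a real model
print(embed_as_js_string(sample))
print(len(sample), "bytes ->", base64_size(len(sample)), "base64 characters")
```

This overhead is one reason small models are the practical choice for this approach.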

- Running PDF files

The generated PDF file can be opened in a JavaScript-enabled PDF reader, such as Adobe Acrobat or a modern browser's PDF viewer. Once the PDF is opened, the model loads automatically and is ready to run inference. The user can enter text through the PDF interface, and the model generates a response. Note: due to performance limitations, a 135M-parameter model takes about 5 seconds to generate each token.
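The per-token figure above translates directly into waiting time. The following sketch does the arithmetic, assuming a constant rate of about 5 seconds per token; actual speed depends on the device and the PDF viewer, and estimate_seconds is a hypothetical helper.

```python
SECONDS_PER_TOKEN = 5.0  # approximate figure quoted above for a 135M-parameter model


def estimate_seconds(n_tokens, seconds_per_token=SECONDS_PER_TOKEN):
    """Rough wall-clock time to generate n_tokens at a constant rate."""
    if n_tokens < 0:
        raise ValueError("n_tokens must be non-negative")
    return n_tokens * seconds_per_token


for n in (10, 50, 200):
    secs = estimate_seconds(n)
    print(f"{n} tokens: about {secs:.0f} s ({secs / 60:.1f} min)")
```

At this rate even a short paragraph takes several minutes, which is why short prompts and short answers are the realistic use case.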

Key Features in Use

- Text Generation

In the PDF file, the user interacts with the LLM through a text input box. After a prompt is entered, the model generates a response token by token. Short prompts are recommended to minimize inference time. For example, if you enter "Write a short sentence about cats," the model might respond "Cats like to chase hairballs." The generated text is displayed in the output area of the PDF.

- Model Selection and Optimization

The project supports a variety of GGUF models, and users can choose different sizes according to their needs. The Q8-quantized model is the recommended choice because it balances output quality and speed. Users who need faster responses can try smaller models (e.g. 135M parameters), although generation quality may be somewhat lower.

- Custom extensions

Because llm.pdf is open source, users can modify scripts/generatePDF.py or the injected JavaScript code to implement custom functionality, for example to add new interactive interfaces or to support other model formats. Doing so requires familiarity with Emscripten and the PDF JavaScript API.
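To make the Q8 recommendation in the model selection notes more concrete, the sketch below shows the general idea of 8-bit block quantization: each block of weights shares one scale, and each weight is stored as a signed 8-bit integer. This is a simplified illustration and not code from llm.pdf or llama.cpp. The block size of 32 and the 2-byte scale are assumptions chosen to resemble ggml's Q8_0 layout, and all function names are hypothetical.

```python
BLOCK_SIZE = 32  # weights per block; assumed here to resemble ggml's Q8_0


def quantize_block(values):
    """Quantize one block of floats to (scale, list of int8-range values)."""
    amax = max(abs(v) for v in values)
    scale = amax / 127.0 if amax > 0 else 1.0
    quants = [max(-127, min(127, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from a quantized block."""
    return [scale * q for q in quants]


def q8_size_bytes(n_params, block_size=BLOCK_SIZE, scale_bytes=2):
    """Approximate storage: one byte per weight plus one scale per block."""
    n_blocks = -(-n_params // block_size)  # ceiling division
    return n_params + n_blocks * scale_bytes


weights = [((i * 37) % 64 - 32) / 32.0 for i in range(BLOCK_SIZE)]
scale, quants = quantize_block(weights)
restored = dequantize_block(scale, quants)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.5f}, max error={max_error:.5f}")
print(f"135M weights: about {q8_size_bytes(135_000_000) / 1e6:.0f} MB")
```

The size estimate ignores file metadata and any tensors stored in other formats, so it is only a rough lower bound on the size of a real model file.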

Caveats

- Performance requirements: Running LLM inference in a PDF is computationally demanding. A device with at least 8GB of RAM is recommended.

- Model compatibility: Only quantized models in GGUF format are supported. Non-quantized models or non-GGUF formats will cause generation to fail.

- Browser compatibility: Some older browsers may not support asm.js. The latest version of Chrome or Firefox is recommended.

- Debugging: If the PDF file does not work, check the terminal log or your browser's developer tools (F12) for JavaScript errors.
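The model compatibility caveat can be checked cheaply before generating a PDF. A GGUF file starts with the four ASCII bytes GGUF followed by a little-endian 32-bit version number, so a quick header check catches files in the wrong format. The sketch below is an independent illustration with hypothetical function names; it does not verify that the model is quantized, only that the container looks like GGUF.

```python
import struct

GGUF_MAGIC = b"GGUF"  # first four bytes of a GGUF file


def parse_gguf_header(header):
    """Return the GGUF version from the first 8 bytes, or None if not GGUF."""
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The magic is followed by a little-endian unsigned 32-bit version.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def read_gguf_version(path):
    """Read the header of the file at path and return its GGUF version or None."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(8))


print(parse_gguf_header(b"GGUF" + struct.pack("<I", 3)))  # a valid header
print(parse_gguf_header(b"PK\x03\x04\x00\x00\x00\x00"))   # not GGUF
```

Calling read_gguf_version("/path/to/model.gguf") before running the generation script gives an early, readable failure instead of an error partway through.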

Learning Resources

The project provides a YouTube video tutorial that explains the build process of llm.pdf in detail. Users can visit the README in the GitHub repository or the docs directory for more documentation. Community discussions can be found on the GitHub Issues page, where developers can ask questions or contribute code.

Application Scenarios

- AI Technology Showcase

llm.pdf can be used to show clients or students that AI models can operate in non-traditional environments. For example, at a technical conference, a developer can open the PDF file and demonstrate an LLM's text generation in real time, highlighting the portability of the approach.

- Education and Research

Students and researchers can use llm.pdf to study how LLM inference works using the PDF's JavaScript functionality. The project provides a hands-on platform for understanding the role of model quantization and how Emscripten compilation works.

- Offline AI Deployment

In network-constrained environments, llm.pdf provides a server-less way to deploy AI. Users can embed models into PDFs and distribute them to others for offline text generation and interaction.

FAQ

- What models does llm.pdf support?

llm.pdf only supports quantized models in GGUF format; Q8-quantized models are recommended for best performance. Users can download compatible models from Hugging Face.

- Why are the generated PDF files running slowly?

LLM inference in a PDF is limited by browser performance and model size. A 135M-parameter model takes about 5 seconds per token. Using a Q8-quantized model and running on a high-performance device is recommended.

- Do I need an internet connection to use llm.pdf?

No. Once the PDF is generated, the model and inference code are embedded in the file and can run offline. However, generating the PDF requires an internet connection to download the dependencies.

- How to debug errors in PDF files?

Use your browser's developer tools (F12) to check for JavaScript errors when opening the PDF file. Also check the terminal log of generatePDF.py.
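When the problem is on the generation side, it helps to keep the script's full terminal output. The following is a minimal sketch of one way to capture it using Python's standard subprocess module. The helper name run_and_capture is hypothetical, and the demonstration runs a harmless child process rather than generatePDF.py itself.

```python
import subprocess
import sys


def run_and_capture(command):
    """Run command and return (exit_code, combined stdout and stderr text)."""
    result = subprocess.run(
        command,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge error output into the same stream
        text=True,
    )
    return result.returncode, result.stdout


# Demonstration with a trivial child process. To capture the generation log,
# pass the generatePDF.py command line from the steps above as the list instead.
code, output = run_and_capture([sys.executable, "-c", "print('hello from child')"])
print("exit code:", code)
print(output.strip())
```

A non-zero exit code together with the captured text is usually enough to tell whether the failure is a missing dependency, a bad model path, or something in the script itself.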