General Introduction

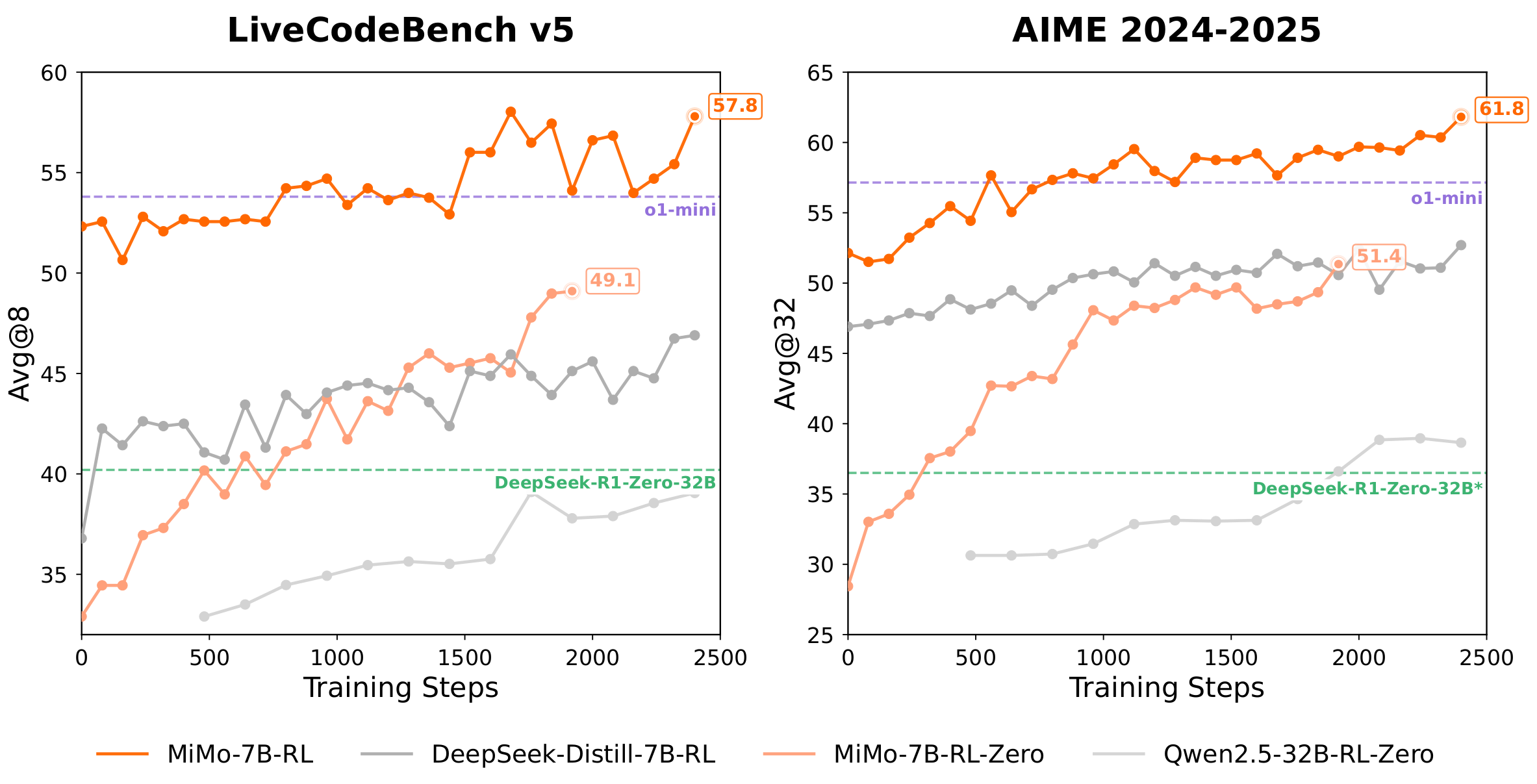

MiMo is an open-source large language model project developed by Xiaomi, focused on mathematical reasoning and code generation. Its core product is the MiMo-7B family of models, which consists of a base model (Base), a supervised fine-tuning model (SFT), a reinforcement learning model trained from the base model (RL-Zero), and a reinforcement learning model trained from the SFT model (RL). These 7-billion-parameter models achieve reasoning ability comparable to larger models through optimized pre-training data, multi-token prediction (MTP), and reinforcement learning. MiMo-7B-RL outperforms OpenAI o1-mini on mathematical and coding tasks. The models support the vLLM and SGLang inference engines and are available for download from Hugging Face and ModelScope. Xiaomi open-sourced MiMo with the aim of pushing the boundaries of efficient reasoning models.

Function List

- Mathematical reasoning: Solves competition-level math problems such as AIME and MATH-500, and supports complex multi-step reasoning.

- Code generation: Generates high-quality code in Python, C++, and other languages for LiveCodeBench programming tasks.

- Multi-Token Prediction (MTP): Predicts multiple tokens at once, with an acceptance rate of about 90% during inference, improving speed and accuracy.

- Open-source models: The MiMo-7B series (Base, SFT, RL-Zero, RL) is provided for developers to use freely.

- Efficient inference engines: Supports Xiaomi's customized vLLM and SGLang to optimize inference performance.

- Reinforcement learning optimization: Strengthens model reasoning through reinforcement learning on a dataset of 130,000 math and code problems.

- Seamless Rollout Engine: Accelerates reinforcement learning, with 2.29x faster training and 1.96x faster validation.

- Flexible deployment: Hugging Face Transformers, vLLM, and SGLang are supported.

Usage Guide

Installation and Deployment

The MiMo-7B model does not require a standalone software installation, but it does require a configured inference environment. The detailed deployment steps follow. Python 3.8 or higher is recommended.

1. Environment preparation

Ensure that Python and pip are installed on your system. A virtual environment is recommended to avoid dependency conflicts:

python3 -m venv mimo_env

source mimo_env/bin/activate
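With the virtual environment active, it can be useful to confirm that its interpreter meets the recommended version before installing anything. The following is a minimal sketch; the helper name `meets_minimum` is hypothetical, and the 3.8 threshold is simply the recommendation stated above.

```python
import sys

# Minimum version recommended in this guide.
MIN_VERSION = (3, 8)


def meets_minimum(version=None, minimum=MIN_VERSION):
    """Return True if `version` (major, minor) is at least `minimum`.

    Hypothetical helper for illustration; defaults to the running interpreter.
    """
    if version is None:
        version = sys.version_info[:2]
    return tuple(version[:2]) >= tuple(minimum)


print("Python version OK" if meets_minimum() else "Python is older than 3.8")
```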

2. Install dependencies

MiMo recommends using Xiaomi's customized vLLM branch, which supports MTP functionality. The installation command is as follows:

pip install torch transformers

pip install "vllm @ git+https://github.com/XiaomiMiMo/vllm.git@feat_mimo_mtp_stable_073"

If using SGLang, execute:

python3 -m pip install "sglang[all] @ git+https://github.com/sgl-project/sglang.git@main#egg=sglang&subdirectory=python"

3. Download the model

The MiMo-7B models are hosted on Hugging Face and ModelScope. Using MiMo-7B-RL as an example:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "XiaomiMiMo/MiMo-7B-RL"

model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)

tokenizer = AutoTokenizer.from_pretrained(model_id)

The model files total about 14GB, so make sure you have enough storage space. Downloading from ModelScope works in a similar way: replace model_id with the corresponding ModelScope address.
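The 14GB figure is consistent with a rough back-of-the-envelope estimate: about 7 billion parameters stored at 2 bytes each. The sketch below reproduces that arithmetic and checks free disk space. It assumes 16-bit weights and a nominal 7e9 parameter count, neither of which is stated in this guide, and both helper names are hypothetical.

```python
import shutil


def estimate_weights_gb(num_params, bytes_per_param=2):
    """Approximate checkpoint size in GB (1 GB = 10**9 bytes).

    Assumes every parameter is stored at `bytes_per_param` bytes
    (2 bytes corresponds to 16-bit weights, an assumption here).
    """
    return num_params * bytes_per_param / 1e9


def has_free_space(path, required_gb):
    """Return True if the filesystem holding `path` has enough free space."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= required_gb


size_gb = estimate_weights_gb(7e9)  # nominal 7 billion parameters
print(f"Estimated weights: {size_gb:.0f} GB")
print("Enough free space in current directory:", has_free_space(".", size_gb))
```

The estimate covers the weights only; the download cache and any converted copies need additional room.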

4. Start the inference service

Start the inference server using vLLM (recommended):

python3 -m vllm.entrypoints.api_server --model XiaomiMiMo/MiMo-7B-RL --host 0.0.0.0 --trust-remote-code

or use SGLang:

python3 -m sglang.launch_server --model-path XiaomiMiMo/MiMo-7B-RL --host 0.0.0.0 --trust-remote-code

Once the server is started, you can interact with the model via API or command line.
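As an illustration of the API route, the sketch below builds a request for the vLLM server started above using only the Python standard library. It assumes the demo server's `/generate` route and the default port 8000, and the `max_tokens` value is arbitrary. SGLang's port and request schema may differ, so this targets vLLM only. The helper names are hypothetical.

```python
import json
import urllib.request


def build_generate_request(prompt, host="localhost", port=8000,
                           temperature=0.6, max_tokens=512):
    """Build a POST request for the vLLM demo API server.

    Assumes the /generate route and port 8000 (assumptions, not from this guide).
    """
    payload = {"prompt": prompt, "temperature": temperature, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"http://{host}:{port}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=120):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


req = build_generate_request("Solve: 2x + 3 = 7")
print(req.full_url)
# With the server running, call: print(send(req))
```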

Main Functions

Mathematical Reasoning

MiMo-7B-RL excels in mathematical reasoning tasks, especially on the AIME and MATH-500 datasets. Users can input mathematical problems and the model generates the answers. Example:

from vllm import LLM, SamplingParams

llm = LLM(model="XiaomiMiMo/MiMo-7B-RL", trust_remote_code=True)

sampling_params = SamplingParams(temperature=0.6)

outputs = llm.generate(["Solve: 2x + 3 = 7"], sampling_params)

print(outputs[0].outputs[0].text)

Operating tips:

- Use temperature=0.6 to balance generation quality and diversity.

- Complex problems can be entered step by step so that the description stays clear.

- Reported scores: AIME 2024 (68.2% Pass@1), AIME 2025 (55.4% Pass@1), and MATH-500 (95.8% Pass@1).
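For readers unfamiliar with the metric, Pass@1 is the fraction of problems solved on a single attempt. The sketch below shows one plausible way to average it over several runs. The function name and the toy data are illustrative only, not real evaluation results, and the exact averaging protocol used for the scores above is an assumption.

```python
def pass_at_1(run_results):
    """Average Pass@1 over several runs.

    `run_results` is a list of runs; each run is a list of booleans,
    one per problem, marking whether the single sampled answer was correct.
    """
    per_run = [sum(run) / len(run) for run in run_results]
    return sum(per_run) / len(per_run)


# Toy data for illustration only (two runs over four problems).
runs = [
    [True, False, True, True],
    [True, True, False, True],
]
print(f"Pass@1 averaged over {len(runs)} runs: {pass_at_1(runs):.1%}")
```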

Code Generation

MiMo-7B-RL generates high-quality code in Python, C++, and other languages. Its reported scores are 57.8% Pass@1 on LiveCodeBench v5 and 49.3% Pass@1 on LiveCodeBench v6. Example:

from vllm import LLM, SamplingParams

llm = LLM(model="XiaomiMiMo/MiMo-7B-RL", trust_remote_code=True)

sampling_params = SamplingParams(temperature=0.6)

outputs = llm.generate(["Write a Python function to calculate factorial"], sampling_params)

print(outputs[0].outputs[0].text)

Operating tips:

- Provide task-specific descriptions, such as function input and output requirements.

- Check the generated code for syntax errors before using it.

- Suitable for algorithm design and programming competition tasks.
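The syntax check suggested in the tips above can be automated for Python output with the standard library's `ast` module. This is a minimal sketch; the helper name `python_syntax_error` is hypothetical, and it assumes the code has already been separated from any surrounding explanation in the model's reply.

```python
import ast


def python_syntax_error(source):
    """Return None if `source` parses as Python, else a short error message."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None


# Stand-in for text returned by the model.
generated = "def factorial(n):\n    return 1 if n <= 1 else n * factorial(n - 1)\n"

error = python_syntax_error(generated)
print("Syntax OK" if error is None else f"Syntax error at {error}")
```

Parsing only confirms that the code is well formed. It does not run the code or verify that the logic is correct, so tests are still needed.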

Multi-Token Prediction (MTP)

MTP is a core feature of MiMo that accelerates inference by predicting multiple tokens with an acceptance rate of about 90%. Enabling MTP requires Xiaomi's customized vLLM:

from vllm import LLM, SamplingParams

llm = LLM(model="XiaomiMiMo/MiMo-7B-RL", trust_remote_code=True, num_speculative_tokens=1)

sampling_params = SamplingParams(temperature=0.6)

outputs = llm.generate(["Write a Python script"], sampling_params)

print(outputs[0].outputs[0].text)

Operating tips:

- Set num_speculative_tokens=1 to enable MTP.

- MTP works best in high-throughput scenarios.

- The MTP layer is tuned in the pre-training and SFT phases and frozen in the RL phase.
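To get a feel for what a 90% acceptance rate means, the sketch below estimates how many tokens each decoding step yields on average. It uses a simplified model that is an assumption, not a description of MiMo's implementation: each speculative token is accepted independently with the same probability, drafting stops at the first rejection, and the cost of producing the draft is ignored.

```python
def expected_tokens_per_step(acceptance_rate, num_speculative_tokens=1):
    """Expected tokens emitted per verification step under a simplified model.

    The main model always contributes one token; the i-th speculative token
    counts only if it and all earlier speculative tokens were accepted.
    """
    expected = 1.0
    survive = 1.0
    for _ in range(num_speculative_tokens):
        survive *= acceptance_rate
        expected += survive
    return expected


tokens = expected_tokens_per_step(0.9, num_speculative_tokens=1)
print(f"Expected tokens per step: {tokens:.2f}")
```

Under these assumptions, one speculative token at 90% acceptance gives about 1.9 tokens per step. This is an upper bound on the speedup, since real decoding also pays for the extra prediction.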

Seamless Rollout Engine

The MiMo team developed a Seamless Rollout Engine to speed up reinforcement learning training. Users do not operate this feature directly, but its effect is reflected in model performance:

- 2.29x faster training and 1.96x faster validation.

- Continuous rollout, asynchronous reward calculation, and early termination are integrated to reduce GPU idle time.
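The idea behind asynchronous reward calculation can be illustrated with a toy `asyncio` sketch: scoring of one sample is scheduled in the background while the next sample is generated, so neither stage waits for the other. This is not Xiaomi's implementation. Every function here is a hypothetical stand-in, and the sleeps merely simulate work.

```python
import asyncio


async def generate_sample(prompt):
    """Stand-in for model generation (hypothetical)."""
    await asyncio.sleep(0.01)
    return f"response to {prompt}"


async def score_sample(response):
    """Stand-in for a slow reward check, such as running unit tests (hypothetical)."""
    await asyncio.sleep(0.01)
    return 1.0 if "p2" in response else 0.0


async def collect_rewards(prompts):
    """Generate samples one by one while earlier samples are scored in the background."""
    reward_tasks = []
    for prompt in prompts:
        response = await generate_sample(prompt)
        # Schedule scoring and move straight on to the next generation.
        reward_tasks.append(asyncio.create_task(score_sample(response)))
    # Results come back in the same order as the prompts.
    return await asyncio.gather(*reward_tasks)


rewards = asyncio.run(collect_rewards(["p1", "p2", "p3"]))
print(rewards)
```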

Inference Engine Selection

- vLLM (recommended): Xiaomi's customized vLLM (based on vLLM 0.7.3) supports MTP and offers the best performance. Suitable for high-performance inference.

- SGLang: Supports standard inference, with MTP support coming soon. Suitable for rapid deployment.

- Hugging Face Transformers: Suitable for simple testing or local debugging, but does not support MTP.

Notes

- System prompt: An empty system prompt is recommended for optimal performance.

- Hardware requirements: A single GPU is recommended (e.g. NVIDIA A100 40GB). CPU inference requires at least 32GB of RAM.

- Evaluation setup: All evaluations use temperature=0.6. AIME and LiveCodeBench scores are averaged over multiple runs.

- Community support: If you run into problems, you can file an issue on GitHub or contact mimo@xiaomi.com.
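When a chat-style interface is used, the empty system prompt recommendation above can be followed by sending only the user turn. The sketch below builds such a message list in the common role/content format. The helper name is hypothetical, and treating "empty" as "omit the system message entirely" is an assumption.

```python
def build_messages(user_prompt, system_prompt=""):
    """Build a chat message list, omitting the system turn when it is empty."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages("Solve: 2x + 3 = 7")
print(messages)
```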

Application Scenarios

- Academic research

The MiMo-7B models are suitable for researchers exploring mathematical reasoning and code generation algorithms. Developers can fine-tune the open-source models to investigate pre-training and reinforcement learning strategies.

- Programming education

Teachers can use MiMo to generate answers to programming exercises, and students can verify code logic or learn how algorithms are implemented.

- Competition training

MiMo can work through AIME and MATH-500 competition questions, which helps students preparing for math and programming competitions.

- AI development

Developers can build customized applications based on MiMo-7B, such as automated code review tools or mathematical solvers.

FAQ

- What models are available in the MiMo-7B series?

MiMo-7B includes a base model (Base), a supervised fine-tuning model (SFT), a reinforcement learning model trained from the base model (RL-Zero), and a reinforcement learning model trained from the SFT model (RL). The RL version has the best performance.

- How do I choose an inference engine?

Xiaomi's customized vLLM is recommended (it supports MTP and offers the best performance). SGLang is suitable for rapid deployment, and Hugging Face Transformers is suitable for simple testing.

- How does MTP improve performance?

MTP predicts multiple tokens at once, with an acceptance rate of about 90% during inference, which significantly improves speed. It is best suited to high-throughput scenarios.

- Does the model support multiple languages?

MiMo is mainly optimized for math and code tasks. It supports English and some Chinese input, with no explicit support for other languages.

- What are the hardware requirements?

A single GPU (e.g., NVIDIA A100 40GB) can run MiMo-7B-RL. CPU inference requires at least 32GB of RAM and is slower.