General Introduction

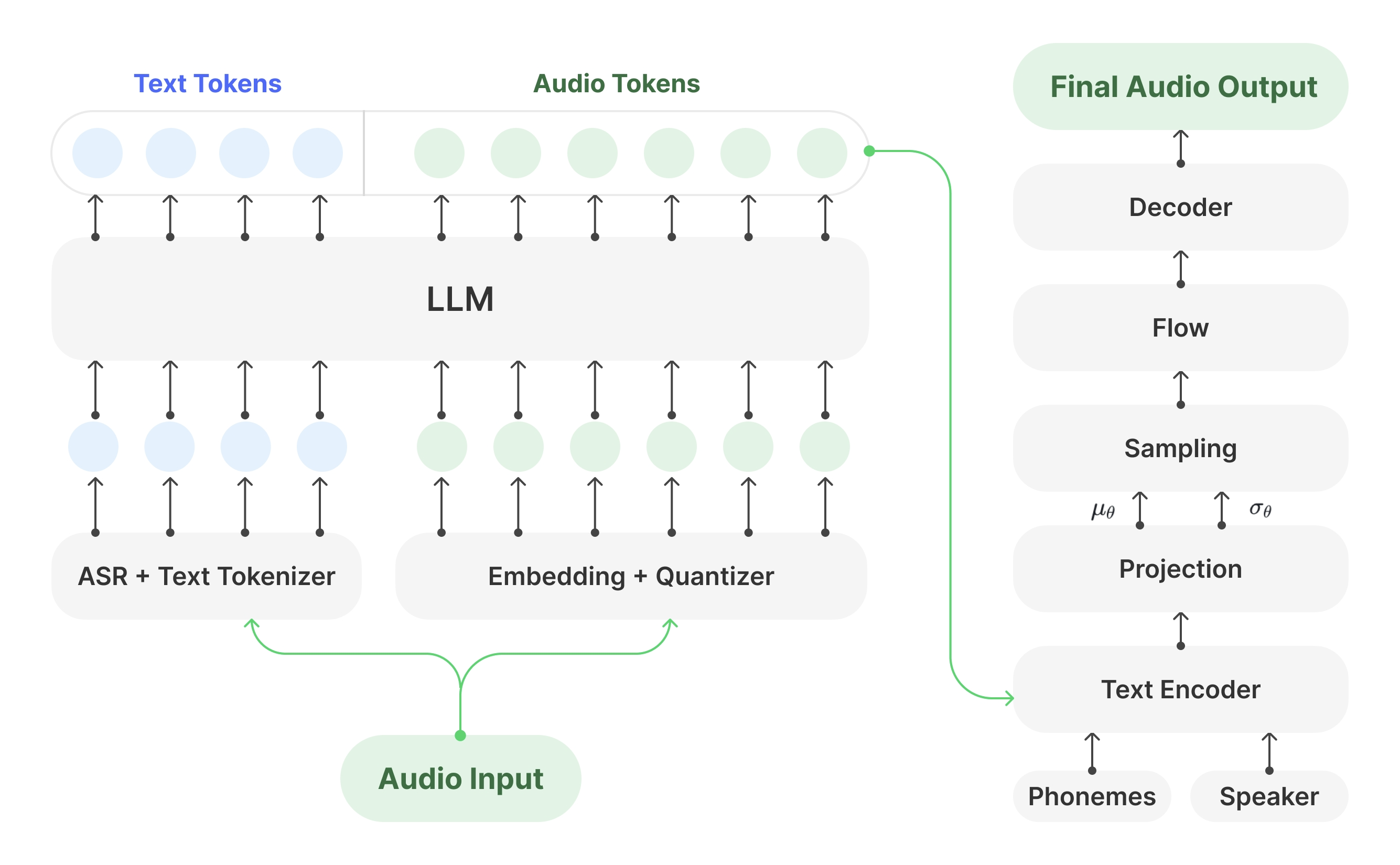

Muyan-TTS is an open-source text-to-speech (TTS) model designed for podcasting scenarios. It is pre-trained on more than 100,000 hours of podcast audio and supports zero-shot speech synthesis, generating high-quality, natural-sounding speech. The model is built on Llama-3.2-3B combined with a SoVITS decoder. Muyan-TTS also supports personalized voice customization from tens of minutes of single-speaker speech data, so it can be tailored to a specific timbre. The project is released under the Apache 2.0 license with the complete training code, data processing pipeline, and model weights, and is hosted on GitHub, Hugging Face, and ModelScope to encourage further development and community contributions.

Feature List

- Zero-shot speech synthesis: Generate high-quality podcast-style speech without additional training, adapting to a wide range of input timbres.

- Personalized voice customization: Generate a specific speaker's voice by fine-tuning on a few minutes to tens of minutes of single-speaker voice data.

- Efficient inference speed: Generating one second of audio takes approximately 0.33 seconds on a single NVIDIA A100 GPU, which is faster than several other open-source TTS models.

- Open source training code: Provides a complete training process from base model to fine-tuned model with support for developer customization.

- Data processing pipeline: Integrates Whisper, FunASR, and NISQA to clean and transcribe podcast audio data.

- API Deployment Support: Provides API tools for easy integration into podcasts or other voice applications.

- Open model weights: The Muyan-TTS and Muyan-TTS-SFT model weights can be downloaded from Hugging Face and ModelScope.
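To make the speed figure concrete, the sketch below reads it as a real-time factor: about 0.33 seconds of compute per second of generated audio on a single A100. That reading, the function name `estimated_synthesis_seconds`, and the 30-minute episode are illustrative assumptions; actual throughput depends on hardware, text length, and settings.

```python
def estimated_synthesis_seconds(audio_seconds: float, rtf: float = 0.33) -> float:
    """Estimate compute time for a clip, given a real-time factor (RTF).

    RTF is compute seconds per second of generated audio. The default of
    0.33 is the single-A100 figure quoted above, read as an RTF (assumption).
    """
    if audio_seconds < 0 or rtf <= 0:
        raise ValueError("audio_seconds must be >= 0 and rtf must be > 0")
    return audio_seconds * rtf


if __name__ == "__main__":
    episode_seconds = 30 * 60  # hypothetical 30-minute podcast episode
    seconds = estimated_synthesis_seconds(episode_seconds)
    print(f"Roughly {seconds / 60:.1f} minutes of compute for a 30-minute episode")
```

An RTF below 1.0 means audio is produced faster than it plays back.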

Usage Guide

Installation process

Install Muyan-TTS in a Linux environment; Ubuntu is recommended. The detailed steps are as follows:

- Clone the repository

Open a terminal and run the following commands to clone the Muyan-TTS repository:

    git clone https://github.com/MYZY-AI/Muyan-TTS.git
    cd Muyan-TTS

- Create a virtual environment

Create a Python 3.10 virtual environment using Conda:

    conda create -n muyan-tts python=3.10 -y
    conda activate muyan-tts

- Install dependencies

Run the following command to install the project dependencies:

    make build

Note: FFmpeg must also be installed. On Ubuntu:

    sudo apt update
    sudo apt install ffmpeg

- Download the pre-trained models

Download the model weights from the following sources:

- Muyan-TTS: Hugging Face, ModelScope

- Muyan-TTS-SFT: Hugging Face, ModelScope

- chinese-hubert-base: Hugging Face

Place the downloaded model files in the `pretrained_models` directory, which is structured as follows:

    pretrained_models
    ├── chinese-hubert-base
    ├── Muyan-TTS
    └── Muyan-TTS-SFT

- Verify the installation

After ensuring that all dependencies and model files are properly installed, inference or training can be performed.
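One way to confirm the model files are in place is a quick layout check. The sketch below only tests that the three directories named above exist and are non-empty; it does not validate the weights themselves. The helper name `missing_model_dirs` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Directory names taken from the layout described above.
EXPECTED_DIRS = ("chinese-hubert-base", "Muyan-TTS", "Muyan-TTS-SFT")


def missing_model_dirs(root: str = "pretrained_models") -> list[str]:
    """Return the expected sub-directories that are absent or empty under root."""
    base = Path(root)
    missing = []
    for name in EXPECTED_DIRS:
        path = base / name
        # A directory counts as present only if it exists and holds at least one entry.
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_model_dirs()
    if missing:
        print("Missing or empty model directories:", ", ".join(missing))
    else:
        print("All expected model directories are present.")
```

Run it from the repository root so that the relative `pretrained_models` path resolves correctly.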

Using the base model (zero-shot speech synthesis)

The Muyan-TTS base model supports zero-shot speech synthesis, which is suitable for quickly generating podcast-style speech. The steps are as follows:

- Prepare input text and reference audio

Prepare the text to synthesize (`text`), a reference recording (`ref_wav_path`), and the prompt text that accompanies it (`prompt_text`). Example:

    ref_wav_path="assets/Claire.wav"
    prompt_text="Although the campaign was not a complete success, it did provide Napoleon with valuable experience and prestige."
    text="Welcome to the captivating world of podcasts, let's embark on this exciting journey together."

- Run the inference command

Use the following command to generate speech with `model_type` set to `base`:

    python tts.py

Or run the core inference code directly:

    # `Inference` is the class used by the repository's tts.py;
    # import it the same way that script does.
    async def main(model_type, model_path):
        # Load the model with vLLM acceleration disabled.
        tts = Inference(model_type, model_path, enable_vllm_acc=False)
        wavs = await tts.generate(
            ref_wav_path="assets/Claire.wav",
            prompt_text="Although the campaign was not a complete success, it did provide Napoleon with valuable experience and prestige.",
            text="Welcome to the captivating world of podcasts, let's embark on this exciting journey together.",
        )
        output_path = "logs/tts.wav"
        # Write the first generated audio result to disk.
        with open(output_path, "wb") as f:
            f.write(next(wavs))
        print(f"Speech generated in {output_path}")

The generated speech is saved to `logs/tts.wav`.

- Replace the reference audio

In zero-shot mode, `ref_wav_path` can be replaced with a recording of any speaker, and the model will mimic that speaker's timbre when generating new speech.
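Before swapping in a new reference recording, it can help to check its basic properties. The sketch below uses Python's standard `wave` module, which reads uncompressed PCM WAV files only. The helper name `describe_wav` is hypothetical, and this document does not state any duration or sample-rate requirements, so the output is informational only.

```python
import os
import wave


def describe_wav(path: str) -> dict:
    """Return channel count, sample rate, and duration of a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_rate": rate,
            "duration_seconds": frames / rate,
        }


if __name__ == "__main__":
    ref_wav_path = "assets/Claire.wav"  # reference file used in the example above
    if os.path.exists(ref_wav_path):
        print(describe_wav(ref_wav_path))
    else:
        print(f"{ref_wav_path} not found; run this from the repository root.")
```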

Using the SFT model (personalized voice customization)

The SFT model is fine-tuned on single-speaker speech data and is suited to generating a specific timbre. The procedure is as follows:

- Prepare training data

Collect at least a few minutes of single-speaker speech data and save it in WAV format. As an example, LibriSpeech's dev-clean dataset can be downloaded with:

    wget --no-check-certificate https://www.openslr.org/resources/12/dev-clean.tar.gz

After extracting the archive, set `librispeech_dir` in `prepare_sft_dataset.py` to the extraction path.

- Generate training data

Run the following command to process the data and generate `data/tts_sft_data.json`:

    ./train.sh

The data format is as follows:

    {
      "file_name": "path/to/audio.wav",
      "text": "corresponding transcript text"
    }

- Adjust the training configuration

Edit `training/sft.yaml` to set parameters such as the learning rate and batch size.

- Start training

`train.sh` automatically runs the following command to start training:

    llamafactory-cli train training/sft.yaml

After training completes, the model weights are saved in `pretrained_models/Muyan-TTS-new-SFT`.

- Copy the SoVITS weights

Copy the base model's `sovits.pth` into the new model directory:

    cp pretrained_models/Muyan-TTS/sovits.pth pretrained_models/Muyan-TTS-new-SFT

- Run inference

To generate speech with the SFT model, keep `ref_wav_path` consistent with the speaker used in training:

    python tts.py --model_type sft
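For custom recordings rather than LibriSpeech, records in the `file_name`/`text` format shown in the data-generation step could be assembled along these lines. This is a sketch, not the repository's `prepare_sft_dataset.py`: the helper names are hypothetical, and writing the records as a top-level JSON list is an assumption, since the document shows only a single record.

```python
import json
from pathlib import Path


def build_sft_records(pairs):
    """Turn (wav_path, transcript) pairs into records with the documented keys."""
    records = []
    for wav_path, transcript in pairs:
        transcript = transcript.strip()
        if not transcript:
            continue  # skip clips that have no usable transcript
        records.append({"file_name": str(wav_path), "text": transcript})
    return records


def write_sft_json(records, out_path):
    """Write records as a JSON list (assumed layout) and return the output path."""
    out = Path(out_path)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8")
    return out


if __name__ == "__main__":
    # Hypothetical clips; replace with real paths and transcripts.
    demo = build_sft_records([
        ("my_voice/clip_001.wav", "Welcome back to the show."),
        ("my_voice/clip_002.wav", "   "),  # dropped: empty transcript
    ])
    print(json.dumps(demo, indent=2))
```

The demo prints instead of writing to `data/tts_sft_data.json`, so it cannot overwrite a file produced by the project's own pipeline.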

Deployment via API

Muyan-TTS supports API deployment for easy integration into applications. The steps are as follows:

- Start the API service

Run the following command to start the service. The default port is 8020:

    python api.py --model_type base

Service logs are written to `logs/llm.log`.

- Send a request

Use the following Python code to send a request:

    import requests

    payload = {
        "ref_wav_path": "assets/Claire.wav",
        "prompt_text": "Although the campaign was not a complete success, it did provide Napoleon with valuable experience and prestige.",
        "text": "Welcome to the captivating world of podcasts, let's embark on this exciting journey together.",
        "temperature": 0.6,
        "speed": 1.0,
    }
    url = "http://localhost:8020/get_tts"

    response = requests.post(url, json=payload)
    # Stop on HTTP errors so an error body is never saved as audio.
    response.raise_for_status()

    # Save the returned audio bytes.
    with open("logs/tts.wav", "wb") as f:
        f.write(response.content)
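For a longer podcast script, one option is to send one request per paragraph. The sketch below only builds the payloads, reusing the field names from the request example above. Splitting on blank lines and the helper name `build_payloads` are illustrative assumptions; how the service handles long inputs is not described here.

```python
def build_payloads(script, ref_wav_path, prompt_text, temperature=0.6, speed=1.0):
    """Build one request payload per non-empty paragraph of a script."""
    # Paragraphs are assumed to be separated by blank lines.
    paragraphs = [p.strip() for p in script.split("\n\n") if p.strip()]
    return [
        {
            "ref_wav_path": ref_wav_path,
            "prompt_text": prompt_text,
            "text": paragraph,
            "temperature": temperature,
            "speed": speed,
        }
        for paragraph in paragraphs
    ]


if __name__ == "__main__":
    script = "Welcome to the show.\n\nToday we talk about speech synthesis."
    for payload in build_payloads(script, "assets/Claire.wav", "reference prompt text"):
        print(payload["text"])
```

Each payload can then be posted to the `/get_tts` endpoint in the same way as the single request, with each response saved to its own file.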

Caveats

- Hardware requirements: An NVIDIA A100 (40GB) or equivalent GPU is recommended for inference.

- Language restriction: Because the training data is predominantly English, only English input is currently supported.

- Data quality: The speech quality of the SFT model relies on the clarity and consistency of the training data.

- Training costs: The total cost of pre-training was approximately $50,000, including data processing ($30,000), LLM pre-training ($19,200), and decoder training ($1,340).
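The cost breakdown can be checked with a few lines of arithmetic. The sketch below takes the decoder training cost as $1,340, which is an assumed reading: it is the value consistent with a total of roughly $50,000. The names `COSTS_USD` and `cost_breakdown` are illustrative.

```python
# Cost figures in USD. The decoder value is an assumed reading ($1,340).
COSTS_USD = {
    "data_processing": 30_000,
    "llm_pretraining": 19_200,
    "decoder_training": 1_340,
}


def cost_breakdown(costs):
    """Return the total and each item's share of it as a percentage."""
    total = sum(costs.values())
    shares = {name: 100 * amount / total for name, amount in costs.items()}
    return total, shares


if __name__ == "__main__":
    total, shares = cost_breakdown(COSTS_USD)
    print(f"Total: ${total:,}")
    for name, pct in shares.items():
        print(f"{name}: {pct:.1f}%")
```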

Application Scenarios

- Podcast content creation

Muyan-TTS quickly converts podcast scripts into natural speech, letting independent creators produce high-quality audio. Users can generate podcast-style speech by simply entering text and a reference voice, which reduces recording costs.

- Audiobook production

With the SFT model, creators can customize the voices of specific characters to generate audiobook chapters. The model supports long audio generation, which makes it suitable for long-form content.

- Voice assistant development

Developers can integrate Muyan-TTS into voice assistants via the API to provide a natural, personalized voice interaction experience.

- Educational content generation

Schools and training organizations can convert teaching materials into speech for listening exercises or course explanations, which is useful for language learners and visually impaired users.

FAQ

- What languages does Muyan-TTS support?

Currently only English is supported, because the training data is dominated by English podcast audio. Other languages could be supported in the future by expanding the dataset.

- How can I improve the speech quality of SFT models?

Use high-quality, clear single-speaker speech data and avoid background noise. Make sure the training data matches the speaking style of the target scenario.

- What if inference is slow?

Make sure you are using a GPU environment that supports vLLM acceleration. Use `nvidia-smi` to check GPU memory usage and confirm that the model is loaded onto the GPU.

- Does it support commercial use?

Muyan-TTS is distributed under the Apache 2.0 license, which permits commercial use subject to the terms of the license.

- How do I get technical support?

Submit an issue on GitHub (Issues) or join the Discord community (Discord) to get support.