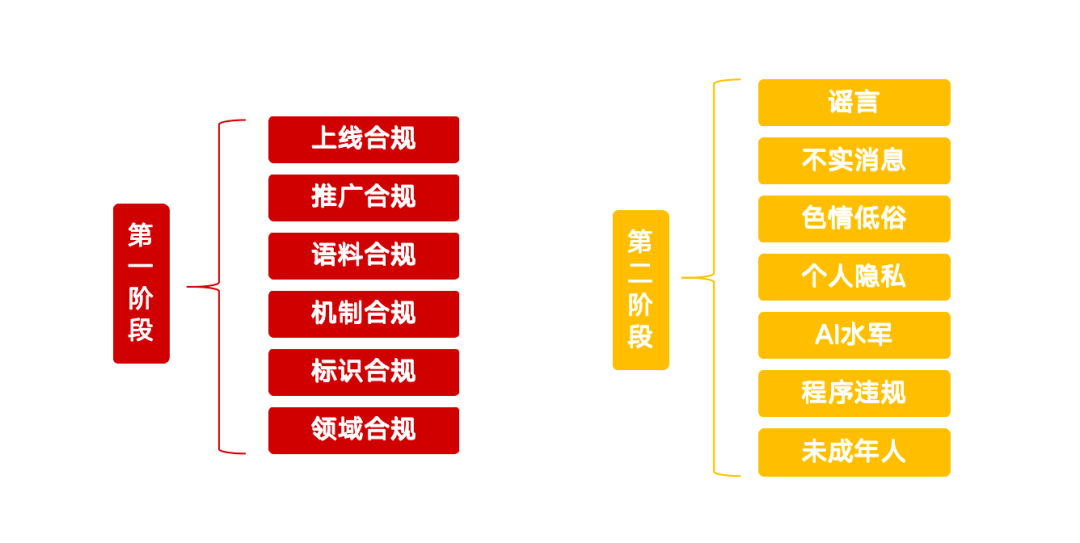

Recently, the Office of the Central Cyberspace Affairs Commission (CAC) launched a special campaign called "Qinglang: Rectifying the Abuse of AI Technology", which draws clear red lines for a range of problems that have emerged in the development of artificial intelligence. The initiative aims to guide the healthy development of AI technology and prevent potential risks. The campaign focuses on 13 key areas and is divided into two phases, with detailed requirements for AI products, services, content, and conduct.

Phase I: Source Governance and Infrastructure

The first phase focuses on governing AI technology at the source. Its goals are to clean up non-compliant AI applications, strengthen the management of content labeling, and improve platforms' ability to detect and identify forged content.

Product compliance: non-compliant AI products must complete compliance procedures before going online

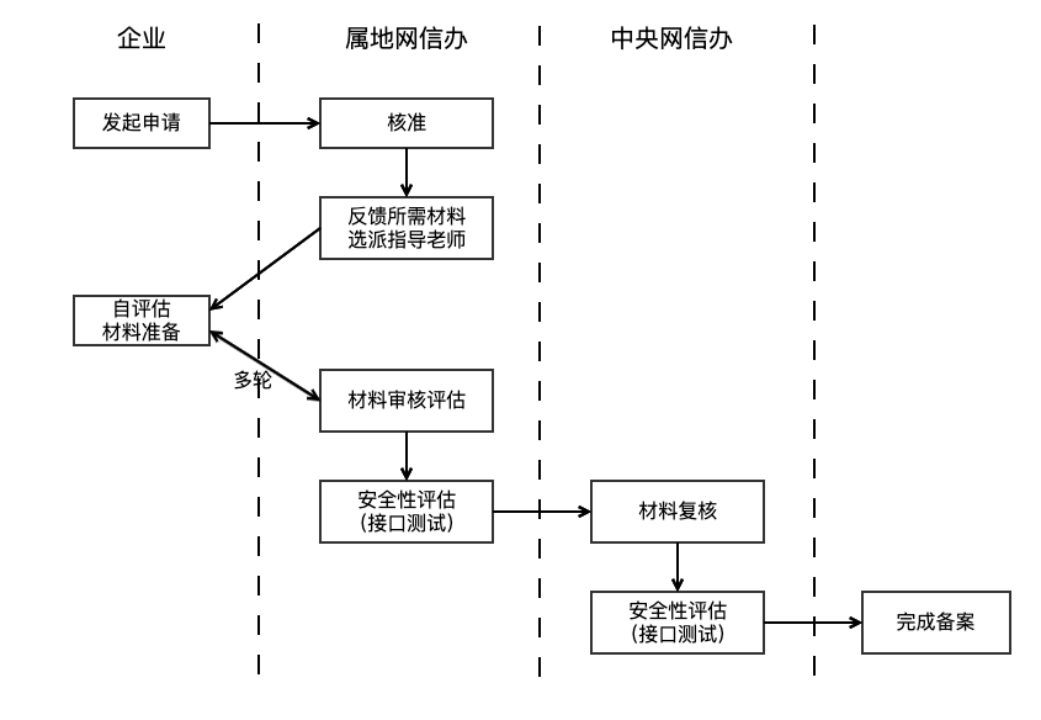

The regulator noted that applications using generative AI technology to provide services to the public must complete the large-model filing or registration process. This requirement is based on Article 17 of the Interim Measures for the Administration of Generative Artificial Intelligence Services (hereinafter the Interim Measures), which makes it clear that AI products must pass a security assessment before they are officially launched. Filing typically takes three to six months. In addition, it is illegal to provide unlawful and unethical functions such as "one-click undressing", or to clone or edit other people's biometric features (e.g., voices and faces) without authorization.

Identifying and regulating unfiled AI services remains a challenge. Regulators may use technical means such as web crawlers to monitor platforms, checking whether a service calls large-model APIs (e.g., dialogue generation, image synthesis) and examining its technical characteristics. As for the compliance of functional design, beyond companies' internal legal reviews and app store reviews, oversight of services distributed through informal channels currently relies mainly on user complaints and reports. Service providers should check for themselves whether their AI products have completed dual filing (large-model filing and algorithm filing) and ensure that their functional design complies with the rules.

Promotion compliance: cracking down on tutorials and merchandise for non-compliant AI products

The campaign also covers the promotion of AI products. Its targets include tutorials that teach people to use non-compliant AI products to create face-swapped or voice-changed videos, the sale of non-compliant products such as "voice synthesizers" and "face-swapping tools", and the marketing and hyping of non-compliant AI products. Promoters are responsible for verifying that an AI product is compliant before they promote it. Platforms need to strengthen content review: beyond ensuring that the content itself is legal, they must be able to identify and assess the compliance of the AI products promoted in that content, for example through initial screening by semantic models, supplemented by manual review.

Corpus compliance: emphasis on training data sources and management

Compliant training data is the foundation of AI model security. Article 7 of the Interim Measures emphasizes that service providers should use data and foundation models from legitimate sources. The Basic Requirements for the Safety of Generative AI Services (hereinafter the Basic Requirements) go further, stipulating that if a corpus source contains more than 5% illegal or undesirable information, that source should not be collected. They also set out clear provisions on the diversity of data sources, compliance with open-source licenses, record-keeping for self-collected data, and the legal procedures for purchasing commercial data.
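The 5% rule can be checked on a sample drawn from each candidate source. The following is a minimal sketch, not an official procedure: the function name `source_is_usable` and the `is_illegal` detector are hypothetical, and how samples are drawn and judged is left to the implementer.

```python
ILLEGAL_RATIO_LIMIT = 0.05  # the 5% threshold cited in the text


def source_is_usable(samples, is_illegal, limit=ILLEGAL_RATIO_LIMIT):
    """Return (usable, ratio) for one corpus source.

    `samples` is a list of documents sampled from the source and
    `is_illegal` is any callable that flags a document (hypothetical).
    A source is rejected when the flagged share exceeds `limit`.
    """
    if not samples:
        raise ValueError("need at least one sample to estimate the ratio")
    flagged = sum(1 for doc in samples if is_illegal(doc))
    ratio = flagged / len(samples)
    return ratio <= limit, ratio


# Example: 6 flagged documents out of 100 sampled exceeds the limit.
sample = ["bad"] * 6 + ["ok"] * 94
usable, ratio = source_is_usable(sample, lambda doc: doc == "bad")
```

In this example the flagged share is 6%, so the source would be excluded.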

Because of data privacy and technical confidentiality, training corpora are difficult to regulate, and oversight usually takes the form of inquiries and documentary evidence. Enterprises also face major challenges in managing huge volumes of training data with complex sources and uneven quality. The Basic Requirements recommend that enterprises combine keyword filtering, classification-model screening, and manual sampling to remove illegal and undesirable information from the corpus.
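The three cleaning steps named above can be chained as follows. This is an illustrative sketch under stated assumptions: `clean_corpus`, the score threshold, and the review rate are all hypothetical, and `classifier_score` stands in for whatever classification model an enterprise actually uses.

```python
import random


def clean_corpus(docs, blocked_keywords, classifier_score,
                 score_threshold=0.5, review_rate=0.01, seed=0):
    """Keyword filter, then classifier screen, then a manual-review sample.

    `blocked_keywords` must be lowercase. `classifier_score` is a
    hypothetical callable returning a risk score in [0, 1].
    """
    kept, dropped = [], []
    for doc in docs:
        text = doc.lower()
        if any(keyword in text for keyword in blocked_keywords):
            dropped.append((doc, "keyword"))
        elif classifier_score(doc) >= score_threshold:
            dropped.append((doc, "classifier"))
        else:
            kept.append(doc)

    # Draw a random sample of surviving documents for human spot checks.
    rng = random.Random(seed)
    n_review = min(len(kept), max(1, round(len(kept) * review_rate)))
    review_sample = rng.sample(kept, n_review)
    return kept, dropped, review_sample


docs = ["hello world", "buy BADWORD now", "toxic text"]
kept, dropped, review_sample = clean_corpus(
    docs,
    blocked_keywords={"badword"},
    classifier_score=lambda d: 0.9 if "toxic" in d else 0.1,
)
```

Recording the reason each document was dropped makes it easier to produce the documentary evidence that regulators may request.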

Mechanism compliance: enhanced security management measures

Enterprises need to establish security mechanisms, such as content review and intent recognition, that are appropriate to the scale of their business, along with an effective process for handling violating accounts and a regular security self-assessment system. Social platforms need to exercise caution and apply strict controls to services such as AI auto-replies that are connected through APIs. Chapter 7 of the Basic Requirements requires service providers to monitor user input and to take measures such as restricting service for users who repeatedly input illegal information. In addition, companies should have supervisory staff commensurate with the scale of their services, responsible for tracking policy and analyzing complaints in order to improve content quality and safety. A sound risk-control mechanism is a prerequisite for launching an AI product.
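One way to restrict users who repeatedly submit illegal input is a sliding-window counter per user. The sketch below is an assumption-laden illustration: the class name `ViolationTracker` and the default thresholds (three violations within 24 hours) are hypothetical and are not taken from the Basic Requirements.

```python
from collections import defaultdict, deque


class ViolationTracker:
    """Flag users whose violations within a time window reach a limit."""

    def __init__(self, max_violations=3, window_seconds=86400):
        self.max_violations = max_violations    # hypothetical threshold
        self.window_seconds = window_seconds    # hypothetical window
        self._events = defaultdict(deque)       # user_id -> violation times

    def record(self, user_id, violated, now):
        """Record one input; return True if the user should be restricted."""
        events = self._events[user_id]
        if violated:
            events.append(now)
        # Forget violations that have fallen out of the window.
        while events and now - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.max_violations


tracker = ViolationTracker(max_violations=3, window_seconds=100)
results = [
    tracker.record("u1", True, now=0),
    tracker.record("u1", True, now=10),
    tracker.record("u1", True, now=20),    # third violation inside the window
    tracker.record("u1", False, now=200),  # earlier violations have expired
]
```

Passing `now` explicitly keeps the logic easy to test; a production system would typically supply the current timestamp and persist the counters.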

Labeling Compliance: Implementing Labeling Requirements for AI-Generated Content

To enhance transparency, the Measures for Labeling AI-Generated Synthetic Content (hereinafter the Labeling Measures) require service providers to add explicit and implicit labels to deep-synthesis content, and to ensure that, when users download or copy a file, the explicit label is included in the file and the implicit label is written to its metadata. The Labeling Measures are accompanied by the national standard "Cybersecurity Technology: Labeling Method for Content Generated by Artificial Intelligence" and take effect on September 1, 2025. Enterprises are required to run self-checks against these rules, deploy technical means to detect AI-generated content on their platforms, and display alerts for suspected content. Regulators may also step up user education to improve the public's ability to recognize AI-generated content.
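The difference between the two label types can be illustrated with a small sketch for text content. Everything here is hypothetical: the visible notice string, the `aigc` metadata key, and its fields are placeholders and are not the field names defined by the national standard.

```python
from dataclasses import dataclass, field

EXPLICIT_NOTICE = "[AI-generated]"  # placeholder for a user-visible label


@dataclass
class GeneratedText:
    body: str
    metadata: dict = field(default_factory=dict)


def add_labels(content, provider, content_id):
    """Return a copy carrying an explicit notice and an implicit metadata label."""
    body = content.body
    if not body.startswith(EXPLICIT_NOTICE):
        body = f"{EXPLICIT_NOTICE} {body}"
    # Hypothetical metadata layout; a real system would follow the standard.
    implicit = {"generated": True, "provider": provider, "content_id": content_id}
    return GeneratedText(body, {**content.metadata, "aigc": implicit})


def is_labeled(content):
    """Self-check: both the explicit and the implicit label must be present."""
    has_explicit = content.body.startswith(EXPLICIT_NOTICE)
    has_implicit = bool(content.metadata.get("aigc", {}).get("generated"))
    return has_explicit and has_implicit


raw = GeneratedText("Sample answer.")
labeled = add_labels(raw, provider="example-provider", content_id="demo-001")
```

A check like `is_labeled` could run at export time so that downloaded or copied files never leave the platform without both labels.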

Domain Compliance: Focus on Security Risks in Key Industries

Filed AI products that provide Q&A services in key areas such as healthcare, finance, and services for minors must put in place industry-specific security review and control measures, in order to prevent "AI prescribing", inducements to invest, and the use of "AI hallucinations" to mislead users. Compliance governance in these areas is complex and fragmented, and it requires domain expertise and specialized compliance corpora. Industry regulators and leading companies will play a key role in setting standards and ensuring that security measures are implemented. General-purpose safeguards include hallucination detection and manual approval of key operations, which help guard against AI systems getting out of control.
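The two general-purpose safeguards mentioned above can be combined into a simple release gate. This is a sketch only: the intent labels in `KEY_INTENTS` and the `grounded` flag (the outcome of some upstream hallucination check) are assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical intent labels that always require human sign-off.
KEY_INTENTS = {"prescription", "investment_advice"}


@dataclass
class Draft:
    intent: str     # label assigned by an upstream intent classifier (assumed)
    text: str
    grounded: bool  # result of an upstream hallucination check (assumed)


def route(draft):
    """Decide whether a draft answer is released, reviewed, or blocked."""
    if not draft.grounded:
        return "block"          # failed the hallucination check
    if draft.intent in KEY_INTENTS:
        return "human_review"   # key operation: manual approval required
    return "release"


decisions = [
    route(Draft("prescription", "Take drug X twice daily.", grounded=True)),
    route(Draft("general_info", "Sleep helps recovery.", grounded=True)),
    route(Draft("general_info", "Unverified claim.", grounded=False)),
]
```

Checking groundedness first means an ungrounded answer is never queued for approval, which keeps the human review load focused on key operations.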

Phase II: Cracking down on the use of AI for illegal and non-compliant activities

The second phase of the campaign will focus on the use of AI technology to produce and disseminate rumors, inaccurate information, and pornographic or vulgar content, as well as to impersonate others and run paid-poster ("water army") operations.

Combating the use of AI to create and publish rumors

The scope of the crackdown includes fabricating rumors about current affairs and people's livelihoods out of thin air, maliciously misinterpreting policies, inventing details about emergencies, posing as official sources to release information, and exploiting AI cognitive biases to mislead maliciously. China's Internet Joint Rumor Refutation Platform has been in operation for a long time, but in the age of AI the lower cost of producing rumors and their greater realism pose new governance challenges. Regulation relies mainly on public opinion monitoring and user reports, and penalties for AI-generated rumors in key areas may be increased. Service providers need to improve their ability to detect AI-generated rumors, for example by using AI tools to verify content accuracy, monitoring abnormal account behavior, and manually spot-checking trending content.

Clean up the use of AI to produce and publish inaccurate information

Such behavior includes splicing and editing unrelated images, text, and videos into content that mixes fact and fiction, blurring or altering factual elements, recycling old news, publishing exaggerated or pseudo-scientific content about specialized fields, and spreading superstition through AI fortune-telling and divination. Compared with rumors, inaccurate information may involve less subjective malice, but its concealed nature and potential impact should not be underestimated. Governance relies mainly on content platforms strengthening their content ecosystems: identifying low-quality content through technical means and restricting its distribution or penalizing the content and its creators.

Rectify the use of AI to produce and distribute pornographic and vulgar content

The crackdown targets the use of AI to generate pornographic or indecent images and videos, soft pornography, sexualized anime-style images, gory, violent, horrific, or grotesque images, and erotic fiction. While AI technology improves the efficiency of content production, it can also be misused to create such undesirable content. Traditional review methods, such as keyword lists, semantic models, and image classification ("pornography detection models"), are relatively mature for identifying user-generated content (UGC). However, the feature distribution of AI-generated content may differ from that of UGC, so service providers need to keep their models' training data up to date to maintain recognition accuracy.

Investigating and dealing with the use of AI to impersonate others to commit infringement offenses

Key targets include impersonating public figures through AI face-swapping, voice cloning, and other deepfake techniques for deception or profit; parodying and smearing public or historical figures; using AI to impersonate family members and friends in fraud schemes; and inappropriately using AI to "resurrect the dead" or misusing information about the deceased. Such risks stem from the leakage and misuse of personal data. It is important to raise public awareness of privacy protection and, in the long run, to establish a data traceability mechanism that keeps the entire data chain compliant.

Countering the use of AI for paid-poster ("water army") operations

The "AI water army" is a new variant of the online water army, that is, networks of paid posters. The campaign will crack down severely on using AI to register and cultivate social media accounts in bulk, using AI content farms or AI article spinning to mass-produce low-quality, homogeneous content to inflate traffic, and using AI group-control software and social bots to inflate engagement metrics and manipulate comments. Governance needs to address both content and accounts: detecting low-quality and AI-generated content (AIGC) on the platform, monitoring the behavioral characteristics of accounts that publish AIGC, and intervening quickly and automatically so as to raise the cost of wrongdoing for the water army.

Regulating the behavior of AI product services and applications

The scope of the crackdown also includes producing and distributing counterfeit or wrapper ("shell") AI websites and applications, AI applications that provide non-compliant functions (e.g., writing tools that offer to expand trending searches and trending lists into articles), AI social chat software that provides vulgar or soft-pornographic dialogue services, and the sale and promotion of non-compliant AI applications, services, or courses to drive traffic. This again emphasizes compliance in launching and distributing AI products, and adds "shell" products and illegal functions to the scope. Service providers should stay true to the original purpose of technology, which is to serve people.

Protecting minors' rights and interests from AI-related harm

Another focus of the campaign is AI applications that induce addiction in minors, and content harmful to minors' physical and mental health that persists even in minors' mode. AI models are probabilistic, so the content they generate is unpredictable and may convey misguided values, posing a potential risk to minors' development. The use of AI in educational settings for minors therefore requires extra caution and strict control over the scenarios in which it is used. In other products that minors use frequently, minors' mode should be strengthened and access to AI features should be restricted.