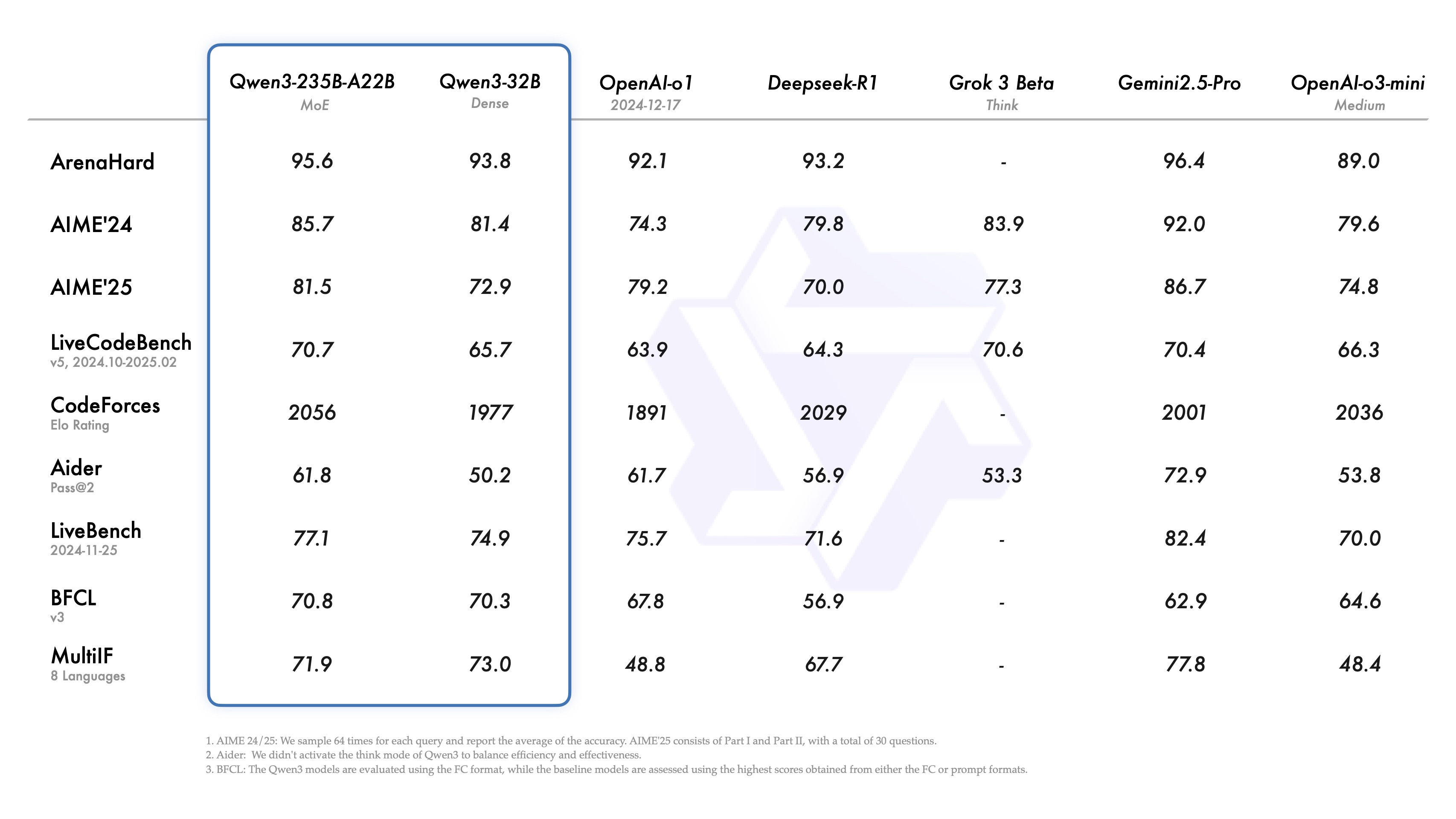

The field of large language models has a new member. The Qwen family recently released its latest generation, Qwen3. According to the development team, the flagship model, Qwen3-235B-A22B, achieves competitive results in coding, math, and general-capability benchmarks against top-tier models such as DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro. The choice of these competitors signals that Qwen3 is positioned to compete directly with the current state of the art.

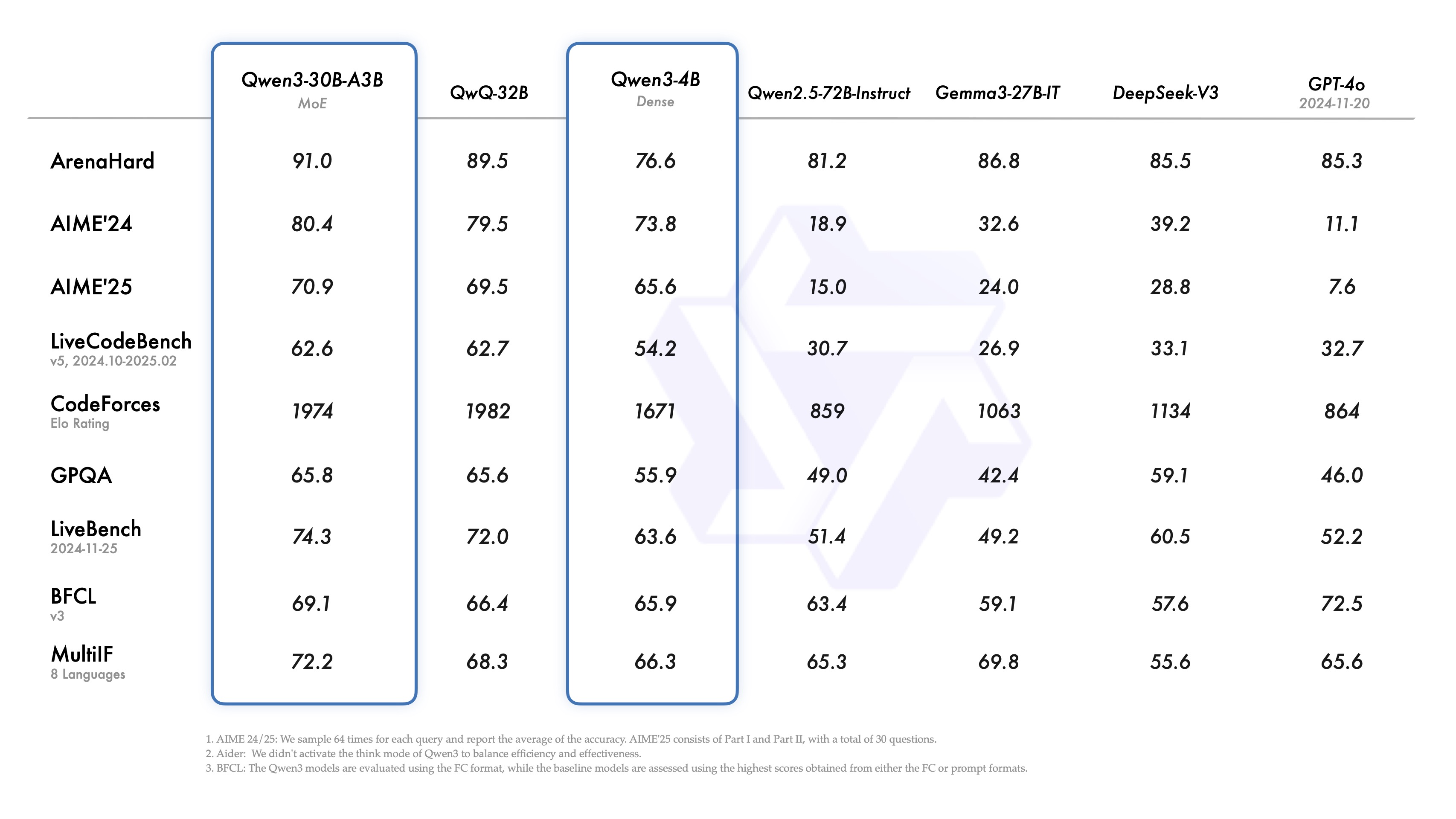

Notably, the small mixture-of-experts (MoE) model Qwen3-30B-A3B is claimed to outperform QwQ-32B even though it activates only about one-tenth as many parameters, and even the small Qwen3-4B model is claimed to match the performance of Qwen2.5-72B-Instruct. This suggests a significant efficiency gain from the optimized model architecture and improved training methods.

A highlight of the release is the open weights of two MoE models: Qwen3-235B-A22B (235 billion total parameters, 22 billion activated) and Qwen3-30B-A3B (30 billion total parameters, 3 billion activated). MoE is a technique that lets a model activate only a portion of its "expert" networks during inference, significantly reducing computational requirements while maintaining strong performance. This matters for deploying large models in a wider range of scenarios.
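To make the idea of activating only a subset of experts concrete, here is a minimal pure-Python sketch of top-k routing. It is an illustration only: the scalar "experts", the random router scores, and the function names `top_k_route` and `moe_layer` are assumptions made for this example, and the article does not describe Qwen3's actual router. Only the counts (128 experts, 8 active) come from the Qwen3 MoE specifications.

```python
import math
import random


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    # Indices of the k largest router scores.
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected scores only (subtract the max for numerical stability).
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x, experts, router_logits, k):
    """Weighted sum of the k selected experts; the other experts are never evaluated."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(router_logits, k))


# Toy setup: 128 scalar "experts" with 8 active per token.
rng = random.Random(0)
experts = [lambda x, scale=rng.uniform(0.5, 1.5): scale * x for _ in range(128)]
router_logits = [rng.gauss(0.0, 1.0) for _ in range(128)]

routes = top_k_route(router_logits, k=8)
output = moe_layer(2.0, experts, router_logits, k=8)
print(f"active experts: {len(routes)} of {len(experts)}")
```

Because only 8 of the 128 expert functions are called per input, compute per token scales with the activated parameters rather than the total, which is the saving the paragraph above describes.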

In addition, the team has released the weights of six dense models under the Apache 2.0 license, ranging from 600 million to 32 billion parameters: Qwen3-32B, Qwen3-14B, Qwen3-8B, Qwen3-4B, Qwen3-1.7B, and Qwen3-0.6B.

Overview of model specifications (dense models):

| Models | Layers | Heads (Q / KV) | Tie Embedding | Context Length |
|---|---|---|---|---|
| Qwen3-0.6B | 28 | 16 / 8 | Yes | 32K |
| Qwen3-1.7B | 28 | 16 / 8 | Yes | 32K |
| Qwen3-4B | 36 | 32 / 8 | Yes | 32K |
| Qwen3-8B | 36 | 32 / 8 | No | 128K |
| Qwen3-14B | 40 | 40 / 8 | No | 128K |
| Qwen3-32B | 64 | 64 / 8 | No | 128K |
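The "Heads (Q / KV)" column implies that several query heads share each key/value head, an arrangement commonly called grouped-query attention. The short sketch below simply derives the sharing ratio from the table's numbers. The function name `queries_per_kv_head` is made up for this example, and the interpretation as grouped-query attention is an assumption, since the article does not name the attention scheme.

```python
# (query heads, key/value heads) copied from the dense-model table.
DENSE_HEADS = {
    "Qwen3-0.6B": (16, 8),
    "Qwen3-1.7B": (16, 8),
    "Qwen3-4B": (32, 8),
    "Qwen3-8B": (32, 8),
    "Qwen3-14B": (40, 8),
    "Qwen3-32B": (64, 8),
}


def queries_per_kv_head(q_heads, kv_heads):
    """Number of query heads that share one key/value head."""
    if q_heads % kv_heads != 0:
        raise ValueError("query heads must be a multiple of key/value heads")
    return q_heads // kv_heads


group_sizes = {name: queries_per_kv_head(q, kv) for name, (q, kv) in DENSE_HEADS.items()}
for name, size in group_sizes.items():
    print(f"{name}: {size} query heads per KV head")
```

Since the key/value cache grows with the number of KV heads, a larger sharing ratio means a smaller cache than a layout with one KV head per query head would need.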

Overview of model specifications (MoE models):

| Models | Layers | Heads (Q / KV) | # Experts (Total / Activated) | Context Length |
|---|---|---|---|---|
| Qwen3-30B-A3B | 48 | 32 / 4 | 128 / 8 | 128K |
| Qwen3-235B-A22B | 94 | 64 / 4 | 128 / 8 | 128K |
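As a quick check on how sparse these models are, the sketch below computes the share of parameters and the share of experts that are active per token, using only figures given in this article (total and activated parameter counts in billions, and expert counts from the table). The dictionary layout and the helper name `active_fractions` are illustrative choices.

```python
# Parameter counts are in billions, as stated in the article.
MOE_MODELS = {
    "Qwen3-30B-A3B": {"total_params_b": 30, "active_params_b": 3, "experts": 128, "experts_active": 8},
    "Qwen3-235B-A22B": {"total_params_b": 235, "active_params_b": 22, "experts": 128, "experts_active": 8},
}


def active_fractions(spec):
    """Return (share of parameters active, share of experts active) for one model."""
    return (
        spec["active_params_b"] / spec["total_params_b"],
        spec["experts_active"] / spec["experts"],
    )


fractions = {name: active_fractions(spec) for name, spec in MOE_MODELS.items()}
for name, (param_share, expert_share) in fractions.items():
    print(f"{name}: {param_share:.1%} of parameters, {expert_share:.2%} of experts active per token")
```

The parameter share comes out higher than the expert share, presumably because components outside the expert layers (such as attention and embeddings) run for every token.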

These models and their pre-trained versions (e.g., Qwen3-30B-A3B-Base) are already available on Hugging Face, ModelScope, Kaggle, and other major platforms. For deployment, frameworks such as SGLang and vLLM are recommended. For local use, tools such as Ollama, LMStudio, MLX, llama.cpp, and KTransformers provide easy access.

Developers can also try Qwen3 in the Qwen Chat web and mobile apps.

Core Feature Analysis

Qwen3 introduces several noteworthy features:

- Hybrid Thinking Modes. Qwen3 supports two problem-solving modes:

  - Thinking Mode. The model reasons step by step, shows its thought process, and then gives the final answer. This suits complex problems that require in-depth analysis.

  - Non-Thinking Mode. The model provides fast, near-instantaneous responses for scenarios where speed matters and the problem is relatively simple.

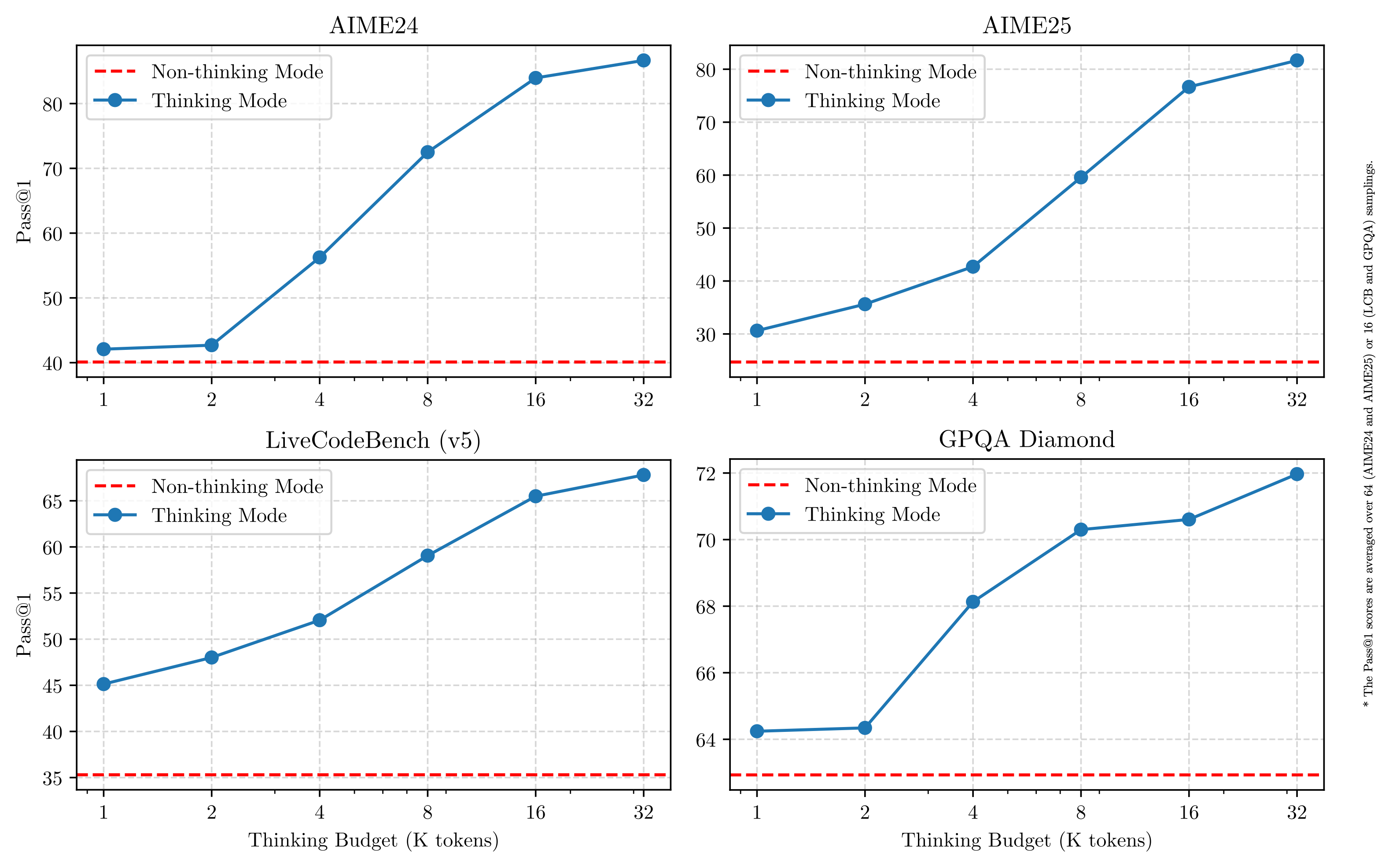

This design gives users the flexibility to control the model's "depth of thinking" according to the needs of the task. More importantly, the hybrid design allows stable and efficient control of the model's "thinking budget". As the accompanying figure shows, Qwen3's performance improves smoothly and scalably with the reasoning compute budget allocated. Users can therefore configure a budget per task more easily and find a better balance between cost and reasoning quality, which offers a new approach to managing the cost of large models in real-world applications.

- Extensive Multilingual Support. Qwen3 models support 119 languages and dialects. This broad multilingual capability opens up new possibilities for international applications and helps users worldwide take advantage of these models.

| Language Family | Languages & Dialects |
|---|---|
| Indo-European | English, French, Portuguese, German, Romanian, Swedish, Danish, Bulgarian, Russian, Czech, Greek, Ukrainian, Spanish, Dutch, Slovak, Croatian, Polish, Lithuanian, Norwegian Bokmål, Norwegian Nynorsk, Persian, Slovenian, Gujarati, Latvian, Italian, Occitan, Nepali, Marathi, Belarusian, Serbian, Luxembourgish, Venetian, Assamese, Welsh, Silesian, Asturian, Chhattisgarhi, Awadhi, Maithili, Bhojpuri, Sindhi, Irish, Faroese, Hindi, Punjabi, Bengali, Oriya, Tajik, Eastern Yiddish, Lombard, Ligurian, Sicilian, Friulian, Sardinian, Galician, Catalan, Icelandic, Tosk Albanian, Limburgish, Dari, Afrikaans, Macedonian, Sinhala, Urdu, Magahi, Bosnian, Armenian |
| Sino-Tibetan | Chinese (Simplified Chinese, Traditional Chinese, Cantonese), Burmese |
| Afro-Asiatic | Arabic (Standard, Najdi, Levantine, Egyptian, Moroccan, Mesopotamian, Ta'izzi-Adeni, Tunisian), Hebrew, Maltese |
| Austronesian | Indonesian, Malay, Tagalog, Cebuano, Javanese, Sundanese, Minangkabau, Balinese, Banjar, Pangasinan, Iloko, Waray (Philippines) |
| Dravidian | Tamil, Telugu, Kannada, Malayalam |
| Turkic | Turkish, North Azerbaijani, Northern Uzbek, Kazakh, Bashkir, Tatar |
| Tai-Kadai | Thai, Lao |
| Uralic | Finnish, Estonian, Hungarian |
| Austroasiatic | Vietnamese, Khmer |
| Other | Japanese, Korean, Georgian, Basque, Haitian, Papiamento, Kabuverdianu, Tok Pisin, Swahili |

- Enhanced Agentic Capabilities. The Qwen3 models are optimized for coding and for carrying out tasks as agents. The team has also strengthened support for MCP (Model Context Protocol). The video linked here shows how Qwen3 thinks and interacts with its environment, for example by invoking tools: https://qianwen-res.oss-accelerate-overseas.aliyuncs.com/mcp.mov

Training details: data, phases and optimization

Qwen3's pre-training dataset is significantly larger than that of Qwen2.5. Qwen2.5 was pre-trained on 18 trillion tokens, while Qwen3 uses nearly twice that amount, about 36 trillion tokens, covering 119 languages and dialects.

The data sources include not only web pages but also PDF-like documents. The team used the Qwen2.5-VL model to extract text from these documents and used Qwen2.5 to improve the quality of the extracted content. To increase the proportion of math and code data, synthetic data such as textbooks, question-answer pairs, and code snippets was also generated with Qwen2.5-Math and Qwen2.5-Coder.

The pre-training process is divided into three phases:

- Phase S1. The model is pre-trained on more than 30 trillion tokens with a context length of 4K tokens. This phase aims to give the model basic language skills and general knowledge.

- Phase S2. The dataset is refined by increasing the proportion of knowledge-intensive data (e.g., STEM, coding, and reasoning tasks), and pre-training continues on an additional 5 trillion tokens.

- Phase S3. High-quality long-context data is used to extend the model's context length to 32K tokens (and even 128K for some models), so that the model can handle long inputs efficiently.
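The three phases can be written down as a small schedule to check how the stated token counts add up. This is only a bookkeeping sketch: the `PretrainPhase` class and its field names are invented for this example, S1 is recorded at its stated lower bound, and fields are left as `None` wherever the article gives no figure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PretrainPhase:
    name: str
    tokens_trillions: Optional[float]  # None where the article gives no figure
    context_length: Optional[str]      # None where the article gives no figure
    focus: str


PHASES = [
    # "More than 30 trillion tokens": 30.0 is a lower bound, not an exact count.
    PretrainPhase("S1", 30.0, "4K", "basic language skills and general knowledge"),
    PretrainPhase("S2", 5.0, None, "knowledge-intensive data (STEM, coding, reasoning)"),
    PretrainPhase("S3", None, "32K", "high-quality long-context data"),
]

REPORTED_TOTAL_TRILLIONS = 36.0  # "about 36 trillion tokens"

known_total = sum(p.tokens_trillions for p in PHASES if p.tokens_trillions is not None)
unaccounted = REPORTED_TOTAL_TRILLIONS - known_total
print(f"S1 + S2 account for at least {known_total:.0f}T of roughly {REPORTED_TOTAL_TRILLIONS:.0f}T tokens")
```

The stated S1 and S2 figures already cover nearly all of the reported total, so the long-context phase appears to use a comparatively small share of the tokens, though the article does not give its exact size.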

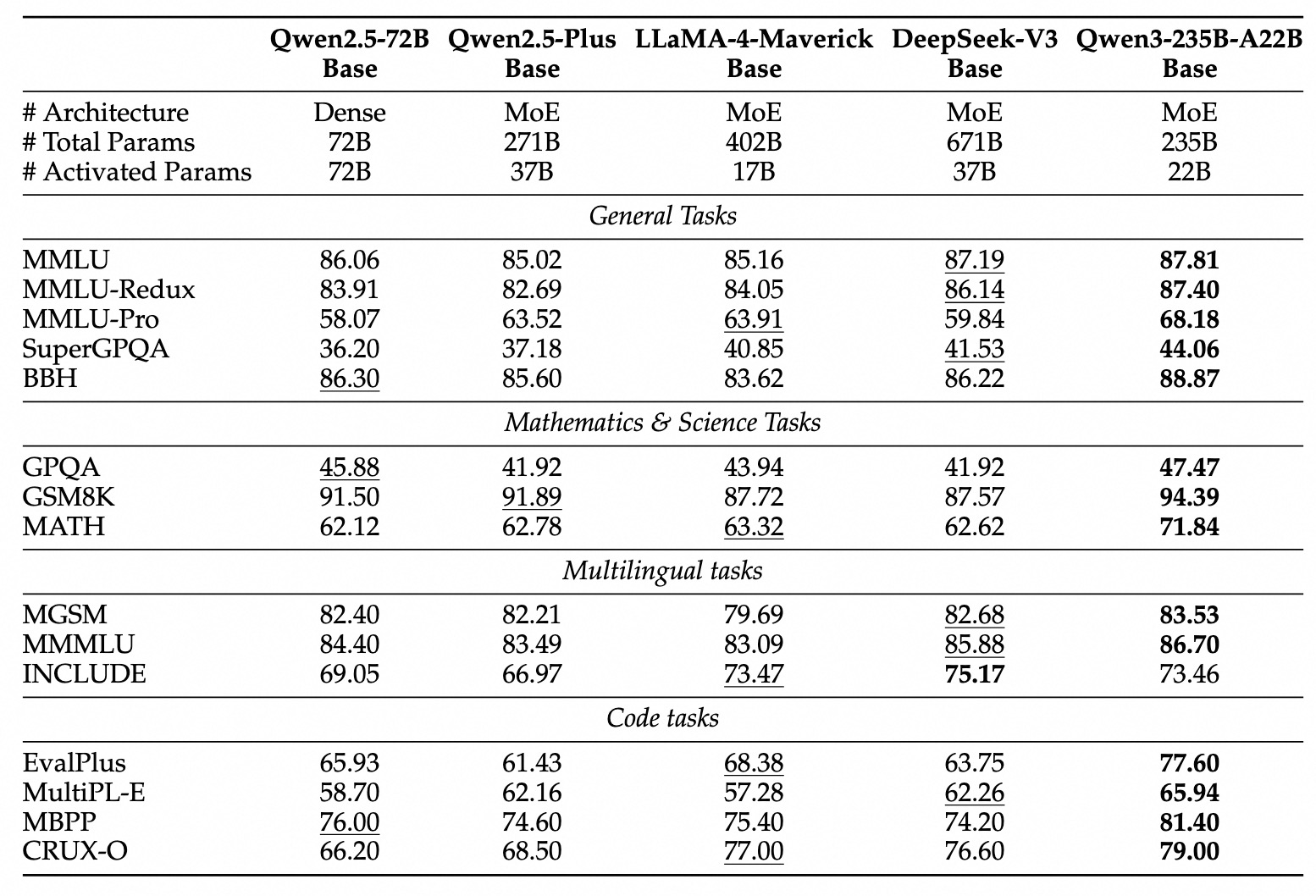

Thanks to the improved model architecture, the larger training dataset, and more efficient training methods, the Qwen3 dense base models match the overall performance of Qwen2.5 base models with many more parameters. For example, Qwen3-1.7B/4B/8B/14B/32B-Base perform comparably to Qwen2.5-3B/7B/14B/32B/72B-Base, respectively. In areas such as STEM, coding, and reasoning, the Qwen3 dense base models even outperform the larger Qwen2.5 models.

The Qwen3 MoE base models achieve performance similar to the Qwen2.5 dense base models while using only about 10% of the activated parameters, which implies significant savings in both training and inference costs.

Post-training process

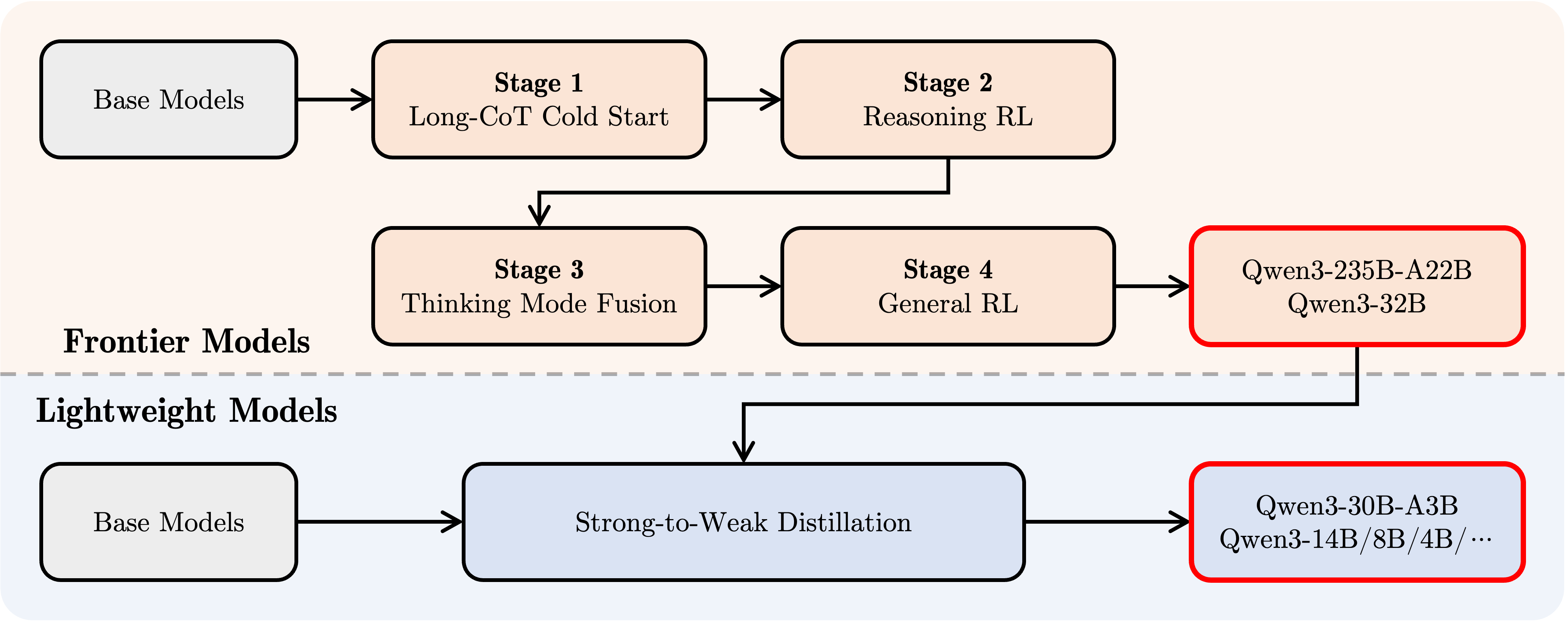

In order to develop mixed-mode models that combine stepwise reasoning with fast response capabilities, the Qwen team implemented a four-stage post-training process:

- Long CoT Cold Start. The models are fine-tuned on long chain-of-thought (CoT) data covering diverse tasks and domains such as math, coding, logical reasoning, and STEM, with the aim of building foundational reasoning skills.

- Reasoning-based RL. Compute for reinforcement learning is scaled up, and rule-based rewards are used to strengthen the model's exploration and exploitation, further improving reasoning performance.

- Thinking Mode Fusion. Non-thinking capabilities are integrated into the thinking model. Reasoning and rapid response are combined by fine-tuning on a mixture of long CoT data and general instruction-tuning data, both generated by the enhanced thinking model from the second stage.

- General Reinforcement Learning (General RL). Reinforcement learning is applied to more than 20 general-domain tasks to further strengthen the model's general capabilities (e.g., instruction following, format following, and agent capabilities) and to correct undesirable behaviors.

Development with Qwen3

Here is a brief guide to using Qwen3 in different frameworks. First is a standard example of using Qwen3-30B-A3B in the Hugging Face transformers library:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_name = "Qwen/Qwen3-30B-A3B"

    # Load the tokenizer and the model
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype="auto",
        device_map="auto"
    )

    # Prepare the model input
    prompt = "Give me a short introduction to large language models."
    messages = [
        {"role": "user", "content": prompt}
    ]

    # apply_chat_template builds the input in Qwen's chat format
    text = tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True,
        enable_thinking=True  # Switches between thinking and non-thinking modes; defaults to True
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Generate text
    generated_ids = model.generate(
        **model_inputs,
        max_new_tokens=32768  # Maximum number of tokens to generate
    )

    # Keep only the newly generated tokens (drop the prompt)
    output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

    # Parse the thinking content, if any, by locating the '</think>' token (id 151668)
    try:
        # Equivalent to rindex: search for 151668 from the end
        index = len(output_ids) - output_ids[::-1].index(151668)
    except ValueError:
        # No '</think>' token found, so there is no thinking content
        index = 0

    # Decode the thinking content and the final reply separately
    thinking_content = tokenizer.decode(output_ids[:index], skip_special_tokens=True).strip("\n")
    content = tokenizer.decode(output_ids[index:], skip_special_tokens=True).strip("\n")

    print("Thinking content:", thinking_content)
    print("Final reply:", content)
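The parsing step in the example above can be factored into a small helper that needs no model to try out. The sketch below reproduces the same logic (split at the last occurrence of the `</think>` token id, 151668) on plain lists of integers. The function name `split_thinking` is made up for this example, and the toy token ids in the usage lines are arbitrary.

```python
from typing import List, Tuple

END_THINK_TOKEN_ID = 151668  # id of '</think>' as used in the example above


def split_thinking(
    output_ids: List[int], end_think_id: int = END_THINK_TOKEN_ID
) -> Tuple[List[int], List[int]]:
    """Split generated token ids into (thinking_ids, answer_ids).

    The split happens just after the last '</think>' token, so the thinking
    part includes that token. If it never appears, everything is the answer.
    """
    for pos in range(len(output_ids) - 1, -1, -1):
        if output_ids[pos] == end_think_id:
            return output_ids[: pos + 1], output_ids[pos + 1:]
    return [], list(output_ids)


# Arbitrary toy ids: two "thinking" tokens, the end marker, two "answer" tokens.
thinking_ids, answer_ids = split_thinking([11, 12, END_THINK_TOKEN_ID, 21, 22])
print(thinking_ids, answer_ids)
```

Each half can then be passed to `tokenizer.decode(..., skip_special_tokens=True)` as in the example above.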

To disable thinking mode, set the enable_thinking parameter to False:

    text = tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True,
        enable_thinking=False  # Defaults to True
    )

For model deployment, endpoints compatible with the OpenAI API can be created using sglang>=0.4.6.post1 or vllm>=0.8.4:

- Using SGLang:

      python -m sglang.launch_server --model-path Qwen/Qwen3-30B-A3B --reasoning-parser qwen3

- Using vLLM:

      vllm serve Qwen/Qwen3-30B-A3B --enable-reasoning --reasoning-parser deepseek_r1

(Note: the original article uses the deepseek_r1 parser here. Developers should check its compatibility with Qwen3.)
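Once such a server is running, any OpenAI-compatible client can talk to it. The sketch below uses only the Python standard library to build a chat-completions request and to read the reply. The base URL `http://localhost:8000/v1` is an assumption (adjust host and port to your server), the helper names are invented for this example, and the `reasoning_content` field is read defensively because it is only expected when the server runs with a reasoning parser.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: change to match your deployment


def build_chat_request(prompt, model="Qwen/Qwen3-30B-A3B", max_tokens=1024):
    """Build the JSON body for an OpenAI-style /chat/completions call."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(payload, base_url=BASE_URL, api_key="EMPTY"):
    """POST the payload to the server and return the decoded JSON response."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def extract_reply(response_json):
    """Return (reasoning, answer); reasoning is None if the server did not separate it."""
    message = response_json["choices"][0]["message"]
    return message.get("reasoning_content"), message.get("content")


payload = build_chat_request("Give me a short introduction to large language models.")
print(json.dumps(payload, indent=2))
# With a server running:
# reasoning, answer = extract_reply(send_chat_request(payload))
```

Keeping request construction separate from the network call makes it easy to inspect the payload before sending it.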

For local development, Ollama lets you interact with the model through the simple command ollama run qwen3:30b-a3b. In addition, tools such as LMStudio, llama.cpp, and KTransformers support building and running Qwen3 locally.

Advanced Usage: Dynamic Switching of Thinking Modes

Qwen3 provides a "soft-switching" mechanism that lets the user control the model's behavior dynamically when enable_thinking=True. Specifically, you can add /think or /no_think to a user prompt or system message to switch the model's thinking mode from turn to turn. In a multi-turn conversation, the model follows the most recent instruction.

The following is an example of a multi-turn conversation:

    from transformers import AutoModelForCausalLM, AutoTokenizer


    class QwenChatbot:
        def __init__(self, model_name="Qwen/Qwen3-30B-A3B"):
            self.tokenizer = AutoTokenizer.from_pretrained(model_name)
            # Note: loading the model requires enough GPU memory;
            # device_map="auto" may need adjusting for your hardware
            self.model = AutoModelForCausalLM.from_pretrained(
                model_name,
                torch_dtype="auto",  # Pick a suitable precision automatically
                device_map="auto"  # Place the model on the available devices automatically
            )
            self.history = []  # Stores the conversation history

        def generate_response(self, user_input):
            # Append the current user input to the history
            messages = self.history + [{"role": "user", "content": user_input}]

            # Build the input with apply_chat_template.
            # enable_thinking is not set explicitly here, so the model uses its default
            # behavior or the most recent /think or /no_think instruction
            text = self.tokenizer.apply_chat_template(
                messages,
                tokenize=False,
                add_generation_prompt=True
            )
            inputs = self.tokenizer(text, return_tensors="pt").to(self.model.device)

            # Generate the reply and keep only the newly generated tokens
            response_ids = self.model.generate(
                **inputs,
                max_new_tokens=32768  # Maximum number of tokens to generate
            )[0][len(inputs.input_ids[0]):].tolist()

            # Decode the reply text
            response = self.tokenizer.decode(response_ids, skip_special_tokens=True).strip()

            # Update the conversation history.
            # Only the decoded plain-text reply is stored; thinking content is not handled.
            # To keep or display the thinking process, parse the output ids as in the earlier example
            self.history.append({"role": "user", "content": user_input})
            self.history.append({"role": "assistant", "content": response})

            return response


    # Example usage
    if __name__ == "__main__":
        # Run this on a machine with sufficient resources
        try:
            chatbot = QwenChatbot()

            # First input (thinking mode is enabled by default)
            user_input_1 = "How many times does the letter 'r' appear in the word strawberries?"
            print(f"User: {user_input_1}")
            response_1 = chatbot.generate_response(user_input_1)
            print(f"Assistant: {response_1}")
            print("----------------------")

            # Second input, using /no_think to disable thinking mode
            user_input_2 = "Then, how many times does 'r' appear in blueberries? /no_think"
            print(f"User: {user_input_2}")
            response_2 = chatbot.generate_response(user_input_2)
            print(f"Assistant: {response_2}")
            print("----------------------")

            # Third input, using /think to re-enable thinking mode
            user_input_3 = "Really? Please think again. /think"
            print(f"User: {user_input_3}")
            response_3 = chatbot.generate_response(user_input_3)
            print(f"Assistant: {response_3}")
        except Exception as e:
            print(f"Error while running: {e}")
            print("Make sure the required dependencies are installed and that you have enough compute resources (such as GPU memory).")
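The "most recent instruction wins" rule can be made concrete with a tiny helper that inspects a message history and reports which mode the latest /think or /no_think tag requests. This is purely illustrative: `resolve_thinking_mode` is a hypothetical function, not part of transformers or Qwen3, and in practice the model itself interprets the tags. The tie-break within a single message (the later tag wins) is also an assumption.

```python
def resolve_thinking_mode(messages, default=True):
    """Return True if the most recent /think or /no_think tag asks for thinking.

    Scans user and system messages from newest to oldest and falls back to
    `default` (thinking enabled) when no tag is present.
    """
    for message in reversed(messages):
        if message["role"] not in ("user", "system"):
            continue
        text = message["content"]
        think_at = text.rfind("/think")
        no_think_at = text.rfind("/no_think")
        if think_at == -1 and no_think_at == -1:
            continue  # no tag in this message; keep looking at older ones
        # Assumption: if both tags appear in one message, the later one wins.
        return think_at > no_think_at
    return default


history = [
    {"role": "user", "content": "How many times does 'r' appear in strawberries?"},
    {"role": "assistant", "content": "Three."},
    {"role": "user", "content": "And in blueberries? /no_think"},
]
print(resolve_thinking_mode(history))
```

A helper like this could be useful for logging or for deciding how to parse the output, since replies produced in non-thinking mode are not expected to carry reasoning text.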

Agent Usage: Tool Calling

Qwen3 excels at tool calling. The Qwen-Agent framework (https://github.com/QwenLM/Qwen-Agent) is recommended for taking full advantage of Qwen3's agent capabilities. Qwen-Agent encapsulates tool-calling templates and parsers internally, which reduces development complexity.

To define the set of available tools, you can use an MCP configuration file, use the tools integrated in Qwen-Agent, or integrate other tools yourself.

    import os

    from qwen_agent.agents import Assistant

    # Define the LLM configuration
    llm_cfg = {
        'model': 'Qwen3-30B-A3B',  # The Qwen3 model to use

        # To use Alibaba Cloud's DashScope API instead:
        # 'model_type': 'qwen_dashscope',
        # 'api_key': os.getenv('DASHSCOPE_API_KEY'),  # Requires the environment variable to be set

        # To use a custom OpenAI-compatible endpoint (for example, one served by vLLM or SGLang):
        'model_server': 'http://localhost:8000/v1',  # Replace with your API base URL
        'api_key': 'EMPTY',  # For local deployments or endpoints that need no key

        # Optional: other generation parameters
        # 'generate_cfg': {
        #     # Set to True if the raw output embeds the reasoning as <think>...</think> in the content
        #     # Set to False (or omit) if vLLM/SGLang already separates reasoning_content from content
        #     'thought_in_content': False,
        # },
    }

    # Define the list of tools
    tools = [
        {'mcpServers': {  # Point to an MCP configuration file or define the servers inline
            'time': {  # A tool named 'time'
                'command': 'uvx',  # Launched with uvx
                # Launch arguments: run mcp-server-time and set the time zone
                'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']
            },
            "fetch": {  # A tool named 'fetch' for retrieving web pages
                "command": "uvx",
                "args": ["mcp-server-fetch"]
            }
        }
        },
        'code_interpreter',  # Qwen-Agent's built-in code interpreter
        # Further custom or built-in tools can be added here
    ]

    # Initialize the agent
    # Make sure uvx and the relevant mcp-server-* packages are installed and configured
    bot = Assistant(llm=llm_cfg, function_list=tools)

    # Streaming generation example
    messages = [{'role': 'user', 'content': 'Visit https://qwenlm.github.io/blog/ and introduce the latest developments of Qwen.'}]

    responses = None  # Initialize the responses variable
    # bot.run returns a generator for streaming output
    for responses in bot.run(messages=messages):
        # Intermediate steps, such as tool calls and observations, can be handled here
        # print(responses)  # Print each intermediate step or the final reply
        pass

    # After the loop, responses holds the final, complete reply
    if responses:
        print(responses)
    else:
        print("No response was received.")

(Note: To run the Agent example, you need to install the qwen-agent library and related dependencies, and make sure that the uvx and mcp-server-* tools are available.)
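The agent example above prints the whole `responses` object. If only the final answer text is wanted, a small helper can pick it out. The sketch below assumes that `responses` is a list of message dictionaries with `role` and `content` keys and that `content` is a plain string. That shape is an assumption about Qwen-Agent's output, not something the article states, and `final_assistant_text` is a hypothetical helper.

```python
def final_assistant_text(responses):
    """Return the content of the last non-empty assistant message, or None.

    Assumes `responses` is a list of dicts with 'role' and 'content' keys.
    Messages with empty content (for example, pure tool calls) are skipped.
    """
    for message in reversed(responses or []):
        if message.get("role") == "assistant" and message.get("content"):
            return message["content"]
    return None


# Hand-written stand-in for the agent's output, used here instead of a live run.
sample_responses = [
    {"role": "assistant", "content": ""},
    {"role": "function", "content": "<html>...</html>"},
    {"role": "assistant", "content": "Here is a summary of the latest Qwen posts."},
]
print(final_assistant_text(sample_responses))
```

Returning `None` for an empty or missing result keeps the caller's "no response" branch explicit, mirroring the check at the end of the agent example.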

Future Outlook

Qwen3 is seen as an important milestone on the road to artificial general intelligence (AGI) and artificial superintelligence (ASI). By scaling up pre-training and reinforcement learning, the model reaches a higher level of intelligence. The integration of hybrid thinking modes gives users flexible control over the thinking budget, while extensive language support improves global accessibility.

Going forward, the Qwen team plans to improve its models along several dimensions: refining model architectures and training methods in order to scale data, increase model size, extend context length, broaden modality support, and advance reinforcement learning with environmental feedback for long-horizon reasoning. The team believes the industry is moving from an era of training models to an era of training agents, and says its next iteration promises to bring more meaningful advances to work and life.