General Introduction

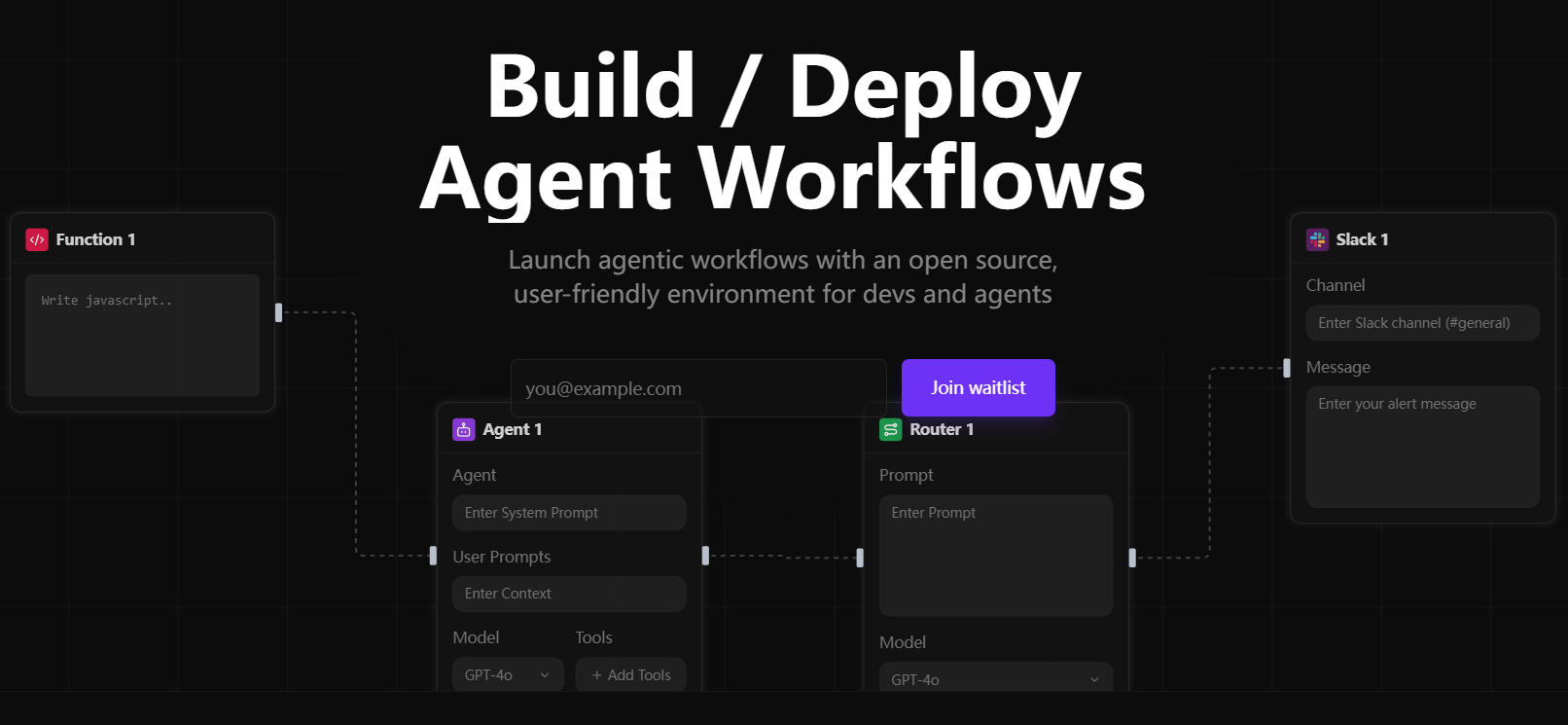

Sim Studio is an open-source platform for building AI agent workflows. It helps users quickly design, test, and deploy large language model (LLM) workflows through a lightweight, intuitive visual interface, so complex multi-agent applications can be assembled by drag and drop without deep programming knowledge. It supports both local and cloud models and integrates with a wide range of tools, such as Slack and databases. Sim Studio is modular in design and suits developers, researchers, and enterprise users. Both an official cloud-hosted version (https://simstudio.ai) and self-hosted options are available.

AI agent frameworks are flourishing these days, so why recommend Sim Studio in particular? Here is a comparison of several mainstream open-source AI agent frameworks:

| Framework | Core Paradigm | Key Strengths | Typical Use Cases |
|---|---|---|---|
| LangGraph | Graph-based agent workflows | Explicit graph control flow, branching, and debugging | Complex multi-step tasks, advanced error handling |
| OpenAI Agents SDK | OpenAI's agent toolchain | Built-in tools such as web search and file search | Teams invested in the OpenAI ecosystem |
| Smolagents | Minimal, code-centric agent loops | Simple setup, direct code execution | Quick task automation without complex orchestration |
| CrewAI | Multi-agent collaboration (crews) | Role-based parallel workflows with shared memory | Complex tasks that need several specialized agents to collaborate |
| AutoGen | Asynchronous multi-agent chat | Real-time dialogue, event-driven | Scenarios needing real-time, concurrent interaction among multiple LLM "voices" |
| Sim Studio | Visual workflow builder | Intuitive interface, rapid deployment, open-source flexibility | Rapid prototyping and production deployment |

Many low-code/no-code AI agent building platforms are also on the market today. Here is how they compare with Sim Studio:

| Platform | Features | Typical Use Cases | Pricing |
|---|---|---|---|
| Vertex AI Builder | Enterprise-grade no-code platform with complex APIs | Large-enterprise workflow automation | Paid |
| Beam AI | Horizontal platform with multiple pre-built agents | Cross-domain automation (compliance, customer service, etc.) | Paid |
| Microsoft Copilot Studio | Low-code, 1,200+ data connectors | Internal chatbots, order management | Paid |
| Lyzr Agent Studio | Modular, well suited to prototyping | Finance and HR automation | Paid |
| Sim Studio | Open source, visual interface, flexible deployment | The full path from prototype to production | Free and open source |

As the comparison shows, Sim Studio, despite being an open-source project, holds its own in functionality and flexibility, and it costs nothing to use.

Feature List

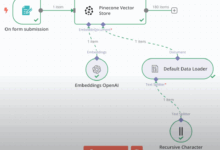

- Visual Workflow Editor: Design AI agent workflows with a drag-and-drop interface that supports conditional logic and multi-step tasks.

- Multi-Model Support: Works with both cloud LLMs and local LLMs, such as local models served through Ollama.

- Tool Integration: Connects to external tools such as Slack and databases to extend what agents can do.

- API Deployment: Generate a workflow API with one click for easy integration into other systems.

- Local Deployment: Supports self-hosting via Docker or manual installation for privacy-sensitive scenarios.

- Modular Extensibility: Lets users customize function blocks and tools for greater flexibility.

- Logging and Debugging: Provides detailed logs for optimizing workflows and troubleshooting errors.

- Development Container Support: Simplifies local development setup with VS Code development containers.

How to Use

Sim Studio's core strength is its lightweight, intuitive workflow builder. The sections below cover installation, the main features, and the featured capabilities in detail to help you get started quickly.

Installation

Sim Studio offers three self-hosting options: Docker (recommended), a development container, and manual installation. This guide focuses on Docker and manual installation; the development container option suits developers familiar with VS Code.

Option 1: Docker Installation (Recommended)

Docker provides a consistent runtime environment and suits most users. Install Docker and Docker Compose first.

- Clone the repository

Run in a terminal:

git clone https://github.com/simstudioai/sim.git
cd sim

- Configure environment variables

Copy the example environment file, then edit it:

cp sim/.env.example sim/.env

Set the following in the .env file:

- BETTER_AUTH_SECRET: a randomly generated secret used for authentication.

- RESEND_API_KEY: used for email verification; if it is not set, verification codes are printed to the console instead.

- Database settings: PostgreSQL is used by default; make sure the database service is running.

- OLLAMA_HOST: set to http://host.docker.internal:11434 if you are using a local model.
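If you prefer to script the .env edits instead of making them by hand, the sketch below shows one way to do it. It assumes a POSIX-like shell with head, od, and mktemp available; the helper names gen_secret and set_env_var are hypothetical and not part of Sim Studio. Only the variable names listed above come from the project; the database variable name is not given here, so check sim/.env.example for it.

```shell
#!/usr/bin/env bash
# Minimal sketch with hypothetical helpers (not part of Sim Studio)
# for filling in sim/.env after copying it from sim/.env.example.

# gen_secret: print 32 random bytes as 64 lowercase hex characters.
# -v stops od from collapsing repeated lines into "*".
gen_secret() {
  head -c 32 /dev/urandom | od -An -v -tx1 | tr -d ' \n'
}

# set_env_var FILE KEY VALUE: replace an existing KEY=... line, or append one.
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  # Copy every line except an existing assignment to KEY
  # (grep exits 1 when no lines remain, which is fine here).
  grep -v "^${key}=" "$file" > "$tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  # Replace the original file in one step
  mv "$tmp" "$file"
}

# Example usage (file path and variable names from the steps above):
# set_env_var sim/.env BETTER_AUTH_SECRET "$(gen_secret)"
# set_env_var sim/.env OLLAMA_HOST "http://host.docker.internal:11434"
```

Writing to a temporary file and moving it into place avoids leaving a half-edited .env if the script is interrupted. The moved file keeps mktemp's owner-only permissions, so run chmod 644 on it if another user or build step needs to read it.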

- Start the services

Run the following command:

docker compose up -d --build

or use the provided script:

./start_simstudio_docker.sh

Once the services have started, open http://localhost:3000/w/ to access the workflow interface.
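Because docker compose up -d returns as soon as the containers are launched, the web interface may take a while to become reachable. The hypothetical helper below (wait_for_sim, not part of Sim Studio) polls the URL with curl until it answers; it assumes curl is installed, and the retry count and 2-second interval are arbitrary choices.

```shell
#!/usr/bin/env bash
# Minimal sketch with a hypothetical helper (not part of Sim Studio).
# wait_for_sim [URL] [TRIES]: return 0 as soon as URL responds without an
# HTTP error, or 1 after TRIES failed attempts spaced 2 seconds apart.
wait_for_sim() {
  local url="${1:-http://localhost:3000}" tries="${2:-30}" i=0
  while :; do
    # -f: treat HTTP status >= 400 as failure; -s: no progress output
    if curl -fs -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    i=$((i + 1))
    # Give up without sleeping after the last attempt
    [ "$i" -ge "$tries" ] && return 1
    sleep 2
  done
}

# Example usage:
# wait_for_sim && echo "Sim Studio is up" || docker compose logs simstudio
```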

- Manage the services

- View logs:

docker compose logs -f simstudio

- Stop the services:

docker compose down

- Rebuild and restart (after updating the code):

docker compose up -d --build

- Use local models

If you need a local LLM (e.g., LLaMA), pull the model first:

./sim/scripts/ollama_docker.sh pull <model_name>

Start the services with local-model support:

./start_simstudio_docker.sh --local

Or choose a profile based on your hardware. With an NVIDIA GPU:

docker compose up --profile local-gpu -d --build

Without a GPU:

docker compose up --profile local-cpu -d --build
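If you script the startup, the choice between the two profiles above can be automated. This is a minimal sketch with a hypothetical choose_profile helper; it assumes that a working nvidia-smi indicates a usable NVIDIA GPU, which is only a heuristic. Docker also needs the NVIDIA Container Toolkit to pass the GPU through, and this check does not verify that.

```shell
#!/usr/bin/env bash
# Minimal sketch with a hypothetical helper (not part of Sim Studio).
# choose_profile: print "local-gpu" if nvidia-smi exists and runs
# successfully, otherwise "local-cpu". Heuristic only: it does not check
# whether Docker itself can access the GPU.
choose_profile() {
  if command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi >/dev/null 2>&1; then
    echo local-gpu
  else
    echo local-cpu
  fi
}

# Example usage:
# docker compose up --profile "$(choose_profile)" -d --build
```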

If you already run an Ollama instance, point Sim Studio at it by adding the following to the simstudio service in docker-compose.yml:

extra_hosts:
  - "host.docker.internal:host-gateway"
environment:
  - OLLAMA_HOST=http://host.docker.internal:11434
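Before editing docker-compose.yml, it can help to confirm that the existing Ollama instance actually answers. The sketch below calls Ollama's GET /api/tags endpoint, which lists locally available models; the helper name ollama_reachable is hypothetical. Run it on the host with http://localhost:11434, since host.docker.internal is intended for use from inside containers.

```shell
#!/usr/bin/env bash
# Minimal sketch with a hypothetical helper (not part of Sim Studio).
# ollama_reachable [BASE_URL]: succeed if Ollama's /api/tags endpoint answers.
# Falls back to $OLLAMA_HOST, then to http://localhost:11434.
ollama_reachable() {
  local host="${1:-${OLLAMA_HOST:-http://localhost:11434}}"
  # Drop a trailing slash so the URL does not end up as "//api/tags"
  host="${host%/}"
  curl -fs -o /dev/null --max-time 3 "$host/api/tags"
}

# Example usage (on the host machine):
# ollama_reachable http://localhost:11434 && echo "Ollama is up"
```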

Option 2: Manual Installation

Suitable for developers who want to customize their environment. Node.js, npm, and PostgreSQL must be installed.

- Clone the repository and install dependencies

git clone https://github.com/simstudioai/sim.git
cd sim/sim
npm install

- Configure the environment

Copy the example environment file, then edit it:

cp .env.example .env

Configure BETTER_AUTH_SECRET, the database connection, and other settings.

- Initialize the database

Push the database schema:

npx drizzle-kit push

- Start the development server

npm run dev

Visit http://localhost:3000.

Option 3: Development Container

- Install the Remote - Containers extension in VS Code (now published as "Dev Containers").

- Open the project directory and click "Reopen in Container".

- Run npm run dev or sim-start to start the service.

Main Features

At the heart of Sim Studio is the visual workflow editor. Its main operations are described below.

Creating Workflows

- Log in to Sim Studio (http://localhost:3000/w/).

- Click "New Workflow" to open the editor.

- Drag an Agent node onto the canvas and select an LLM (cloud or local model).

- Add a Tools node (e.g., Slack or a database) and configure its parameters.

- Use a Conditional Logic node to define branching.

- Connect the nodes and save the workflow.

Testing Workflows

- Click "Test" and enter sample input data.

- Review the output and logs to check how each node executed.

- Adjust nodes or logic as needed and test again.

Deploying Workflows

- Click "Deploy" and select "Generate API".

- Copy the API endpoint (e.g. http://localhost:3000/api/workflow/).

- Test the API:

curl -X POST http://localhost:3000/api/workflow/<id> -H "Content-Type: application/json" -d '{"input": "sample data"}'
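For repeated calls, or for input that contains quotes, it is safer to build the JSON body than to hand-edit it inside the curl command. The helpers below (json_payload and run_workflow, both hypothetical) reuse the endpoint path and {"input": ...} body from the example above. A real deployment may use a different path or require an API key, so treat both as assumptions and check the endpoint shown after deployment.

```shell
#!/usr/bin/env bash
# Minimal sketch with hypothetical helpers (not part of Sim Studio).
# Endpoint path and body shape are copied from the curl example above.

# json_payload TEXT: print {"input": "TEXT"} with backslashes and double
# quotes escaped. Single-line text only; newlines are not escaped.
json_payload() {
  local escaped
  escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"input": "%s"}' "$escaped"
}

# run_workflow ID TEXT [BASE_URL]: POST the payload and print the response.
run_workflow() {
  local id="$1" text="$2" base="${3:-http://localhost:3000}"
  curl -fsS -X POST "$base/api/workflow/$id" \
    -H "Content-Type: application/json" \
    -d "$(json_payload "$text")"
}

# Example usage (replace <id> with your workflow's ID):
# run_workflow <id> 'sample data'
```

json_payload escapes only backslashes and double quotes, which is enough for single-line text; for arbitrary input, a JSON tool such as jq is more robust.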

Debugging Workflows

- Open the "Log" view in the editor to inspect each node's inputs and outputs.

- Use version control to save workflow snapshots for easy rollback.

Featured Capabilities

- Local Model Support: Run local models through Ollama for privacy-sensitive scenarios. Once configured, select the model in an Agent node and test its performance.

- Tool Integration: Taking Slack as an example, enter the API token in the Tools node, set the message destination, and test sending a message.

- Development Containers: Develop inside a container via VS Code, with automatic environment setup for rapid iteration.

Caveats

- For Docker installations, make sure port 3000 is not already in use.

- Local models need capable hardware (16 GB RAM recommended; a GPU is optional).

- Production environments should configure RESEND_API_KEY and HTTPS.

- Update the code regularly:

git pull origin main
docker compose up -d --build
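To check a machine against the 16 GB recommendation above, a small helper can report total memory. This is a minimal sketch (total_ram_gib is hypothetical) that reads /proc/meminfo on Linux, where MemTotal is in kB, and sysctl -n hw.memsize on macOS, where the value is in bytes; other systems are not handled.

```shell
#!/usr/bin/env bash
# Minimal sketch with a hypothetical helper (not part of Sim Studio).
# total_ram_gib: print total RAM in GiB with one decimal place.
total_ram_gib() {
  if [ -r /proc/meminfo ]; then
    # Linux: MemTotal is reported in kB (1 GiB = 1048576 kB)
    awk '/^MemTotal:/ { printf "%.1f\n", $2 / 1048576 }' /proc/meminfo
  elif [ "$(uname)" = "Darwin" ]; then
    # macOS: hw.memsize is reported in bytes
    sysctl -n hw.memsize | awk '{ printf "%.1f\n", $1 / 1073741824 }'
  else
    return 1
  fi
}

# Example usage:
# echo "Total RAM: $(total_ram_gib) GiB"
```

On Linux, a machine sold with 16 GB usually reports somewhat less than 16 GiB here, because MemTotal excludes memory reserved by the firmware and kernel.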

Technology Stack

Sim Studio is built on a modern technology stack chosen for performance and development efficiency:

- Framework: Next.js (App Router)

- Database: PostgreSQL + Drizzle ORM

- Authentication: Better Auth

- UI: Shadcn, Tailwind CSS

- State Management: Zustand

- Flow Editor: ReactFlow

- Documentation: Fumadocs

Application Scenarios

- Automated Customer Service

Design multi-agent workflows that integrate a database and Slack to respond to customer questions automatically and notify human support staff; suitable for e-commerce platforms.

- Data Analysis

Build workflows that pull data from a database, call an LLM to generate reports, and deploy the result as an API; suitable for financial analytics.

- Educational Tools

Create interactive learning agents that answer questions with local models and integrate quiz-generation tools; suitable for online education.

Q&A

- Does Sim Studio support Windows?

Yes, but it requires Docker Desktop or Node.js. The Docker approach is recommended for a consistent environment.

- How do I connect to an existing Ollama instance?

Edit docker-compose.yml to add the host.docker.internal mapping and set OLLAMA_HOST.

- What hardware does a local model require?

16 GB RAM is recommended, and a GPU improves performance; models may run slowly on lower-end devices.

- How do I contribute code?

See https://github.com/simstudioai/sim/blob/main/.github/CONTRIBUTING.md.