General Introduction

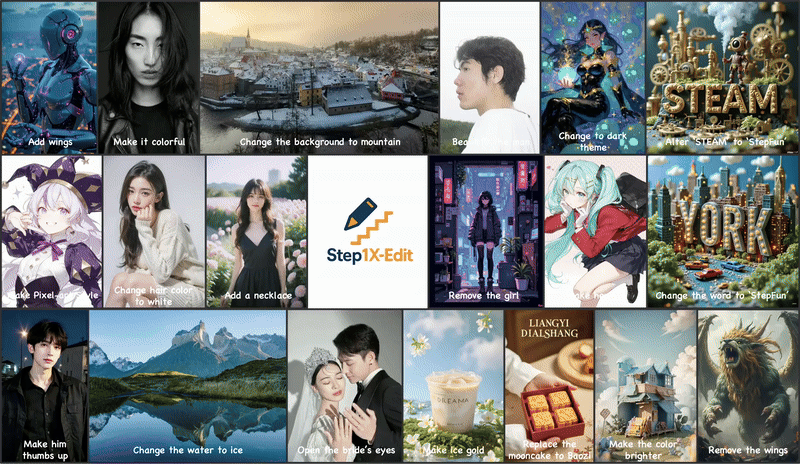

Step1X-Edit is an open-source image editing framework developed by the StepFun AI team and hosted on GitHub. It combines a multimodal large language model (Qwen-VL) with a diffusion transformer (DiT), so users can edit an image with simple natural language commands such as changing the background, removing an object, or switching styles. The project was released on April 25, 2025, and its performance comes close to closed-source models such as GPT-4o and Gemini 2 Flash. Step1X-Edit provides model weights, inference code, and the GEdit-Bench benchmark to support a wide range of editing scenarios. The Apache 2.0 license allows free use and commercial development, which has attracted developers, designers, and researchers. The community is active and has released ComfyUI plug-ins and FP8-quantized versions that lower the hardware requirements.

It is currently available to try for free in Step AI. However, the actual editing results still differ slightly from those of GPT-4o and Gemini 2 Flash.

Function List

- Supports natural language commands for editing images, such as "Change background to beach" or "Remove people from photo".

- Parses image and text commands with a multimodal large language model (Qwen-VL) to produce precise edits.

- Generates high-quality images with a Diffusion Transformer (DiT) while preserving the original image's details.

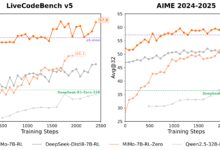

- Provides the GEdit-Bench benchmark for evaluating editing performance on real user instructions.

- Supports FP8-quantized models, which lower hardware requirements and fit low-memory GPUs.

- Integrates with ComfyUI through a plug-in, simplifying workflows.

- Provides an online demo that allows users to experience editing functionality without installation.

- Open-source model weights and inference code support secondary development and research.

Using Help

Installation process

To use Step1X-Edit, you need to install the environment and download the model weights. Below are the detailed steps, suitable for Linux systems (Ubuntu 20.04 or higher recommended):

- Preparing the environment

Make sure Python 3.10 or higher is installed, along with the CUDA toolkit (12.1 recommended). A GPU is recommended. 80 GB of GPU memory is ideal (e.g. NVIDIA H800), but the FP8-quantized version runs with less (16 GB or 24 GB). Create and activate a conda environment:

    conda create -n step1x python=3.10
    conda activate step1x
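A quick preflight check can confirm these basics before you install anything. The sketch below uses hypothetical helpers that are not part of the Step1X-Edit repository. It compares the interpreter version against 3.10. It also treats `nvidia-smi` on the PATH as a rough sign that an NVIDIA driver is installed. It does not verify the CUDA toolkit version.

```python
import shutil
import sys


def python_version_ok(version_info=sys.version_info, minimum=(3, 10)):
    """Return True if the given (major, minor, ...) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


def nvidia_driver_visible():
    """Rough proxy for an installed NVIDIA driver: is nvidia-smi on the PATH?"""
    return shutil.which("nvidia-smi") is not None


if __name__ == "__main__":
    status = "OK" if python_version_ok() else "needs 3.10 or higher"
    print(f"Python {sys.version.split()[0]}: {status}")
    if nvidia_driver_visible():
        print("nvidia-smi found")
    else:
        print("nvidia-smi not found; check the GPU driver installation")
```

Run it inside the activated `step1x` environment so that it checks the interpreter you will actually use.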

- Clone the repository

Download the Step1X-Edit project code from GitHub:

    git clone https://github.com/stepfun-ai/Step1X-Edit.git
    cd Step1X-Edit

- Install dependencies

Install PyTorch (2.3.1 or 2.5.1 recommended) and the remaining requirements:

    pip install torch==2.3.1 torchvision --index-url https://download.pytorch.org/whl/cu121
    pip install -r requirements.txt

Install Flash Attention (optional, for faster inference):

    pip install flash-attn --no-build-isolation

If the Flash Attention installation fails, the repository provides a script that locates a pre-compiled wheel suited to your system:

    python scripts/find_flash_attn_wheel.py

- Download model weights

Download the model weights and the Variational Autoencoder (VAE) from Hugging Face or ModelScope:

- Step1X-Edit model: step1x-edit-i1258.safetensors (approx. 24.9 GB)
- VAE: vae.safetensors (approx. 335 MB)
- Qwen-VL model: Qwen/Qwen2.5-VL-7B-Instruct

To automate the downloads with a Python script:

    import os
    from huggingface_hub import snapshot_download

    target_dir = "models/step1x"
    os.makedirs(target_dir, exist_ok=True)

    # Download the Step1X-Edit model
    snapshot_download(repo_id="stepfun-ai/Step1X-Edit", local_dir=target_dir,
                      allow_patterns=["step1x-edit-i1258.safetensors"])
    # Download the VAE
    snapshot_download(repo_id="stepfun-ai/Step1X-Edit", local_dir=target_dir,
                      allow_patterns=["vae.safetensors"])
    # Download Qwen-VL into its own subfolder
    qwen_dir = os.path.join(target_dir, "Qwen2.5-VL-7B-Instruct")
    snapshot_download(repo_id="Qwen/Qwen2.5-VL-7B-Instruct", local_dir=qwen_dir)
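After the download finishes, a small check can confirm that the three expected entries exist before you run inference. This is a sketch. The `models/step1x` default mirrors the download script above, and the helper name is hypothetical. It checks only that each entry is present, not file integrity.

```python
from pathlib import Path

# Entries the download script above is expected to create under target_dir.
EXPECTED = [
    "step1x-edit-i1258.safetensors",
    "vae.safetensors",
    "Qwen2.5-VL-7B-Instruct",  # directory holding the Qwen-VL snapshot
]


def missing_files(target_dir="models/step1x"):
    """Return the expected entries that do not exist under target_dir."""
    root = Path(target_dir)
    return [name for name in EXPECTED if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All model files are in place.")
```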

- Run inference

Edit an image with the provided inference script. For example, to change the background:

    python scripts/run_inference.py --image_path assets/demo.png --prompt "Change the background to a night sky" --output_path output.png

Parameter description:

- --image_path: path to the input image.
- --prompt: the editing command (e.g. "Change sky to sunset").
- --output_path: path for the output image.
- --size_level: output resolution (default 512x512; 1024x1024 requires more memory).
- --seed: random seed, for reproducible results.
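To script several edits from Python, for example a seed sweep, you can wrap the command shown above. This sketch assumes the script path and flags exactly as this article lists them. Check them against the repository's current README, because the entry point may differ. The `size_level` value format (for example `512` or `1024`) is also an assumption. The function names are hypothetical.

```python
import shlex
import subprocess
import sys


def build_edit_command(image_path, prompt, output_path,
                       size_level=None, seed=None,
                       script="scripts/run_inference.py"):
    """Assemble the argument list for the inference script (flags as listed above)."""
    cmd = [sys.executable, script,
           "--image_path", image_path,
           "--prompt", prompt,
           "--output_path", output_path]
    # Optional flags are appended only when a value is given.
    if size_level is not None:
        cmd += ["--size_level", str(size_level)]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    return cmd


def run_edit(**kwargs):
    """Run one edit; raises CalledProcessError if the script exits non-zero."""
    subprocess.run(build_edit_command(**kwargs), check=True)


if __name__ == "__main__":
    # Print a seed sweep instead of running it, so nothing executes by accident.
    for seed in (1, 2, 3):
        cmd = build_edit_command("assets/demo.png",
                                 "Change the background to a night sky",
                                 f"output_seed{seed}.png", seed=seed)
        print(shlex.join(cmd))
```

Passing the arguments as a list, rather than one shell string, avoids quoting problems with prompts that contain spaces or quotation marks.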

Using the ComfyUI plug-in

A community-developed ComfyUI plug-in is available for users who want to integrate Step1X-Edit into their workflows.

- Clone the ComfyUI plugin repository:

      cd path/to/ComfyUI/custom_nodes
      git clone https://github.com/quank123wip/ComfyUI-Step1X-Edit.git

- Place the following in the ComfyUI/models/Step1x-Edit directory: step1x-edit-i1258.safetensors, vae.safetensors, and the Qwen-VL model folder Qwen2.5-VL-7B-Instruct.

- Start ComfyUI and load the Step1X-Edit node.

- In the ComfyUI interface, upload an image, enter an editing command (e.g. "Add Wings"), and run the workflow to generate the result.
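If you already downloaded the weights with the script from the installation section, symbolic links avoid keeping a second copy of roughly 25 GB. The helper below is a hypothetical sketch. `models/step1x` and the ComfyUI path are placeholders for your own locations. On Windows, creating symlinks may require extra permissions. In that case, `shutil.copy2` and `shutil.copytree` are the fallback.

```python
from pathlib import Path

# Entries the article lists for ComfyUI/models/Step1x-Edit.
ENTRIES = ["step1x-edit-i1258.safetensors", "vae.safetensors",
           "Qwen2.5-VL-7B-Instruct"]


def link_into_comfyui(source_dir, comfyui_root):
    """Symlink already-downloaded weights into ComfyUI/models/Step1x-Edit.

    Entries that already exist in the destination are left untouched.
    Returns the list of newly created links.
    """
    src = Path(source_dir).resolve()
    dest = Path(comfyui_root) / "models" / "Step1x-Edit"

    # Fail early, before creating anything, if a source entry is missing.
    missing = [name for name in ENTRIES if not (src / name).exists()]
    if missing:
        raise FileNotFoundError(f"not found in {src}: {', '.join(missing)}")

    dest.mkdir(parents=True, exist_ok=True)
    created = []
    for name in ENTRIES:
        link = dest / name
        # is_symlink() also catches dangling links, which exists() reports as False.
        if link.exists() or link.is_symlink():
            continue
        target = src / name
        link.symlink_to(target, target_is_directory=target.is_dir())
        created.append(link)
    return created
```

For example, `link_into_comfyui("models/step1x", "path/to/ComfyUI")` creates the three links. Restart ComfyUI afterwards so that it picks up the new files.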

Main Functions

- Natural language editing

The user uploads an image and enters a text command. For example, to change the background of a photo to a mountain view, enter "change background to mountain view". Qwen-VL parses the command and extracts its meaning, and the DiT then generates the new image. Commands should be clear and specific: "Change sky to blue starry sky" works better than "Beautify sky".

- Object removal or addition

Use commands such as "Remove a person from a photo" or "Add a tree". The model edits only the specified area and keeps the rest of the image intact. In complex scenes, refining the command over several attempts can improve the results.

- Style transfer

Stylized edits are supported, such as "Convert image to pixel art style" or "Change to Miyazaki style". The model generates the stylized image through the diffusion process.

- Online demo

Visit the Hugging Face Space (https://huggingface.co/spaces/stepfun-ai/Step1X-Edit), upload an image, and enter instructions to try it directly. Each generation is limited by GPU time, and free users get two attempts.

Caveats

- Hardware requirements: The FP8-quantized version reduces the memory requirement to 16 GB, so it can run on GPUs such as the RTX 3090 Ti.

- Command optimization: Complex edits need detailed instructions, such as "change the background to snowy mountains and keep the foreground characters unchanged".

- Community Support: The GitHub repository has an active community, so check out Issues or Discussions if you run into problems.
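The FP8 saving follows from the number of bytes per parameter. This back-of-the-envelope sketch assumes that the 24.9 GB `step1x-edit-i1258.safetensors` file stores 16-bit (2-byte) weights. FP8 uses 1 byte per weight, so the DiT weights shrink to about half. The figures cover weights only. Activations, the VAE, and the Qwen-VL encoder add to the real memory requirement.

```python
def weight_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Assumption: the 24.9 GB checkpoint holds 2-byte (BF16/FP16) weights.
checkpoint_gb = 24.9
num_params = checkpoint_gb * 1e9 / 2   # about 12.45 billion parameters

print(f"16-bit: {weight_gb(num_params, 2):.2f} GB")  # 16-bit: 24.90 GB
print(f"FP8:    {weight_gb(num_params, 1):.2f} GB")  # FP8:    12.45 GB
```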

Application Scenarios

- Content creation

Designers use Step1X-Edit to quickly change backgrounds or adjust styles for advertising materials. For example, they can give a product photo a holiday-themed background to make it more visually appealing.

- Personal photo editing

Ordinary users can improve their photos through the online demo, for example by removing background clutter or turning a daytime photo into a nighttime one. The demo is easy to use and requires no specialized skills.

- E-commerce product optimization

E-commerce platforms use Step1X-Edit to generate product display images in different settings, such as placing clothes against a beach or city background, which saves on photo shoot costs.

- Academic research

Researchers use the GEdit-Bench dataset and model weights to develop new image editing algorithms or compare model performance.

QA

- What resolutions does Step1X-Edit support?

It supports 512x512 and 1024x1024. 512x512 is faster and needs less memory, while 1024x1024 gives more detail but needs more memory.

- How can I optimize my editing results?

Use specific instructions and avoid vague descriptions. Trying several different wordings can also improve results. For example, "change the sky to a red sunset" is clearer than "change the sky".

- Does it support Chinese commands?

Yes. The model supports Chinese commands, with results comparable to English. Describe your requirements in concise language.

- How does the FP8-quantized version differ?

The FP8 version needs less video memory (it runs in 16 GB) but may lose a little detail. It suits users with limited hardware resources.

- Does it require an internet connection?

Running locally requires no internet connection once the model weights are downloaded. The online demo requires access to the Hugging Face Space.
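The memory gap between 512x512 and 1024x1024 is larger than the 4x pixel ratio suggests. This rough sketch assumes a FLUX-style pipeline. In that design, the VAE downsamples by 8 and the DiT groups latents into 2x2 patches, giving one token per 16x16 pixel block. These factors are assumptions and are not confirmed by the text above. Doubling the side length quadruples the token count. Full self-attention cost grows with the square of the token count.

```python
def image_tokens(height, width, vae_factor=8, patch=2):
    """Number of DiT tokens for an image, under the stated assumptions."""
    stride = vae_factor * patch  # pixels per token along each axis
    return (height // stride) * (width // stride)


small = image_tokens(512, 512)    # 32 * 32 tokens
large = image_tokens(1024, 1024)  # 64 * 64 tokens

print(small, large)                               # 1024 4096
print("token ratio:", large // small)             # token ratio: 4
print("attention ratio:", (large // small) ** 2)  # attention ratio: 16
```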