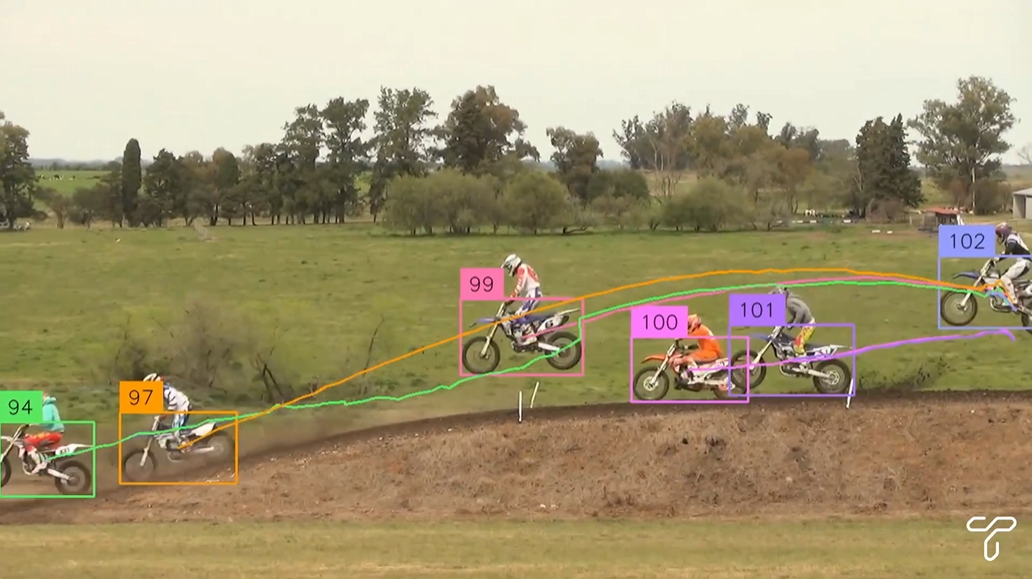

Trackers: An Open Source Python Library for Video Object Tracking

General Introduction

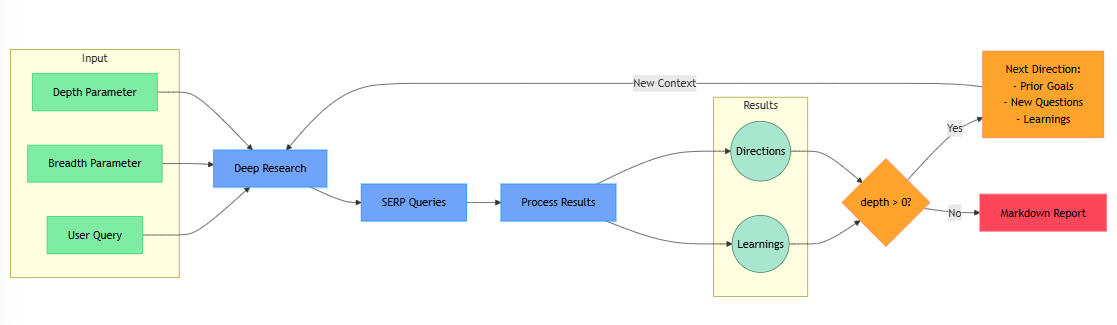

Trackers is an open source Python tool library focused on multi-object tracking in video. It integrates a variety of leading tracking algorithms, such as SORT and DeepSORT, allowing users to combine different object detection models (e.g., YOLO, RT-DETR) for flexible video analysis. Users can achieve detection, tracking and labeling of video frames with simple code for traffic monitoring, industrial automation and other scenarios.

Function List

- Multiple tracking algorithms are supported, including SORT and DeepSORT, with plans to add more in the future.

- Compatible with leading object detection models such as YOLO, RT-DETR and RF-DETR.

- Provides video frame annotation, with support for displaying tracking IDs and bounding boxes.

- The modular design allows the user to freely combine detectors and trackers.

- Supports processing frames from video files or live video streams.

- Free and open source under the Apache 2.0 license, with the code publicly available.
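To make the idea of "tracking by detection" more concrete, here is a minimal sketch of how detections in a new frame can be associated with existing tracks by bounding-box overlap (IoU). It is a simplification and not the library's implementation: SORT combines Kalman-filter motion prediction with Hungarian assignment, whereas this sketch uses plain greedy matching. The names `iou` and `greedy_match` and the default threshold of 0.3 are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_match(
    tracks: Dict[int, Box], detections: List[Box], min_iou: float = 0.3
) -> Dict[int, int]:
    """Map detection index -> track ID, taking the best-overlapping pairs first.

    Hypothetical helper for illustration; not part of the Trackers API.
    """
    pairs = sorted(
        (
            (iou(box, det), track_id, det_index)
            for track_id, box in tracks.items()
            for det_index, det in enumerate(detections)
        ),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used_tracks = set()
    for score, track_id, det_index in pairs:
        if score < min_iou:
            break  # pairs are sorted, so all remaining overlaps are too small
        if track_id in used_tracks or det_index in matches:
            continue  # each track and each detection is matched at most once
        matches[det_index] = track_id
        used_tracks.add(track_id)
    return matches
```

Detections left unmatched would start new tracks, and tracks left unmatched would eventually be dropped; a real tracker handles both cases internally.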

Usage Guide

Installation process

To use Trackers, you need to install the dependencies in your Python environment. Here are the detailed installation steps:

- Prepare the environment

Ensure that Python 3.6 or later is installed on your system. A virtual environment is recommended to avoid dependency conflicts:

```bash
python -m venv venv
source venv/bin/activate  # On Windows, use venv\Scripts\activate
```

- Install the Trackers library

You can install the latest version from GitHub:

```bash
pip install git+https://github.com/roboflow/trackers.git
```

Or install the released stable version:

```bash
pip install trackers
```

- Install dependent libraries

Trackers depends on `supervision`, `torch`, and other libraries. Depending on the detection model used, additional installations may be required.

For the YOLO model:

```bash
pip install ultralytics
```

For the RT-DETR model:

```bash
pip install transformers
```

Make sure `opencv-python` is installed for video processing:

```bash
pip install opencv-python
```

- Verify the installation

Run the following code to check that the installation was successful:

```python
from trackers import SORTTracker

print(SORTTracker)
```
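If you want to check all of the dependencies at once rather than only `SORTTracker`, a small helper along these lines may be useful. It is a sketch using only the standard library; the function name `missing_packages` is hypothetical, and the list of required modules is an assumption based on the packages named in the installation steps (note that `opencv-python` is imported as `cv2`).

```python
from importlib.util import find_spec
from typing import Iterable, List


def missing_packages(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment.

    Hypothetical helper for illustration; it looks modules up without importing them.
    """
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    # Import names, not pip names: opencv-python is imported as cv2.
    required = ["trackers", "supervision", "torch", "cv2"]
    missing = missing_packages(required)
    print("All packages found" if not missing else f"Missing: {', '.join(missing)}")
```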

Usage

The core function of Trackers is to process video frames through object detection and tracking algorithms. The following is a detailed procedure for using SORTTracker in conjunction with the YOLO model:

Example: Video Object Tracking with YOLO and SORTTracker

- Prepare the video file

Make sure you have an input video file, for example `input.mp4`, and place it in the project directory.

- Write the code

Below is a complete example that loads a YOLO model, tracks objects in the video, and labels the output video with tracking IDs:

```python
import supervision as sv
from trackers import SORTTracker
from ultralytics import YOLO

# Initialize the tracker, the detection model, and the annotator
tracker = SORTTracker()
model = YOLO("yolo11m.pt")
annotator = sv.LabelAnnotator(text_position=sv.Position.CENTER)


# Callback that processes a single frame
def callback(frame, _):
    result = model(frame)[0]
    detections = sv.Detections.from_ultralytics(result)
    detections = tracker.update(detections)
    return annotator.annotate(frame, detections, labels=detections.tracker_id)


# Process the video
sv.process_video(
    source_path="input.mp4",
    target_path="output.mp4",
    callback=callback,
)
```

Code description:

- `YOLO("yolo11m.pt")` loads the pre-trained YOLO11 model.
- `SORTTracker()` initializes the SORT tracker.
- `sv.Detections.from_ultralytics` converts the YOLO detection results to the Supervision format.
- `tracker.update(detections)` updates the tracking state and assigns tracking IDs.
- `annotator.annotate` draws the tracking ID label for each detection on the frame.
- `sv.process_video` processes the video frame by frame and saves the result.

- Run the code

Save the code as `track.py`, and then run:

```bash
python track.py
```

- Output video

`output.mp4` will contain annotations with tracking IDs.
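The `labels` argument of `sv.LabelAnnotator.annotate` is documented as a list of strings, one per detection. If you want explicit string labels, or want to show the class name next to the ID, a helper like the following could be used. This is a sketch: `make_labels` and the `#<id>` label format are hypothetical choices, not part of Trackers or Supervision.

```python
from typing import Iterable, List, Optional, Sequence


def make_labels(
    tracker_ids: Optional[Iterable[int]],
    class_names: Optional[Sequence[str]] = None,
) -> List[str]:
    """Build one label string per detection, e.g. '#3' or '#3 car'.

    Hypothetical helper for illustration only.
    """
    if tracker_ids is None:
        return []  # nothing has been tracked yet
    ids = [int(tracker_id) for tracker_id in tracker_ids]
    if class_names is None:
        return [f"#{tracker_id}" for tracker_id in ids]
    return [f"#{tracker_id} {name}" for tracker_id, name in zip(ids, class_names)]
```

Inside the callback, this would replace the raw ID array, for example `labels=make_labels(detections.tracker_id)`.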

Featured Operations

- Switching detection models

Trackers supports a variety of detection models. For example, to use the RT-DETR model:

```python
import supervision as sv
import torch
from trackers import SORTTracker
from transformers import RTDetrImageProcessor, RTDetrV2ForObjectDetection

tracker = SORTTracker()
processor = RTDetrImageProcessor.from_pretrained("PekingU/rtdetr_v2_r18vd")
model = RTDetrV2ForObjectDetection.from_pretrained("PekingU/rtdetr_v2_r18vd")
annotator = sv.LabelAnnotator()


def callback(frame, _):
    inputs = processor(images=frame, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)

    # Rescale the predictions to the original frame size
    h, w, _ = frame.shape
    results = processor.post_process_object_detection(
        outputs, target_sizes=torch.tensor([(h, w)]), threshold=0.5
    )[0]

    detections = sv.Detections.from_transformers(results, id2label=model.config.id2label)
    detections = tracker.update(detections)
    return annotator.annotate(frame, detections, labels=detections.tracker_id)


sv.process_video(source_path="input.mp4", target_path="output.mp4", callback=callback)
```

- Customizing annotations

You can adjust the annotation style, for example by changing the label position or adding a bounding box. This snippet reuses the `model` and `tracker` from the YOLO example:

```python
annotator = sv.LabelAnnotator(text_position=sv.Position.TOP_LEFT)
# Named BoundingBoxAnnotator in older supervision releases
box_annotator = sv.BoxAnnotator()


def callback(frame, _):
    result = model(frame)[0]
    detections = sv.Detections.from_ultralytics(result)
    detections = tracker.update(detections)
    # Draw the boxes first, then the ID labels on top
    frame = box_annotator.annotate(frame, detections)
    return annotator.annotate(frame, detections, labels=detections.tracker_id)
```

- Processing live video streams

If you need to handle camera input, you can feed frames to the same `callback` yourself:

```python
import cv2

cap = cv2.VideoCapture(0)  # Open the default camera
while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        break
    annotated_frame = callback(frame, None)
    cv2.imshow("Tracking", annotated_frame)
    # Press "q" to quit
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break
cap.release()
cv2.destroyAllWindows()
```

Caveats

- Performance optimization: Processing long videos may result in high memory usage. You can limit the buffer size by setting an environment variable:

```bash
export VIDEO_SOURCE_BUFFER_SIZE=2
```

- Model selection: Make sure the detection model is compatible with the tracker. YOLO and RT-DETR are the recommended options.

- Tuning during testing: If tracking IDs switch frequently, try adjusting the confidence threshold of the detection model or the parameters of the tracker, e.g., the `track_buffer` parameter.
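To see why a larger track buffer can reduce ID switches, consider this minimal sketch of the bookkeeping involved. It is not the library's implementation: the class name `TrackBuffer`, its `step` method, and the default of 30 frames are assumptions for illustration. The idea is that a track which goes unmatched for a few frames (for example, during a brief occlusion) keeps its ID as long as the gap does not exceed the buffer.

```python
from typing import Dict, Iterable, Set


class TrackBuffer:
    """Keeps a track ID alive for up to `track_buffer` consecutive unmatched frames.

    Hypothetical class for illustration; the default of 30 is an assumed value.
    """

    def __init__(self, track_buffer: int = 30) -> None:
        self.track_buffer = track_buffer
        self.missed: Dict[int, int] = {}  # track ID -> consecutive frames without a match

    def step(self, matched_ids: Iterable[int]) -> Set[int]:
        """Register the IDs matched in this frame and return the IDs still alive."""
        matched = set(matched_ids)
        for track_id in matched:
            self.missed[track_id] = 0  # seen again: reset the miss counter
        for track_id in list(self.missed):
            if track_id not in matched:
                self.missed[track_id] += 1
                if self.missed[track_id] > self.track_buffer:
                    del self.missed[track_id]  # gone for too long: retire the ID
        return set(self.missed)
```

With a buffer of 2, an object that disappears for two frames and then reappears can still be re-associated with its old ID; after three missed frames the ID is retired and a reappearing object would get a new one.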

Application Scenarios

- Traffic monitoring

Trackers can be used to analyze vehicle and pedestrian trajectories on the road. For example, with YOLO detecting vehicles in urban traffic camera footage, SORTTracker tracks the path of each vehicle to generate traffic statistics or violation records.

- Industrial automation

On production lines, Trackers tracks moving objects, such as products on conveyor belts. Combined with a detection model that identifies the product type, it records the movement path of each product for quality control or inventory management.

- Motion analysis

In sports videos, Trackers tracks the movement of a player or a ball. For example, it can follow the running path of a player in a soccer game to generate heat maps or statistics.

- Safety monitoring

In security systems, Trackers can track the movement of suspicious targets. For example, specific people in shopping mall camera footage are detected and tracked, and their paths are recorded for subsequent analysis.
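Most of these scenarios come down to accumulating, per tracking ID, where the object was in each frame. A minimal sketch of that step is shown below; it assumes you have already extracted `(tracker_id, box)` pairs from each frame's tracked detections, and the name `collect_paths` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Point = Tuple[float, float]


def collect_paths(frames: Iterable[Iterable[Tuple[int, Box]]]) -> Dict[int, List[Point]]:
    """Collect the box-center trajectory of every tracking ID across frames.

    Hypothetical helper for illustration; each frame is an iterable of
    (tracker_id, box) pairs.
    """
    paths: Dict[int, List[Point]] = defaultdict(list)
    for tracked in frames:
        for tracker_id, (x1, y1, x2, y2) in tracked:
            paths[tracker_id].append(((x1 + x2) / 2, (y1 + y2) / 2))
    return dict(paths)
```

The number of keys in the result gives a count of distinct tracked objects, and the point lists can feed a heat map or a path plot.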

Q&A

- What tracking algorithms does Trackers support?

SORT and DeepSORT are currently supported, with more algorithms such as ByteTrack planned for future releases.

- How can I improve tracking stability?

Make sure the detection model is accurate, adjust its confidence threshold (e.g., 0.5), or increase the tracker's `track_buffer` parameter to reduce ID switching.

- Does it support real-time video processing?

Yes, Trackers supports live video streams such as camera input. Frames need to be captured with OpenCV and processed one at a time.

- How do I deal with out-of-memory problems?

Set the environment variable `VIDEO_SOURCE_BUFFER_SIZE=2` to limit the frame buffer, or use a more lightweight model such as YOLO11n.
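As an illustration of the confidence-threshold advice above, the sketch below drops low-confidence detections before they reach the tracker, which tends to reduce spurious tracks. The `Detection` dataclass and `filter_by_confidence` function are hypothetical stand-ins written in plain Python so the example is self-contained.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Detection:
    """Hypothetical stand-in for a single detection (illustration only)."""

    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    confidence: float


def filter_by_confidence(detections: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Keep only detections whose confidence is at least `threshold`."""
    return [d for d in detections if d.confidence >= threshold]
```

With Supervision, the same kind of filtering can be expressed with a boolean mask, for example `detections = detections[detections.confidence > 0.5]`, applied before `tracker.update(detections)`.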

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
