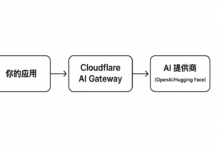

This article describes how to use the Ollama API from Java. It is designed to help developers get up to speed quickly and take full advantage of Ollama's capabilities. You can call the Ollama API directly in your application, or you can call Ollama through Spring AI components. After working through this document, you should be able to integrate Ollama into your own projects.

I. Environment preparation

To use the Ollama API in Java, make sure you have the following environment and tools ready:

- Java Development Kit (JDK): install JDK 1.8 or later.

- Build tools: Maven or Gradle, for project dependency management.

- HTTP client library: choose a suitable one, such as Apache HttpClient or OkHttp.
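
To make the role of the HTTP client concrete, here is a minimal sketch of calling Ollama's REST API directly. It uses only the JDK's built-in HttpURLConnection, so it also runs on JDK 1.8, and it posts to the /api/generate route on the default port 11434. The class name OllamaHttpSketch and its helper methods are illustrative, the model name is borrowed from the examples later in this article, and the hand-written JSON escaping is a stand-in for a proper JSON library.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.Scanner;

// Illustrative sketch only; the class and method names are not part of any library.
class OllamaHttpSketch {

    // Escapes a string so it can be embedded inside a JSON string literal.
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Builds the JSON body for POST /api/generate.
    // "stream": false asks Ollama for one complete reply instead of a stream of chunks.
    static String buildGenerateBody(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model)
                + "\",\"prompt\":\"" + jsonEscape(prompt)
                + "\",\"stream\":false}";
    }

    // Sends the request and returns the raw JSON response text.
    static String generate(String baseUrl, String model, String prompt) throws IOException {
        HttpURLConnection conn =
                (HttpURLConnection) new URL(baseUrl + "/api/generate").openConnection();
        conn.setRequestMethod("POST");
        conn.setRequestProperty("Content-Type", "application/json");
        conn.setConnectTimeout(5000);
        conn.setDoOutput(true);
        try (OutputStream out = conn.getOutputStream()) {
            out.write(buildGenerateBody(model, prompt).getBytes(StandardCharsets.UTF_8));
        }
        try (InputStream in = conn.getInputStream();
             Scanner scanner = new Scanner(in, "UTF-8").useDelimiter("\\A")) {
            return scanner.hasNext() ? scanner.next() : "";
        }
    }

    public static void main(String[] args) {
        try {
            System.out.println(
                    generate("http://localhost:11434", "llama2:latest", "Why is the sky blue?"));
        } catch (IOException e) {
            System.err.println("Could not reach Ollama: " + e.getMessage());
        }
    }
}
```

The returned text is a JSON object whose response field holds the generated answer. In a real project, Apache HttpClient or OkHttp together with a JSON library would replace the hand-rolled parts of this sketch.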

II. Using Ollama directly

Many third-party components on GitHub make it easy to integrate Ollama into your application. Taking Asedem's OllamaJavaAPI as an example, integration takes the following 3 steps (Maven is used here for project management):

- Add the Ollama dependency to pom.xml

    <repositories>
      <repository>
        <id>jitpack.io</id>
        <url>https://jitpack.io</url>
      </repository>
    </repositories>

    <dependencies>
      <dependency>
        <groupId>com.github.Asedem</groupId>
        <artifactId>OllamaJavaAPI</artifactId>
        <version>master-SNAPSHOT</version>
      </dependency>
    </dependencies>

- Initialize Ollama

    // By default, it connects to localhost:11434
    Ollama ollama = Ollama.initDefault();

    // Alternatively, for custom values (use one initialization or the other):
    // Ollama ollama = Ollama.init("http://localhost", 11434);

- Use Ollama

- Dialogue

    String model = "llama2:latest"; // the model to use
    String prompt = "Why is the sky blue?"; // the prompt to send
    GenerationResponse response = ollama.generate(new GenerationRequest(model, prompt));

    // Print the generated response
    System.out.println(response.response());

- List local models

    List<Model> models = ollama.listModels(); // returns a list of Model objects

- Show model information

    ModelInfo modelInfo = ollama.showInfo("llama2:latest"); // returns a ModelInfo object

- Copy a model

    boolean success = ollama.copy("llama2:latest", "llama2-backup"); // true if the copy succeeded

- Delete a model

    boolean success = ollama.delete("llama2-backup"); // true if the deletion succeeded
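
For orientation, the operations above correspond to routes in Ollama's REST API. The sketch below lists the HTTP method and path that Ollama's API documentation gives for each operation. Whether the wrapper library calls exactly these routes internally is an assumption, since its source is not shown in this article, and the OllamaEndpoints class itself is purely illustrative.

```java
// Illustrative lookup table; not part of the OllamaJavaAPI library.
class OllamaEndpoints {

    // One entry per operation in the list above, paired with its REST route.
    enum Operation {
        GENERATE("POST", "/api/generate"),
        LIST_MODELS("GET", "/api/tags"),
        SHOW_INFO("POST", "/api/show"),
        COPY("POST", "/api/copy"),
        DELETE("DELETE", "/api/delete");

        final String method;
        final String path;

        Operation(String method, String path) {
            this.method = method;
            this.path = path;
        }

        // Renders the route as "METHOD url" for a given server base URL.
        String describe(String baseUrl) {
            return method + " " + baseUrl + path;
        }
    }

    public static void main(String[] args) {
        for (Operation op : Operation.values()) {
            System.out.println(op.name() + " -> " + op.describe("http://localhost:11434"));
        }
    }
}
```

Knowing the underlying routes helps when debugging: any of them can be exercised with a plain HTTP client to check whether a problem lies in the wrapper or in the Ollama server.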

III. Calling Ollama with Spring AI

Introduction to Spring AI

Spring AI is an application framework designed for artificial intelligence engineering. The core features are listed below:

- API support across AI providers: Spring AI provides a portable set of APIs for interacting with chat, text-to-image, and embedding models from multiple AI service providers.

- Synchronous and streaming API options: the framework supports both synchronous and streaming calls, so developers can choose how to interact with a model.

- Model-specific feature access: developers can reach model-specific features through configuration parameters, which allows more granular control.
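
The difference between the synchronous and streaming styles can be sketched in plain Java. This example does not use Spring AI at all: the call and stream methods and the fixed list of chunks are hypothetical stand-ins that only show how the two calling conventions differ from the caller's point of view.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical stand-in for a model client; none of these names come from Spring AI.
class SyncVsStreamingSketch {

    // Pretend the model produces its reply as a sequence of text chunks.
    static final List<String> CHUNKS =
            Arrays.asList("The sky ", "is blue ", "because of Rayleigh scattering.");

    // Synchronous style: block until the whole reply is ready, then return it in one piece.
    static String call(String prompt) {
        StringBuilder reply = new StringBuilder();
        for (String chunk : CHUNKS) {
            reply.append(chunk);
        }
        return reply.toString();
    }

    // Streaming style: hand each chunk to the caller as soon as it is produced.
    static void stream(String prompt, Consumer<String> onChunk) {
        for (String chunk : CHUNKS) {
            onChunk.accept(chunk);
        }
    }

    public static void main(String[] args) {
        // The caller gets the full text at once.
        System.out.println(call("Why is the sky blue?"));

        // The caller sees the text grow chunk by chunk.
        stream("Why is the sky blue?", chunk -> System.out.print(chunk));
        System.out.println();
    }
}
```

Streaming suits chat interfaces, where showing partial output early feels more responsive. The synchronous style is simpler when the whole reply is needed before anything else can happen.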

Using Spring AI

- Add the Spring AI dependency to pom.xml

    <dependencies>
      <dependency>
        <groupId>io.springboot.ai</groupId>
        <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
        <version>1.0.3</version>
      </dependency>
    </dependencies>

Note: When you create the project in IntelliJ IDEA, you can select the dependencies directly and IDEA fills in pom.xml automatically, so no manual editing is needed, as shown in the following figure:

- Add the configuration for Spring AI and Ollama to the configuration file of your Spring Boot application. Example:

    spring:
      ai:
        ollama:
          base-url: http://localhost:11434
          chat:
            options:
              model: llama3.1:latest
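
If the project uses application.properties rather than a YAML file, the same two settings can be written in flat form. This is a sketch that assumes Spring AI's standard spring.ai.ollama property prefix; the values are the ones from the example above.

```properties
# Address of the local Ollama server
spring.ai.ollama.base-url=http://localhost:11434
# Default model for chat requests
spring.ai.ollama.chat.options.model=llama3.1:latest
```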

- Use Ollama for text generation or dialog:

First create a Spring Boot controller to call the Ollama API:

    import jakarta.annotation.Resource;
    import org.springframework.ai.chat.model.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.ollama.OllamaChatModel;
    import org.springframework.ai.ollama.api.OllamaOptions;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class OllamaController {

        @Resource
        private OllamaChatModel ollamaChatModel;

        @RequestMapping(value = "/ai/ollama")
        public Object ollama(@RequestParam(value = "msg") String msg) {
            ChatResponse chatResponse = ollamaChatModel.call(new Prompt(msg, OllamaOptions.create()
                    .withModel("llama3.1:latest") // which model to use
                    .withTemperature(0.5F)));
            String content = chatResponse.getResult().getOutput().getContent();
            System.out.println(content);
            return content;
        }
    }
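
A note on the msg parameter that this controller reads: when the endpoint is called from code rather than typed into a browser, the prompt should be URL-encoded so that spaces, question marks, and non-ASCII characters survive the trip. The sketch below builds such a URL with the JDK's URLEncoder. The class name OllamaControllerUrl and the buildUrl helper are illustrative, and the base URL assumes Spring Boot's default port 8080.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Illustrative helper; not part of Spring AI or the controller above.
class OllamaControllerUrl {

    // Builds the request URL for the /ai/ollama endpoint, encoding the msg value
    // in form style (spaces become '+', reserved characters become %XX).
    static String buildUrl(String baseUrl, String msg) {
        try {
            return baseUrl + "/ai/ollama?msg=" + URLEncoder.encode(msg, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is guaranteed to be available on every Java platform.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(buildUrl("http://localhost:8080", "Why is the sky blue?"));
    }
}
```

Browsers perform this encoding automatically for text typed into the address bar, which is why the manual step only matters for programmatic clients.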

Then run the program and open the URL http://localhost:8080/ai/ollama?msg="prompt" in your browser, replacing prompt with your own text. The result is shown below:
